But is it true? Brian Tse of Concordia AI says China is more focused on practical applications & less AGI-pilled than the US

Full episode out tomorrow!

Plus, because the headline animation is so intuitively appealing, I was almost taken in by it myself!

In this short 🧵:

1) what the authors actually did, and why "no world models" simply does not follow

2) a few highlights from the literature on AI world models

x.com/keyonV/stat...

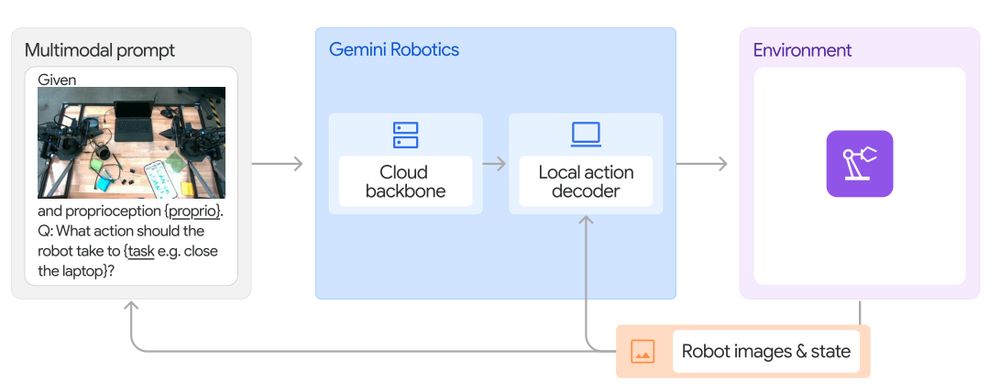

But … that makes the Operator model challenging. The actual contact-with-the-world agent is likely going to be taking remote direction

See also: Gemini Robotics 🤖 ☁️

Tell it that you don't want it to confirm every step. It still will, in some cases for the better.

It can do ~100-action sequences; it tries new strategies rather than getting stuck.

Here it uses @waymark – sadly no HTML5 canvas support! 😦

OpenAI is attempting the second-biggest theft in human history.

The amicus brief is completely correct. The terms they've suggested are completely unacceptable."

@TheZvi on the @elonmusk vs @OpenAI lawsuit

and GPT-4.1 seems less aligned than GPT-4o

The more RL you apply, the more misaligned they get, & we don't seem to be trying that hard"

@TheZvi on why his p(doom) is up to 70%

Doesn't sound good either!

Scary stuff!

the NatSec folks say: I've seen how China operates. A deal is impossible. We have to win, we have to race"

@jeremiecharris & @harris_edouard on the USA's AI dilemma

🧵

Overall, an excellent conversation. Enjoy!

"if technical progress outpaces society's ability to adapt, then the people doing technical work might end up having huge societal consequences"

Here, she argues that "Nonproliferation is the wrong approach to AI misuse" and instead promotes the concept of "adaptation buffers"

x.com/hlntnr/stat...

Jack says that "experimental" models, which are launched with lower rate limits & sometimes limited access, don't always get a full write-up, but reassures us that industry-leading safety testing has been done.

Maybe, but it probably depends on getting models to do more and more of the interpretability work for us.

Jack says that "anything you can train jointly will have deeper understanding"

But for each modality, it depends on "how much positive transfer there is to this new task"

x.com/gfodor/stat...

Was it simply that the same next steps were obvious to all, or are people swapping secrets at SF parties?

@jack_w_rae, who led "Thinking" for Gemini 2.5, shares his perspective

Reasoning Model🧵↓

Guy says:

- "bias towards simplicity – the simpler, the better"

- "the faster you run experiments, the more likely you'll find something good"

- "let the experiments tell you which way to go"

Tons of practical wisdom here. Enjoy!

For one thing, "turn your hyper-parameters up!"

x.com/augmentcode...

Like running an AI lab in general, it's ... "pretty capital intensive"

x.com/augmentcode...

@augmentcode's @guygr & I discussed AI assistance for professional engineers & large-scale codebases.

Ever heard of "Reinforcement Learning from Developer Behaviors"?

Listen to "Code Context is King" now!

👂↓🧵

Here's @SiyuHe7 talking about Squidiff, a model that runs experiments in silico by predicting how single-cell transcriptomes will respond to perturbations.

This can save researchers months!

x.com/SiyuHe7/sta...
