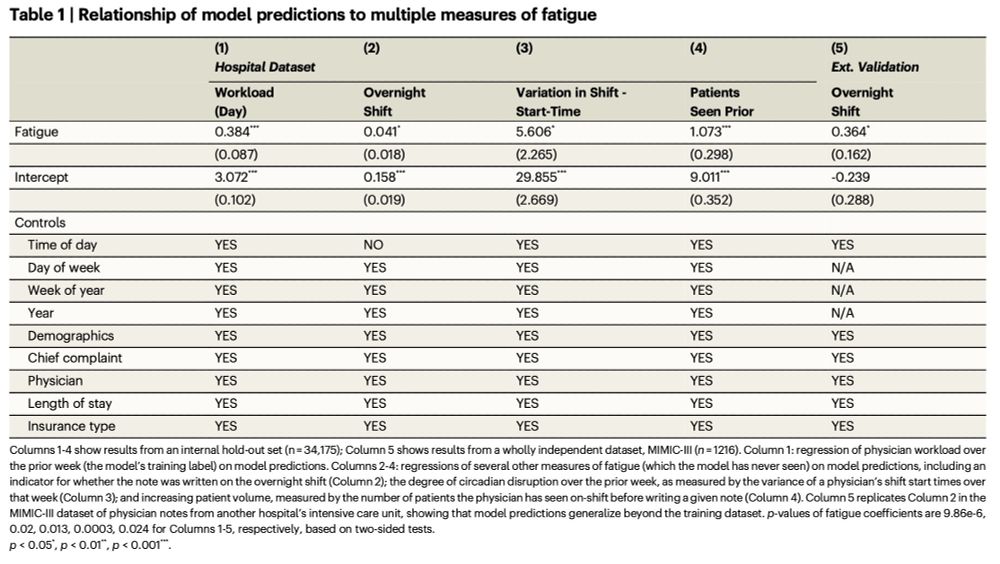

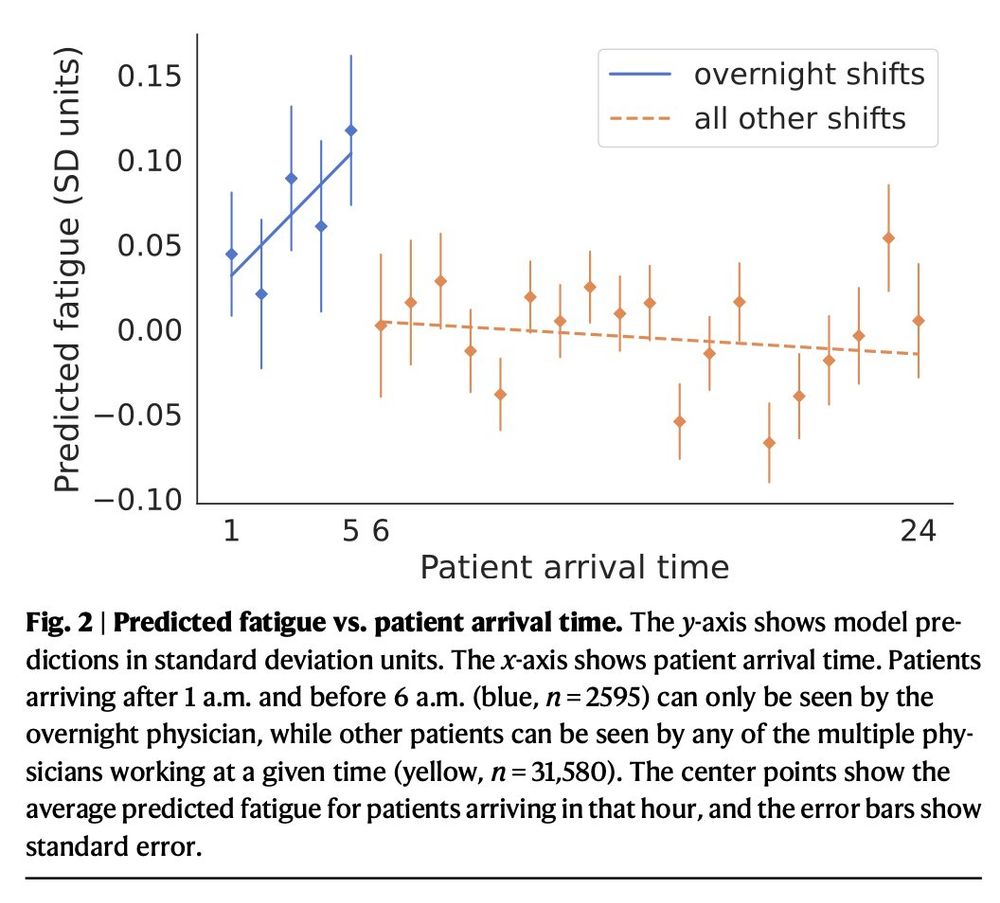

1. Who’s working an overnight shift (in our data + external validation in MIMIC)

2. Who’s working on a disruptive circadian schedule

3. How many patients has the doc seen *on the current shift*

Thank you to my great collaborators @kyle-macmillan.bsky.social, Anup Malani, Hongyuan Mei, and @chenhaotan.bsky.social

- Better evaluation of long-context summarization

- Research on legal language understanding

- Development of more accurate & reliable legal AI tools

Dataset: huggingface.co/datasets/Chi...

- Simple factual errors

- Incorrect legal citations

- Misrepresentation of procedural history

- Mischaracterization of Court's reasoning

Fine-tuned smaller models tend to make more egregious errors than GPT-4.

- Largest legal case summarization dataset

- 200+ years of Supreme Court cases

- "Ground truth" summaries written by Court attorneys and approved by Justices

- Variation in summary styles and compression rates over time

1. A smaller fine-tuned LLM scores well on metrics but has more factual errors.

2. Experts prefer GPT-4 summaries—even over the “ground-truth” syllabuses.

3. ROUGE and similar metrics poorly reflect human preferences.

4. Even LLM-based evaluations still misalign with human judgment.

Paper: arxiv.org/abs/2501.00097

When evaluating LLM-generated and human-written summaries, we find interesting discrepancies between automatic metrics, LLM-based evaluation, and human expert judgments.

Qingcheng Zeng, @chenhaotan.bsky.social, @robvoigt.bsky.social, and Alexander Zentefis!

EMNLP 2024 Workshop on Narrative Understanding. If you are in Miami, the presentation will be at 3:30pm!

Paper: aclanthology.org/2024.wnu-1.12/

Dataset (soon): mheddaya.com/research/nar...

Our error analysis shows some mistakes arise from genuine interpretative ambiguity. Check out the last three examples here:

We find that smaller fine-tuned LLMs outperform larger models like GPT-4o, while also offering better scalability and cost efficiency. But they also err differently.

As an application, we propose an ontology for inflation's causes/effects and create a large-scale dataset classifying sentences from U.S. news articles.

In our work, we address both the conceptual and technical challenges.

📄 aclanthology.org/2024.wnu-1.12/
