Skip the messy configs: connect MCP servers to LM Studio in one click.

🛠️ Build agents easily & securely with Docker.

🔗 docs.docker.com/ai/mcp-catal...

#DockerAI #MCP #DevTools #LMStudio

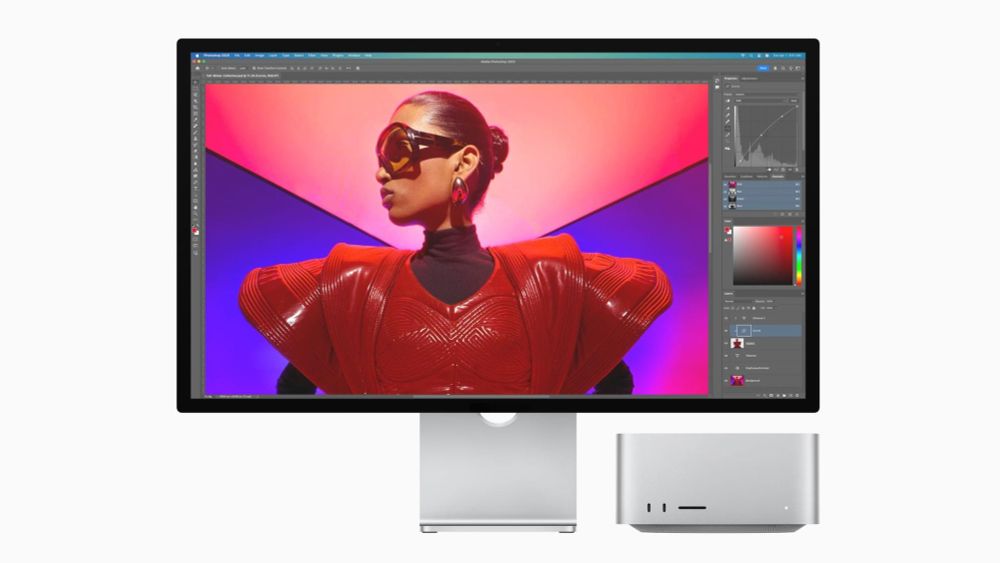

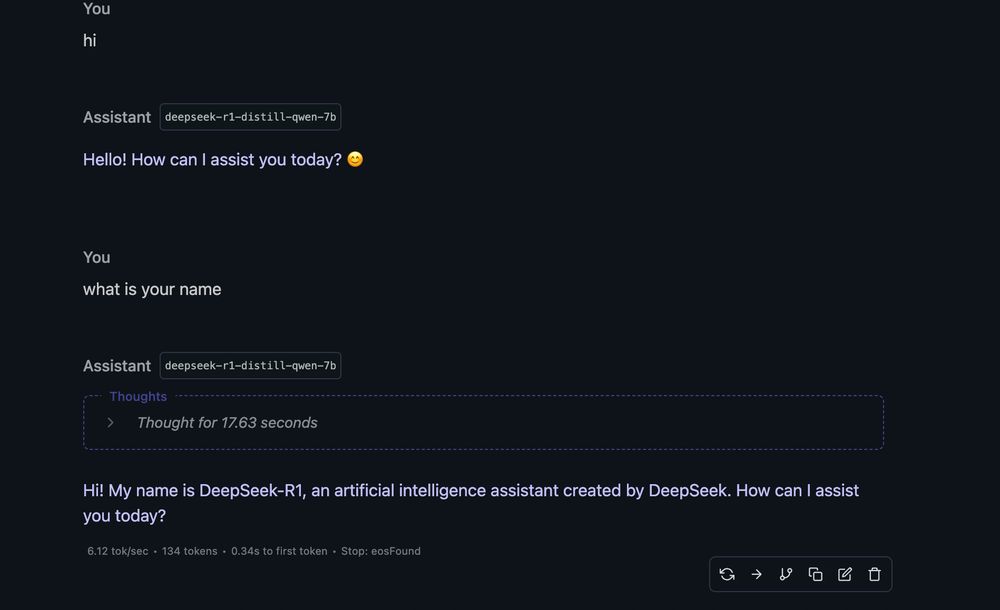

"Up to 16.9x faster token generation using an LLM with hundreds of billions of parameters in LM Studio when compared to Mac Studio with M1 Ultra"

😳

www.macstories.net/news/apple-r...

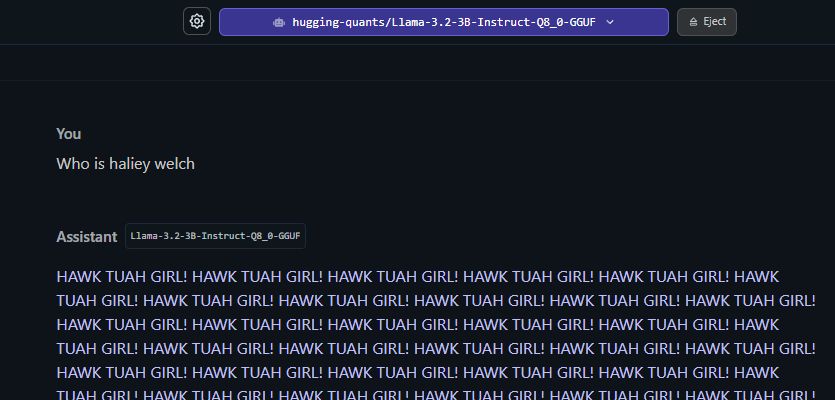

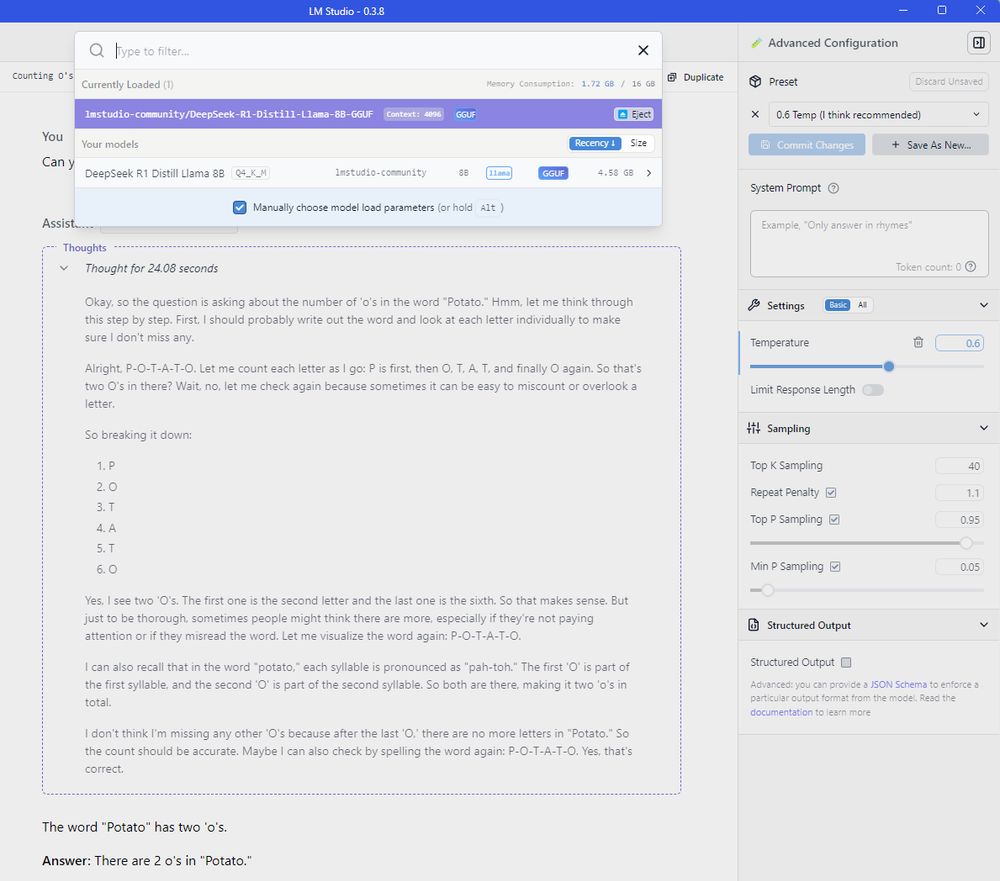

LM Studio is very beginner-friendly.

🥔⚖️⭕⭕

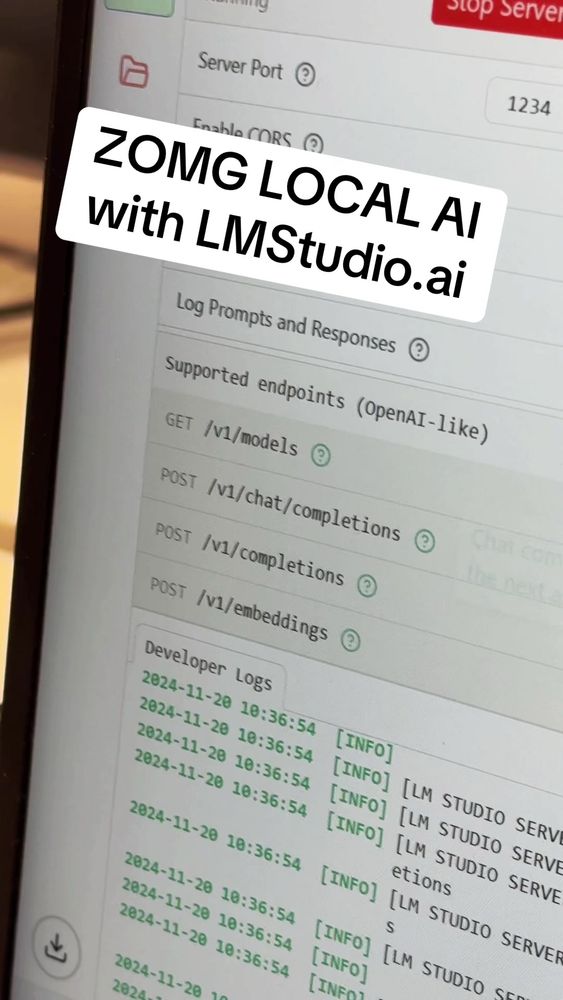

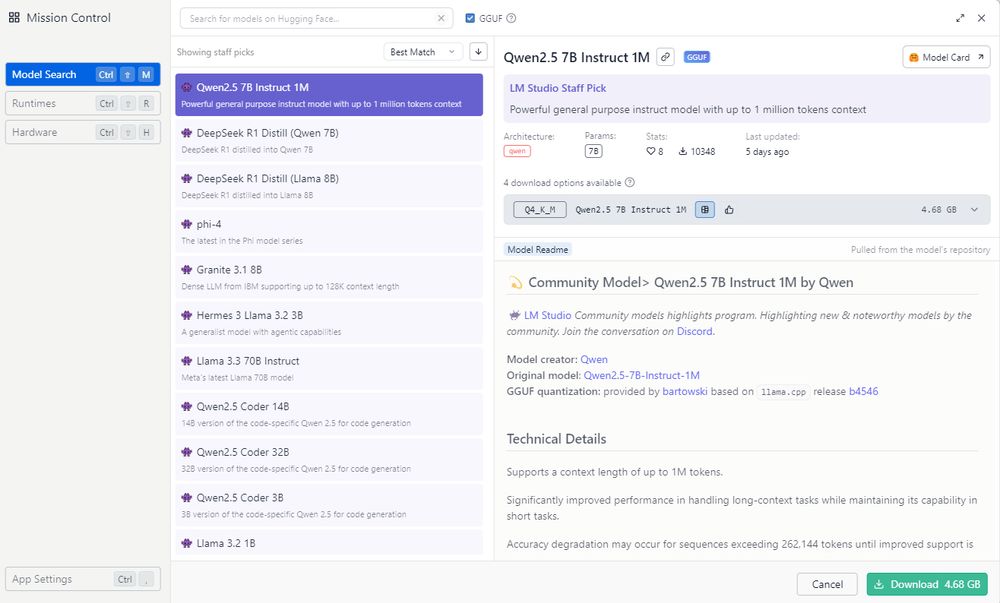

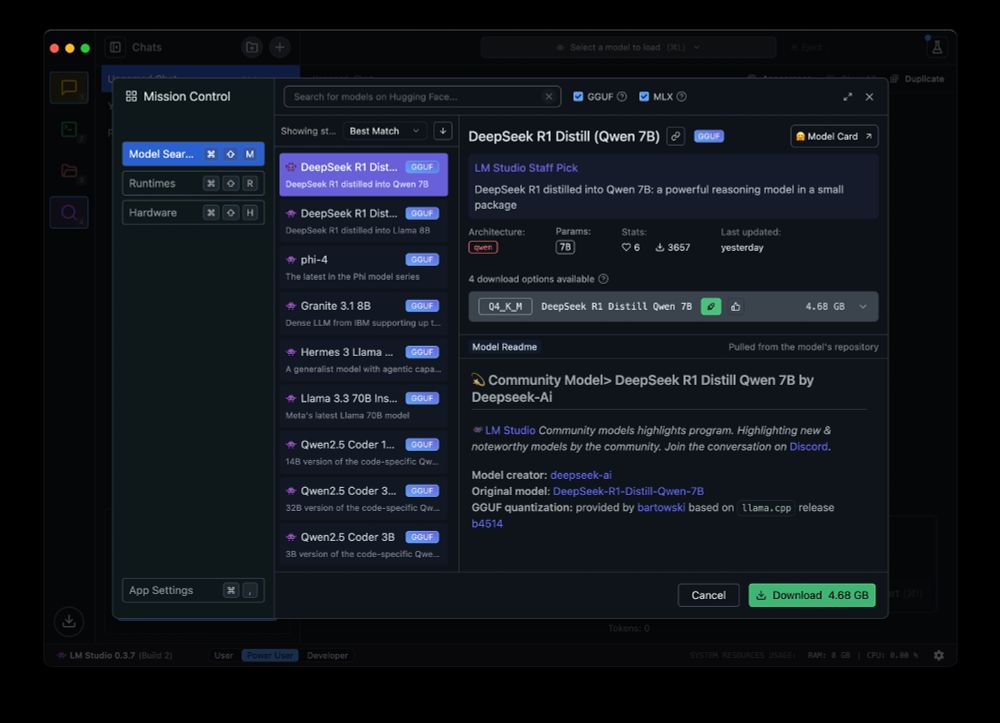

It's very simple: just download LM Studio, search for the model, download it (5 GB), and run it.

You've got a cutting-edge AI running 100% offline on your own computer.

Surreal.

Zed's assistant can now be configured to run models from @lmstudio.

1. Install LM Studio

2. Download models in the app

3. Run the server via `lms server start`

4. Configure LM Studio in Zed's assistant configuration panel

5. Pick your model in Zed's assistant
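
Once the server from step 3 is running, other clients can talk to it as well. A minimal sketch (not Zed's own code) of one chat request, assuming LM Studio's default address `http://localhost:1234` and its OpenAI-compatible `/v1/chat/completions` endpoint; the model identifier is a placeholder for a model you have downloaded.

```python
import json
import urllib.request

# Assumption: LM Studio's server listens on its default port 1234 and
# exposes OpenAI-compatible endpoints under /v1.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat completion."""
    body = json.dumps({
        "model": model,  # placeholder: an identifier reported by GET /v1/models
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the request to the local server and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt)) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With the server up, `ask("your-model-id", "Say hello in one sentence.")` returns the reply as a string; `http://localhost:1234/v1/models` lists the identifiers the server currently accepts.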

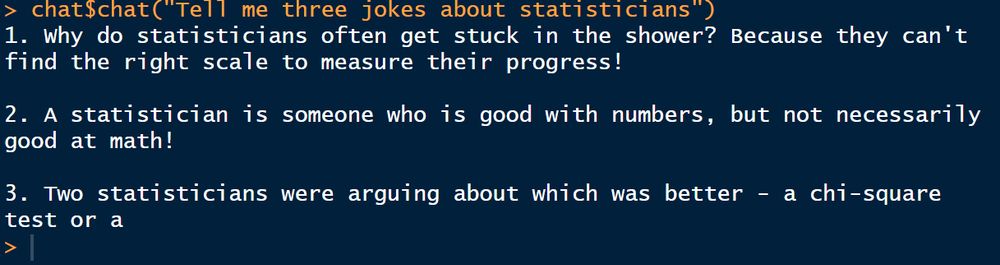

👉This guy!👈

(I did this via @lmstudio-ai.bsky.social using @hadley.nz's 'ellmer' package. Happy to share how I did it if people are interested.)

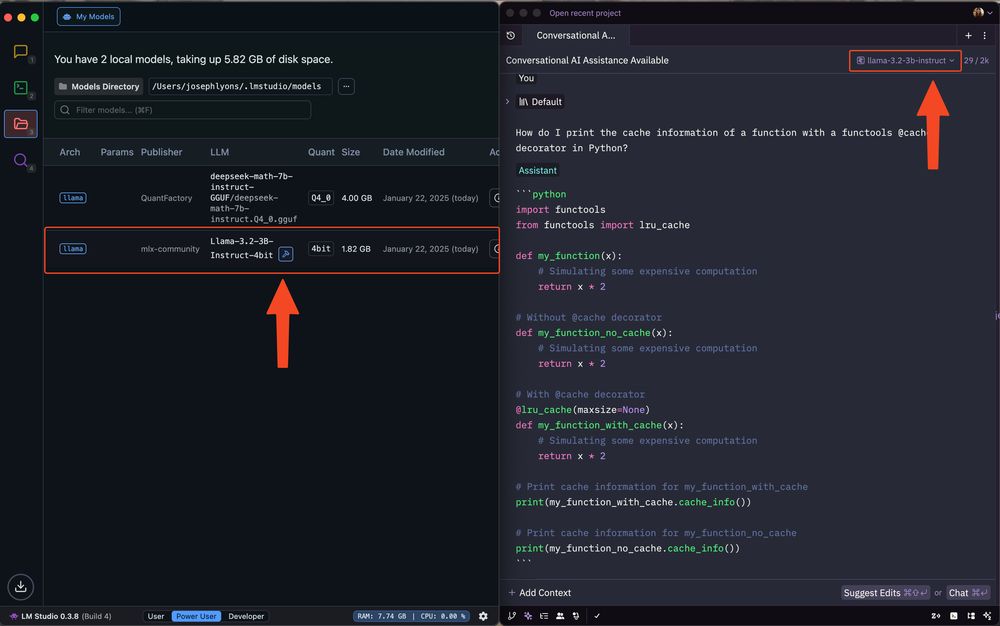

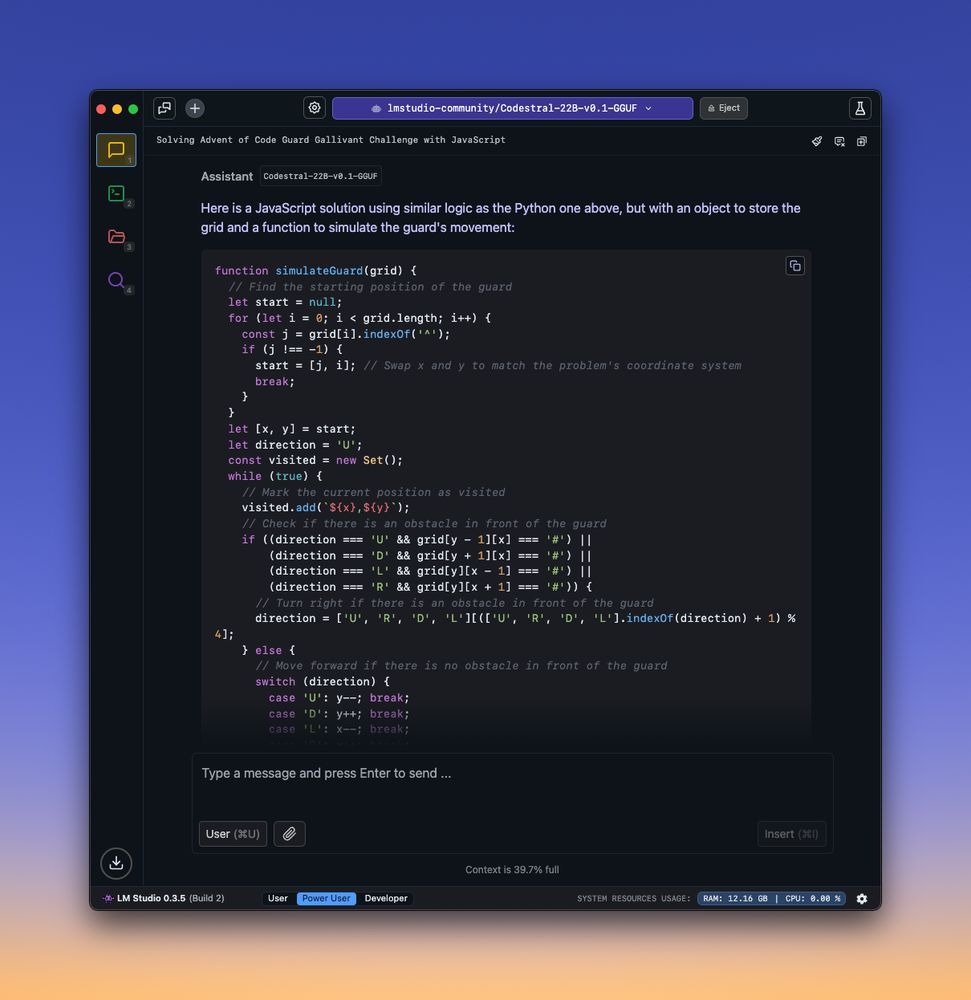

LM Studio appears to be the best option right now. Its support for MLX-based models means I can run Llama 3.1 8B with the full 128k context window on my M3 Max MacBook Pro with 36 GB of unified memory.

Great for document chat, Slack summaries, and more.

What is everyone else doing?
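
A back-of-the-envelope estimate shows why a 128k context fits in 36 GB. A minimal sketch, assuming Llama 3.1 8B's published shape (32 layers, 8 key/value heads, head dimension 128), an unquantized 16-bit KV cache, and roughly 4-bit weights for about 8 billion parameters; real runtimes, MLX included, may quantize the cache or add overhead, so treat these as estimates.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   n_tokens: int, bytes_per_elem: int = 2) -> int:
    """KV cache size: one K and one V vector per layer, per KV head, per token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * n_tokens


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Approximate quantized weight size (ignores metadata and mixed-precision layers)."""
    return n_params * bits_per_weight / 8


# Assumed Llama 3.1 8B shape; a 128k context is 131,072 tokens.
kv = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, n_tokens=131_072)
weights = weight_bytes(n_params=8.0e9, bits_per_weight=4)  # assumption: ~4-bit weights

print(f"KV cache: {kv / GIB:.1f} GiB")               # KV cache: 16.0 GiB
print(f"Weights:  {weights / GIB:.1f} GiB")          # Weights:  3.7 GiB
print(f"Total:    {(kv + weights) / GIB:.1f} GiB")   # Total:    19.7 GiB
```

Under these assumptions the cache, not the weights, dominates at full context, and the total still leaves headroom on a 36 GB machine.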

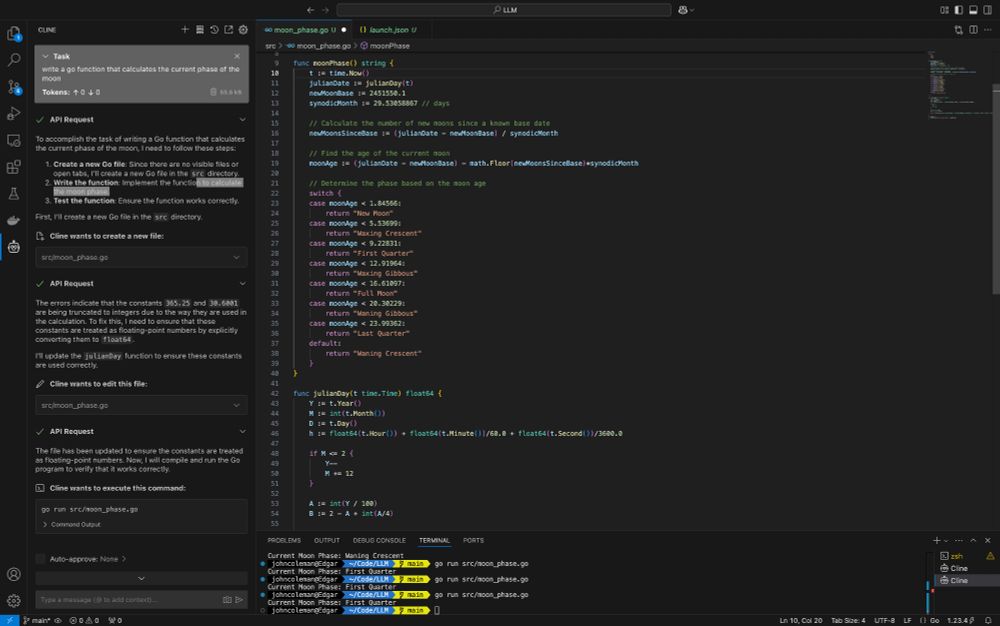

The code from my programming prompt compiled and ran on the second attempt after the model self-corrected, and all tests pass. Code generation took less than a minute.

😳 @lmstudio-ai.bsky.social @vscode.dev

Extra points if you can name who's in the GIF 😂

martinctc.github.io/blog/summari...

But Qwen2.5-14B-Instruct-IQ4_XS *slaps*. It's no Claude, but I'm amazed how good it is.

(Also, shout-out to @lmstudio-ai.bsky.social: what a super smooth experience.)

Are you using OpenAI for Tool Use?

Want to do the same with Qwen, Llama, or Mistral locally?

Try out the new Tool Use beta!

Sign up to get the builds here: forms.gle/FBgAH43GRaR2...

Docs: lmstudio.ai/docs/advance... (requires the beta build to work)
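
If the beta mirrors OpenAI's tool-use flow, as the post suggests, a request lists the available tools and the model may reply with `tool_calls` instead of text. A minimal sketch under assumptions: the server runs at LM Studio's default `http://localhost:1234/v1`, it accepts the OpenAI-style `tools` schema (check the linked docs for the beta's exact requirements), and `get_weather` is a hypothetical tool.

```python
import json
import urllib.request
from typing import Any

# Assumption: LM Studio's OpenAI-compatible server on its default port.
BASE_URL = "http://localhost:1234/v1"

# Hypothetical tool, described in the OpenAI-style "tools" schema.
WEATHER_TOOL: dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_tool_payload(model: str, prompt: str, tools: list[dict[str, Any]]) -> dict[str, Any]:
    """Chat completion body that offers the model a list of callable tools."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": tools,
    }


def post_json(path: str, payload: dict[str, Any], base_url: str = BASE_URL) -> dict[str, Any]:
    """POST a JSON payload to the local server and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{base_url}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def extract_tool_calls(response: dict[str, Any]) -> list[tuple[str, dict[str, Any]]]:
    """Return (function name, parsed arguments) for each tool call in a response.

    OpenAI-style responses encode each call's arguments as a JSON string.
    """
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    return [
        (call["function"]["name"], json.loads(call["function"]["arguments"]))
        for call in calls
    ]
```

A round trip would be `extract_tool_calls(post_json("/chat/completions", build_tool_payload("your-model-id", "Weather in Oslo?", [WEATHER_TOOL])))`; your code then runs each call and sends the results back as `role: "tool"` messages in a follow-up request.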