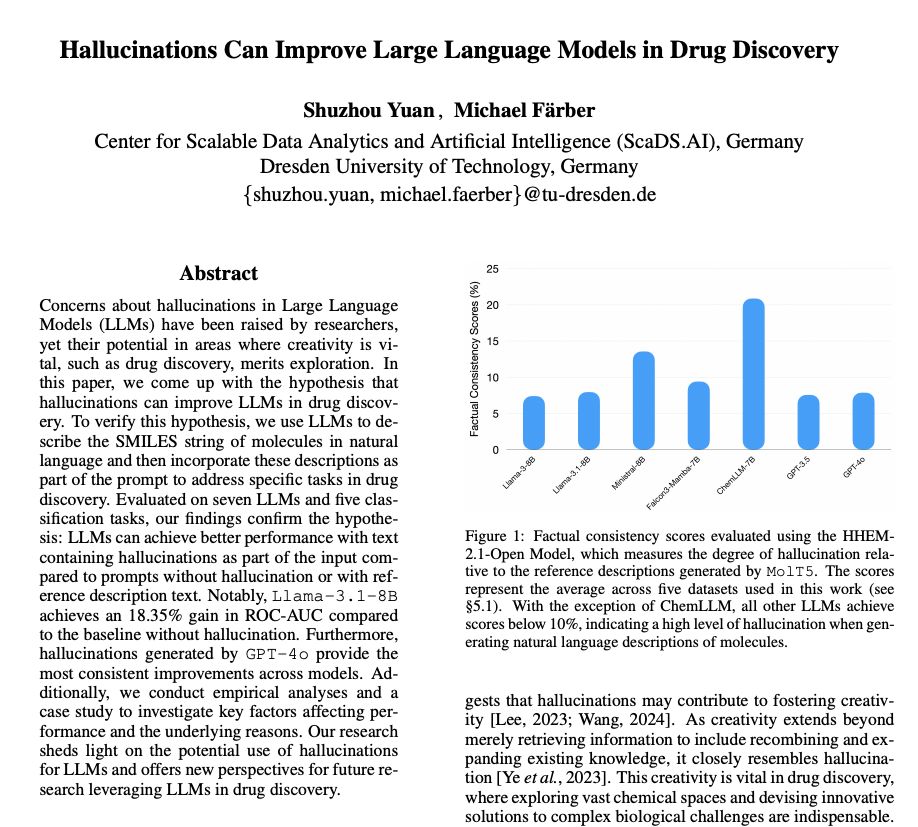

Folks like Karpathy have suggested that hallucination is an LLM's greatest feature.

Is there any evidence for the latter?

2025 is a huge year for AI Agents.

Here's what's included:

- Introduction to AI Agents

- The role of tools in Agents

- Enhancing model performance

- Quick start to Agents with LangChain

- Production applications with Vertex AI Agents

Provides a survey of time-series anomaly detection solutions.

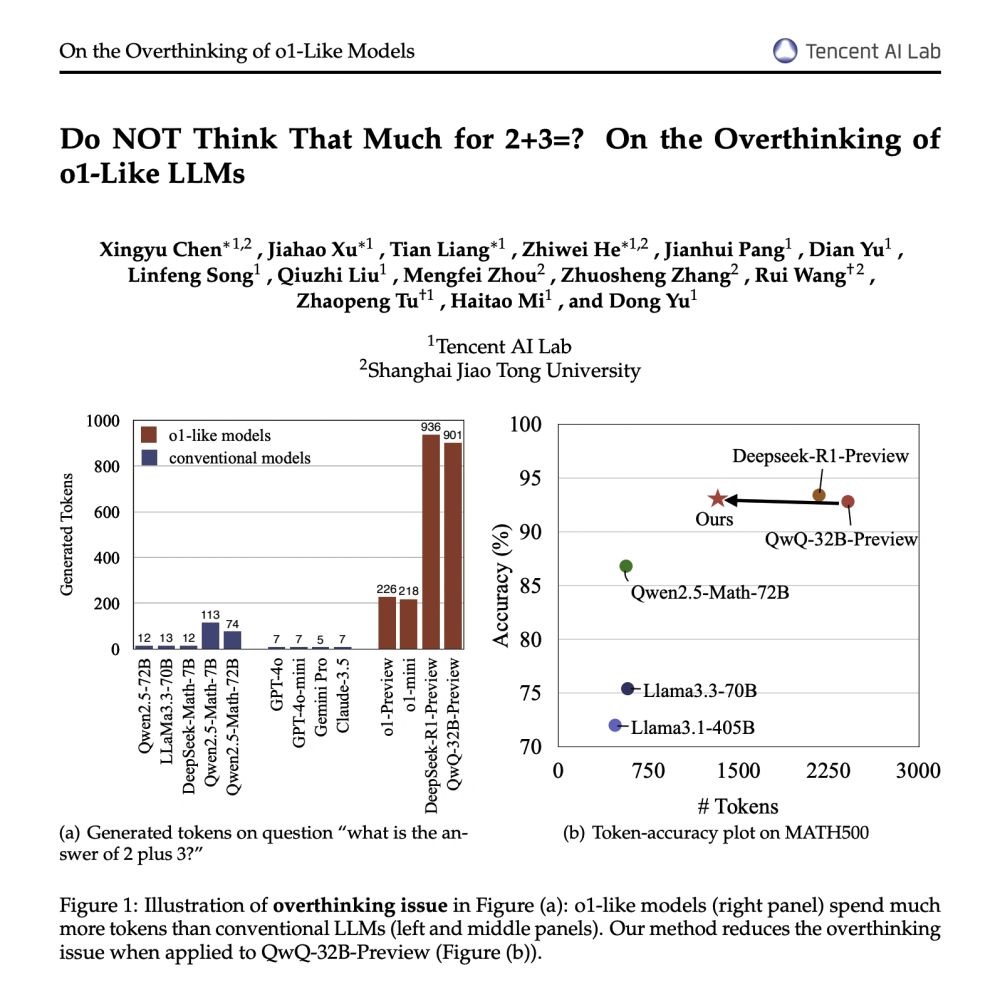

This approach can reduce token output by 48.6% while maintaining accuracy on the widely used MATH500 test set when applied to QwQ-32B-Preview.

There are three parts to this study:

- analysis of the overthinking issue

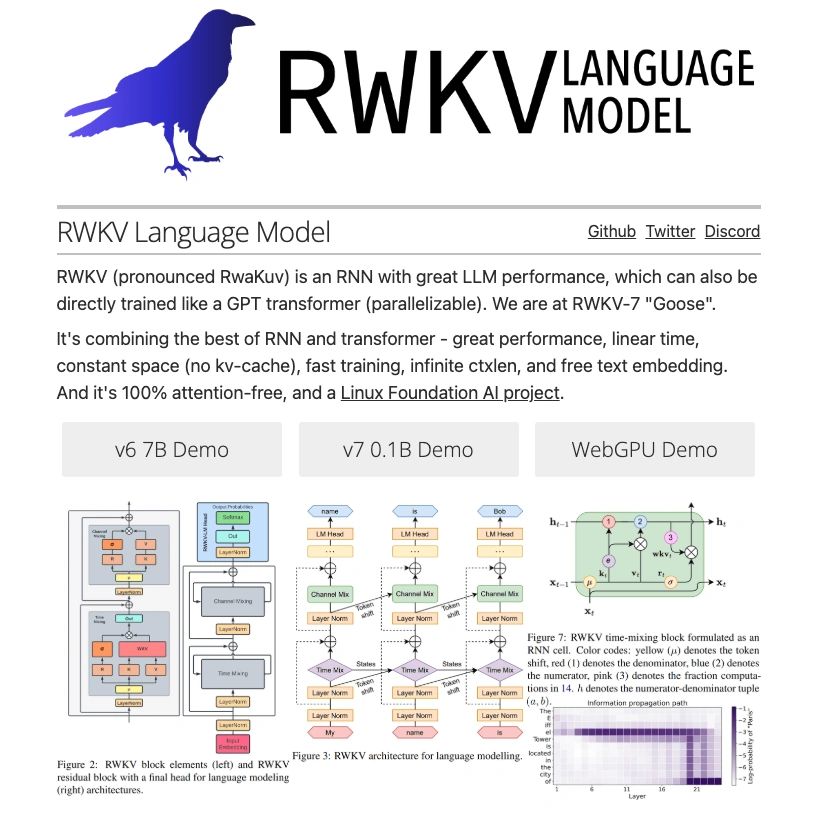

RWKV combines the best of RNNs and transformers.

There is code for training your own model, fine-tuning, GUI, API, fast WebGPU inference, and more.

Cool project!

Features include (from the repo):

• 🤖 Advanced Agent Management: Create and orchestrate multiple AI agents with different roles and capabilities

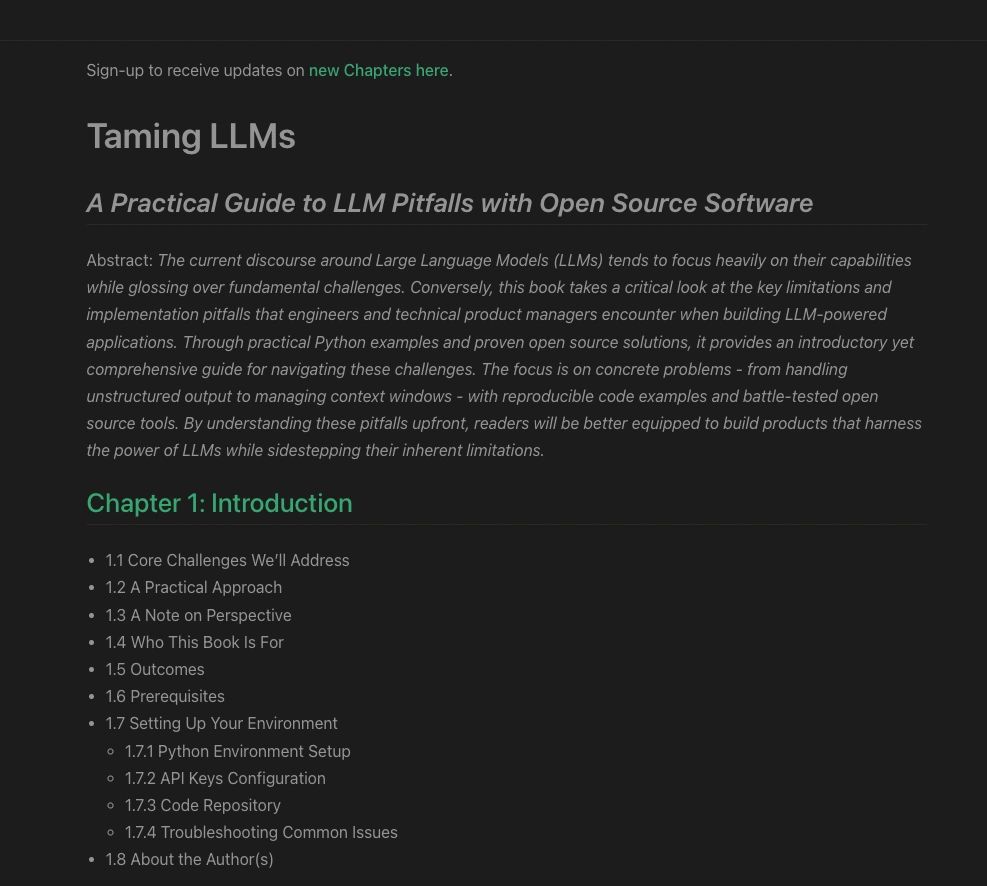

A few chapters are already available, including structured output and evals. More to come soon!

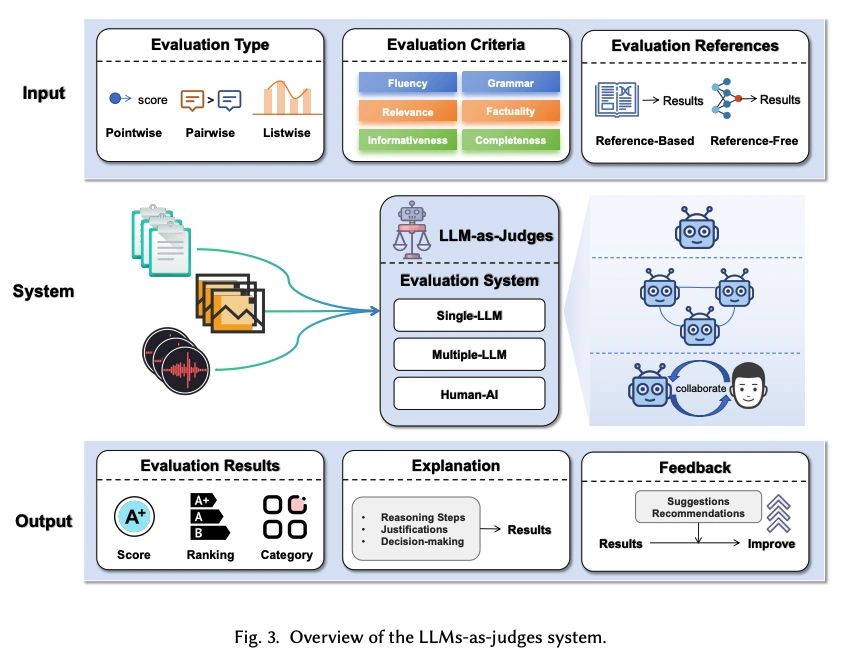

Presents a comprehensive survey of the LLMs-as-judges paradigm from five key perspectives: Functionality, Methodology, Applications, Meta-evaluation, and Limitations.

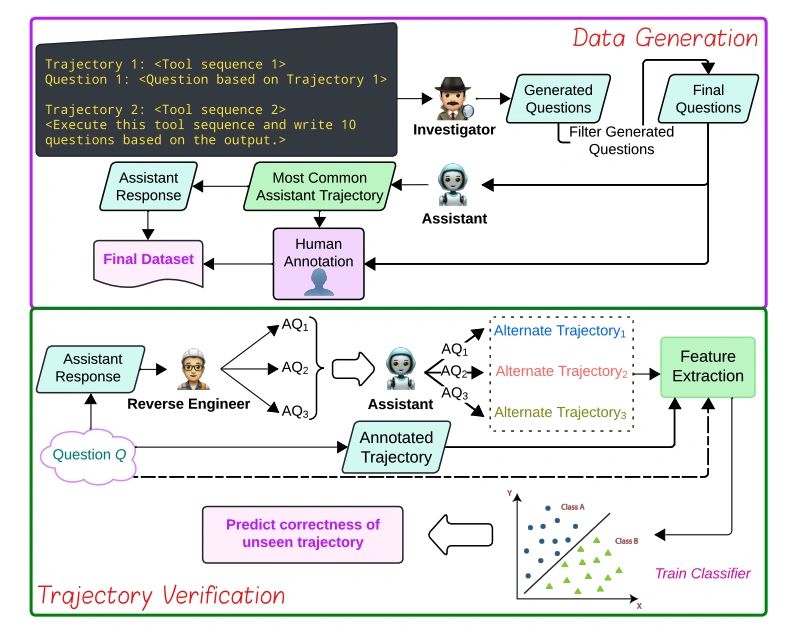

Presents MAG-V, a multi-agent framework that first generates a dataset of questions that mimic customer queries. It then reverse-engineers alternate questions from responses to verify agent trajectories.

arxiv.org/abs/2412.04494

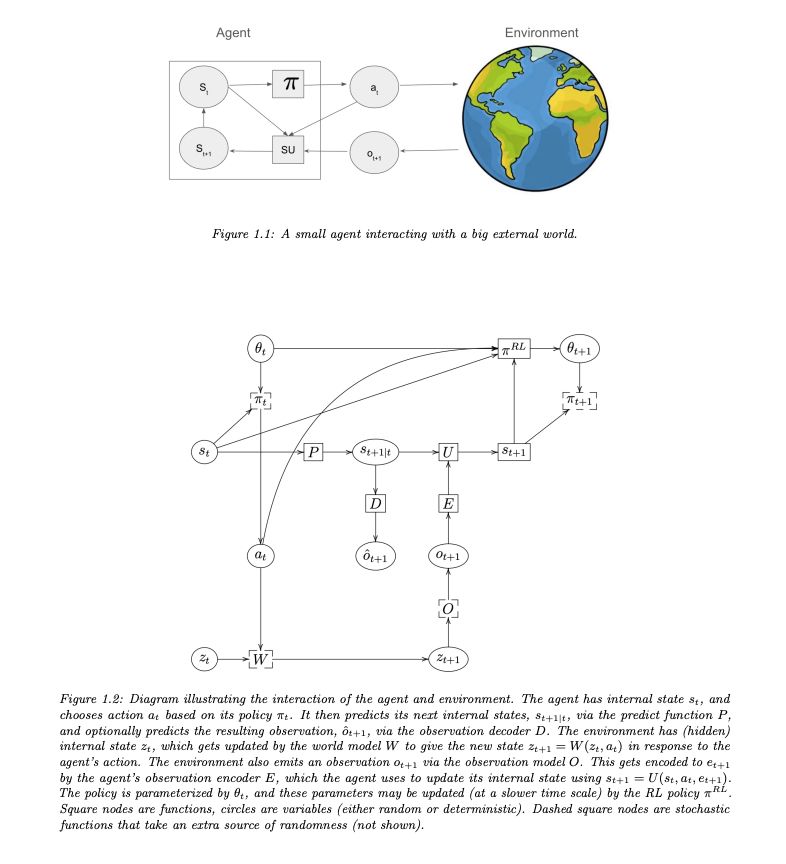

This gem just dropped on arXiv.

An up-to-date overview of reinforcement learning and sequential decision-making.

arxiv.org/abs/2412.05265

Extends the rStar reasoning framework to enhance reasoning accuracy and factual reliability of LLMs.

Introduces DataLab, a unified BI platform that integrates an LLM-based agent framework with an augmented computational notebook interface.

Great survey on LLM-brained GUI agents, including techniques and applications.

https://arxiv.org/abs/2411.18279

It achieves state-of-the-art accuracy (79.6%) on the MATH benchmark with Qwen2.5-7B-Instruct, surpassing GPT-4o (76.6%) and Claude 3.5 (71.1%).

Scientific discovery is the next big goal for AI. We are seeing a huge number of research studies tackling AI-powered scientific discovery from different angles and for different problems.

Shows that combining simple distillation from O1's API with supervised fine-tuning significantly boosts performance on complex math reasoning tasks.

This is probably one of the more important open-source efforts in post-training of LLMs.

Proposes that LLM-based chatbots play the ‘language game of bullshit’.

Voyage AI releases voyage-3 and voyage-3-lite embedding models.

Proposes StructGPT to improve the zero-shot reasoning ability of LLMs over structured data. Effective for solving question answering tasks based on structured data.

paper: arxiv.org/abs/2305.09645
