@GaelVaroquaux: neural networks, tabular data, uncertainty, active learning, atomistic ML, learning theory.

https://dholzmueller.github.io

🥈2nd place used stacking with diverse models

🥇1st place found a larger dataset
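Stacking, as used by the 2nd-place solution, trains a meta-model on out-of-fold predictions from several base models. Below is a minimal, stdlib-only sketch of the general technique. It does not reproduce the competitor's actual pipeline. The three toy base models (`fit_mean`, `fit_linear`, `fit_1nn`), the index-mod-k folds, and the ridge-regularised linear meta-model are all hypothetical illustration choices.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface: a "fitter" takes training data and returns a predictor.
Predictor = Callable[[float], float]
Fitter = Callable[[Sequence[float], Sequence[float]], Predictor]


def fit_mean(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Toy base model: always predict the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m


def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Toy base model: ordinary least-squares line y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx > 0 else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def fit_1nn(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Toy base model: 1-nearest-neighbour regression."""
    pts = list(zip(xs, ys))
    return lambda x: min(pts, key=lambda p: abs(p[0] - x))[1]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def stack(xs: Sequence[float], ys: Sequence[float], fitters: List[Fitter],
          k: int = 5, ridge: float = 1e-8) -> Tuple[List[float], Predictor]:
    """Fit a linear meta-model on out-of-fold predictions of diverse base models."""
    n, p = len(xs), len(fitters)
    oof = [[0.0] * p for _ in range(n)]
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        held = [i for i in range(n) if i % k == fold]
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        for j, fit in enumerate(fitters):
            f = fit(tx, ty)
            for i in held:
                oof[i][j] = f(xs[i])  # prediction on data the model never saw
    # Meta-model: ridge-regularised least squares on the OOF prediction matrix.
    ata = [[sum(oof[i][r] * oof[i][c] for i in range(n)) + (ridge if r == c else 0.0)
            for c in range(p)] for r in range(p)]
    aty = [sum(oof[i][r] * ys[i] for i in range(n)) for r in range(p)]
    weights = solve(ata, aty)
    # Refit every base model on all data and combine with the learned weights.
    full = [fit(xs, ys) for fit in fitters]
    return weights, lambda x: sum(w * f(x) for w, f in zip(weights, full))


xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]
weights, predict = stack(xs, ys, [fit_mean, fit_linear, fit_1nn])
print(weights, predict(4.5))
```

On this noiseless linear toy data, the meta-model should put almost all of its weight on the linear base model. Fitting the meta-model on out-of-fold rather than in-sample predictions keeps it from simply trusting whichever base model overfits most (here, 1-NN would look perfect in-sample).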

Hall 3 + Hall 2B #32, 10am Singapore time

Paper: arxiv.org/abs/2408.01536

www.kaggle.com/competitions...

Link to the repo: github.com/dholzmueller...

PS: The newest pytabkit version now includes multiquantile regression for RealMLP and a few other improvements.
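Multiquantile regression predicts several quantiles of the target at once. A common training objective for this is the pinball (quantile) loss, averaged over the requested quantile levels. The sketch below assumes that objective. It is not pytabkit's actual code, and the helper names (`pinball_loss`, `multiquantile_loss`, `best_constant_quantiles`) are hypothetical. The last helper checks numerically that the best constant under the pinball loss for level τ is an empirical τ-quantile.

```python
from typing import List, Sequence


def pinball_loss(y: float, q: float, tau: float) -> float:
    """Pinball loss for quantile level tau: tau*r if r >= 0 else (tau-1)*r, with r = y - q."""
    r = y - q
    return max(tau * r, (tau - 1.0) * r)


def multiquantile_loss(y_true: Sequence[float], q_pred: Sequence[Sequence[float]],
                       taus: Sequence[float]) -> float:
    """Mean pinball loss over samples and quantile levels.

    q_pred[i][j] is the predicted taus[j]-quantile for sample i.
    """
    total = 0.0
    for y, qs in zip(y_true, q_pred):
        for q, tau in zip(qs, taus):
            total += pinball_loss(y, q, tau)
    return total / (len(y_true) * len(taus))


def best_constant_quantiles(y_true: Sequence[float], taus: Sequence[float],
                            candidates: Sequence[float]) -> List[float]:
    """Brute force: for each tau, the candidate constant with the lowest summed pinball loss."""
    return [min(candidates, key=lambda c: sum(pinball_loss(y, c, tau) for y in y_true))
            for tau in taus]


ys = [float(v) for v in range(1, 10)]  # 1, 2, ..., 9
print(best_constant_quantiles(ys, [0.1, 0.5, 0.9], ys))
```

Because under-prediction costs τ per unit and over-prediction costs (1 − τ), low levels like τ = 0.1 are pushed toward the bottom of the data and high levels toward the top. This asymmetry is why a single network with one output per level can learn a whole set of quantiles from one combined loss.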

bsky.app/profile/dhol...

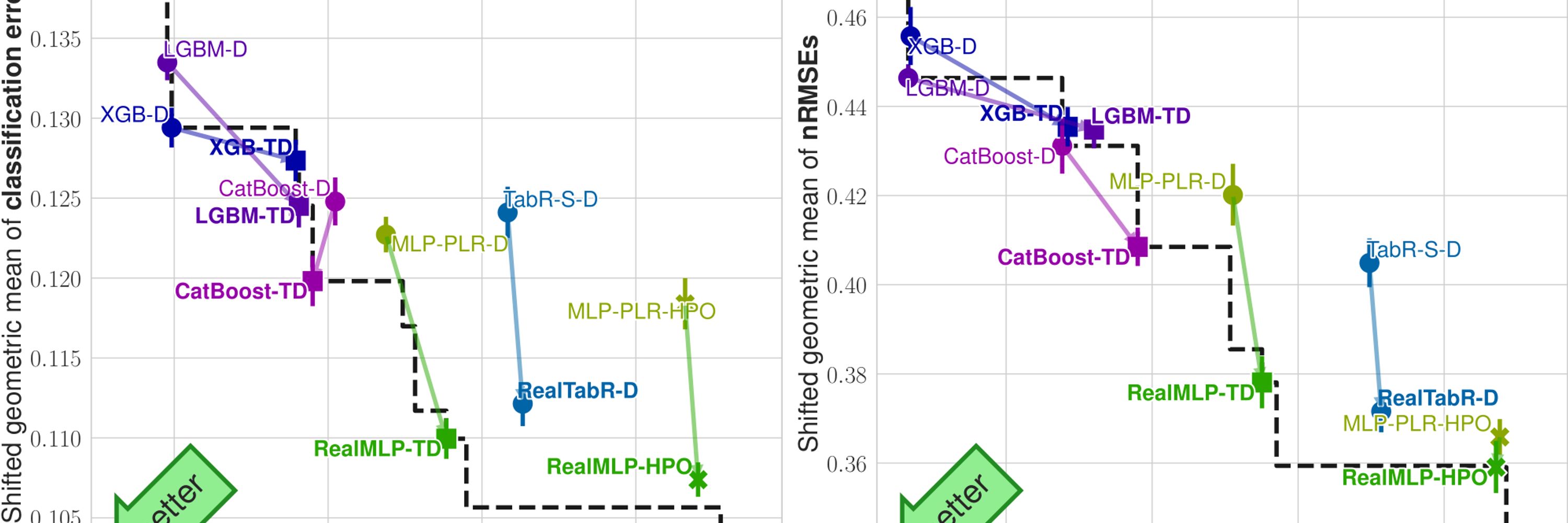

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

projecteuclid.org/journals/ann...