And possible applications of the filter mechanism, such as a zero-shot "lie detector" that can flag incorrect statements in ordinary text.

If we pick up the representation for a question in French, it will accurately match items expressed in the Thai language.

OK, here it is fixed. Nice thing about workbench is that it just takes a second to edit the prompt, and you can see how the LLM responds, now deciding very early it should be ':'

Instead it first "thinks" about the (English) word "love".

In other words: LLMs translate using *concepts*, not tokens.

The workbench doesn't just show you the model's output. It shows the grid of internal states that lead to the output. Researchers call this visualization the "logit lens".
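
As a rough illustration of the logit lens idea, here is a toy sketch: take the hidden state at each layer, project it through the unembedding matrix, and read off the top token. Everything in it (the three-word vocabulary, the `UNEMBED` matrix, the hidden-state values) is made up for illustration and is not taken from the workbench or any real model. Real implementations typically also apply the model's final normalization before unembedding, which this sketch skips.

```python
# Toy logit-lens sketch. All numbers and names are hypothetical.

VOCAB = ["amour", "love", ":"]

# Hypothetical unembedding matrix: one row of weights per vocabulary token.
UNEMBED = [
    [1.0, 0.0],    # "amour"
    [0.0, 1.0],    # "love"
    [-1.0, -1.0],  # ":"
]


def logit_lens(hidden_states, unembed, vocab):
    """Return the top-scoring token at each layer for one token position."""
    top_tokens = []
    for h in hidden_states:
        # Project the hidden state onto every vocabulary row (dot product).
        logits = [sum(w * x for w, x in zip(row, h)) for row in unembed]
        best = max(range(len(vocab)), key=lambda i: logits[i])
        top_tokens.append(vocab[best])
    return top_tokens


# Hypothetical hidden states at one position, ordered from early to late layers.
layers = [
    [-0.5, -0.2],  # early layer
    [0.1, 0.9],    # middle layer
    [0.8, 0.3],    # late layer
]

decoded = logit_lens(layers, UNEMBED, VOCAB)
print(decoded)
```

Repeating this for every token position gives the layers-by-positions grid that the visualization displays.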

That's easy! (you might think) Because surely it knows: amore, amor, amour are all based on the same Latin word. It can just drop the "e", or add a "u".

We need to be aware when an LM is thinking about tokens or concepts.

They do both, and it makes a difference which way they're thinking.

@keremsahin22.bsky.social + Sheridan are finding cool ways to look into Olah's induction hypothesis too!

Sheridan discovered (NeurIPS mechint 2025) that semantic vector arithmetic works better in this space. (Token semantics work in tokenspace.)

arithmetic.baulab.info/

That happens even for computer code. They copy the BEHAVIOR of the code, but write it in a totally different way!

If the target context is in Chinese, they will copy the concept into Chinese. Or patch them between runs to get Italian. They mediate translation.

Instead of copying tokens, they copy *concepts*.

Yes, the token induction of Elhage and Olsson is there.

But there is *another* route where the copying is done in a different way. It shows up in attention heads that do 2-ahead copying.

bsky.app/profile/sfe...

Induction heads are how transformers copy text: they find earlier tokens in identical contexts. (Elhage 2021, Olsson 2022 arxiv.org/abs/2209.11895)

But when that context "what token came before" is erased, how could induction possibly work?
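
For reference, the token-level behavior attributed to induction heads can be caricatured in a few lines: look back for an earlier occurrence of the current token and copy whatever followed it. This is only a behavioral sketch with a hypothetical helper name (`induction_copy`); it says nothing about how attention heads actually implement the lookup.

```python
def induction_copy(tokens):
    """Predict the next token by copying what followed the most recent
    earlier occurrence of the current (last) token.

    Returns None when the current token has not appeared before.
    """
    if not tokens:
        return None
    current = tokens[-1]
    # Scan backwards over earlier positions, skipping the last token itself.
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == current:
            return tokens[i + 1]
    return None


prediction = induction_copy(["the", "cat", "sat", "on", "the"])
print(prediction)
```

Note that the match depends entirely on knowing which token came before, which is exactly the information the question above says gets erased.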

But in meaningFUL phrases, the LM often ERASES the context!!

Exactly opposite of what we expected.

In footprints.baulab.info (EMNLP) while dissecting the problem of how LMs read badly tokenized words like " n.ort.he.astern", Sheridan found a huge surprise: they do it by _erasing_ contextual information.

I want to draw your attention to a COLM paper by my student @sfeucht.bsky.social that has totally changed the way I think and teach about LLM representations. The work is worth knowing.

And you can meet Sheridan at COLM, Oct 7!

bsky.app/profile/sfe...

• What is GPT-OSS 120B thinking inside?

• What does OLMO-32b learn between all its hundreds of checkpoints?

• Why do Qwen3 layers have such different roles from Llama's?

• How does Foundation-Sec reason about cybersecurity?

This could be relevant to your research...

The picture is not complete, but it's worth reading and contemplating.

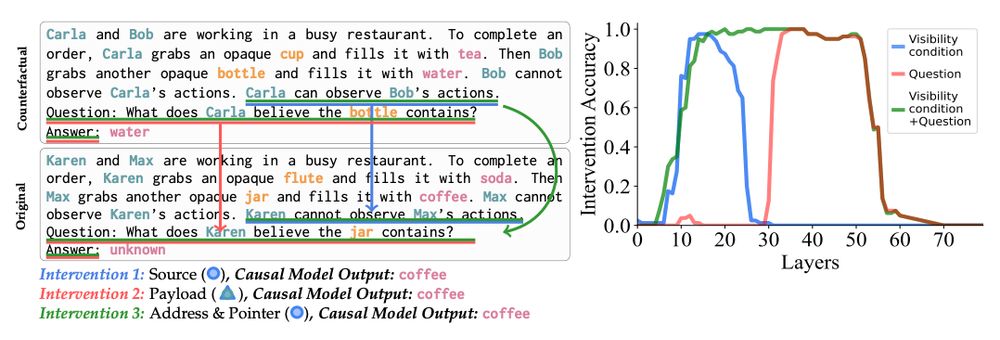

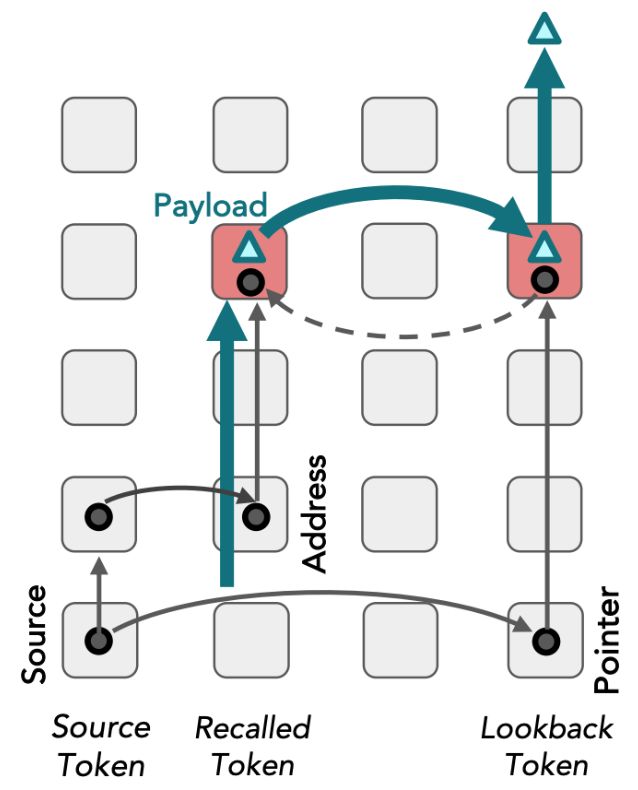

That's because "dereference thing 2" looks for the floating definition of "where is thing 2."

The patch redirects this second indirection!

Dai calls these OI's arxiv.org/abs/2409.05448 and

@fjiahai.bsky.social calls them binding IDs arxiv.org/abs/2310.17191

It is a general "lookback" pattern.

Next surprise is nested...

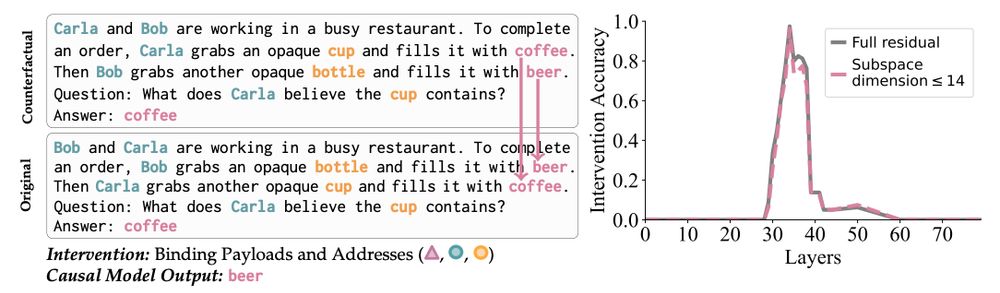

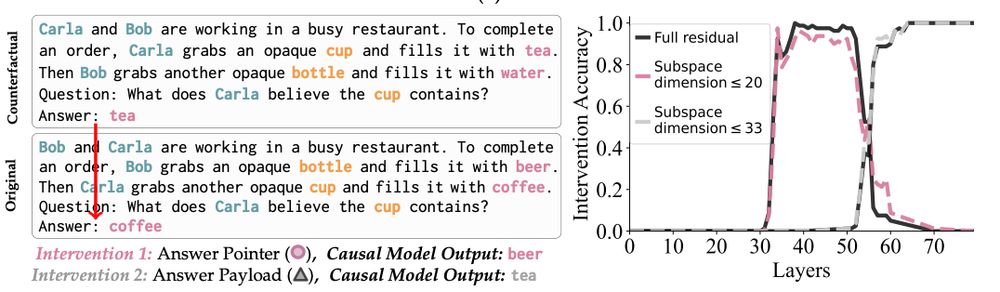

The first thing to understand is his remarkable Fig 2 experiment. Why does the patching of one state, which alters coffee->tea, switch to coffee->beer when you move states deeper than layer 55?

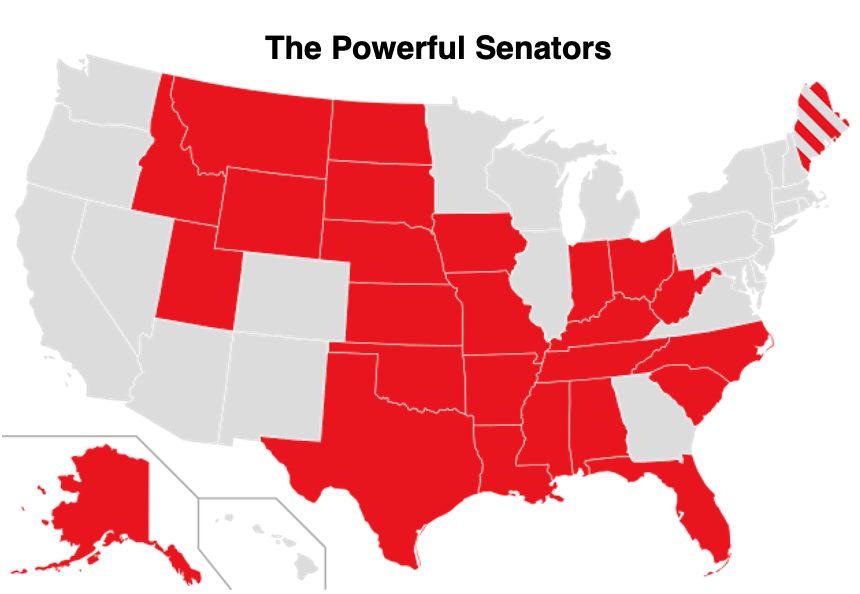

Read here about local impact and how to contact them. They DO listen to voters. They WILL listen to you!

thevisible.net/posts/005-a...

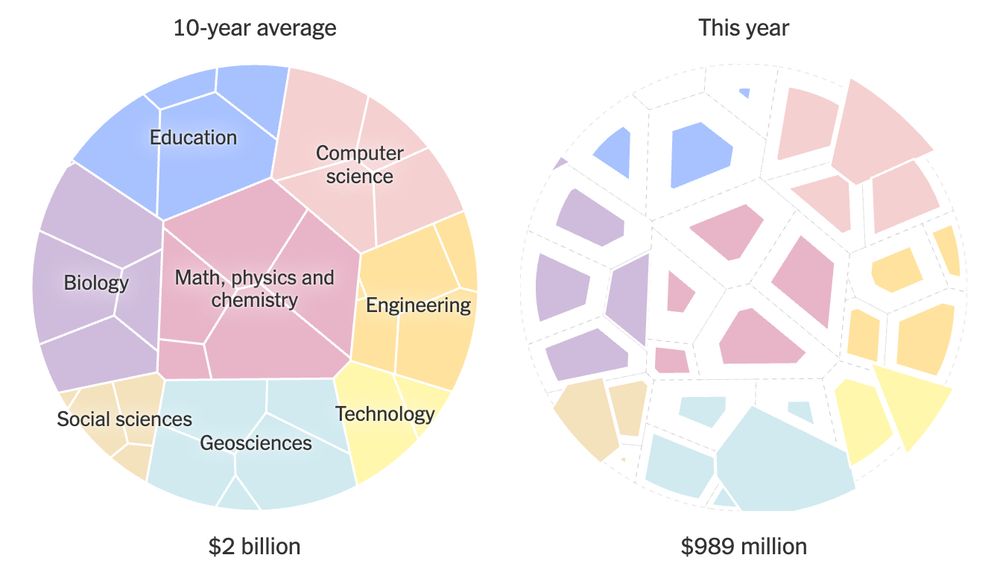

Your help is needed to fix this. The current DC plan PERMANENTLY slashes NSF, NIH, all science training. Money isn't redirected—it's gone.

Please read+share what's happening

thevisible.net/posts/004-s...
