Working on inference at Hugging Face 🤗. Open source ML 🚀.

go.bsky.app/VngRFva

The @hf.co Research team is excited to share their new e-book that covers the full pipeline:

· pre-training,

· post-training,

· infra.

200+ pages of what worked and what didn’t. ⤵️

A little over a year ago, @hf.co acquired XetHub to unlock the next phase of growth in models and datasets. huggingface.co/blog/xethub-...

In April, there were 1,000 Hugging Face repos on Xet. Now every repo (over 6M) on the Hub is on Xet.

huggingface.co/blog/kernel-...

huggingface.co/blog/welcome...

github.com/huggingface/...

github.com/koaning/mkt...

Details in 🧵:

A dream come true: cute and low priced, hackable yet easy to use, powered by open-source and the infinite community.

Read more and order now at huggingface.co/blog/reachy-...

David Holz made a great writeup of how you can use kernels in your projects: huggingface.co/blog/hello-h...

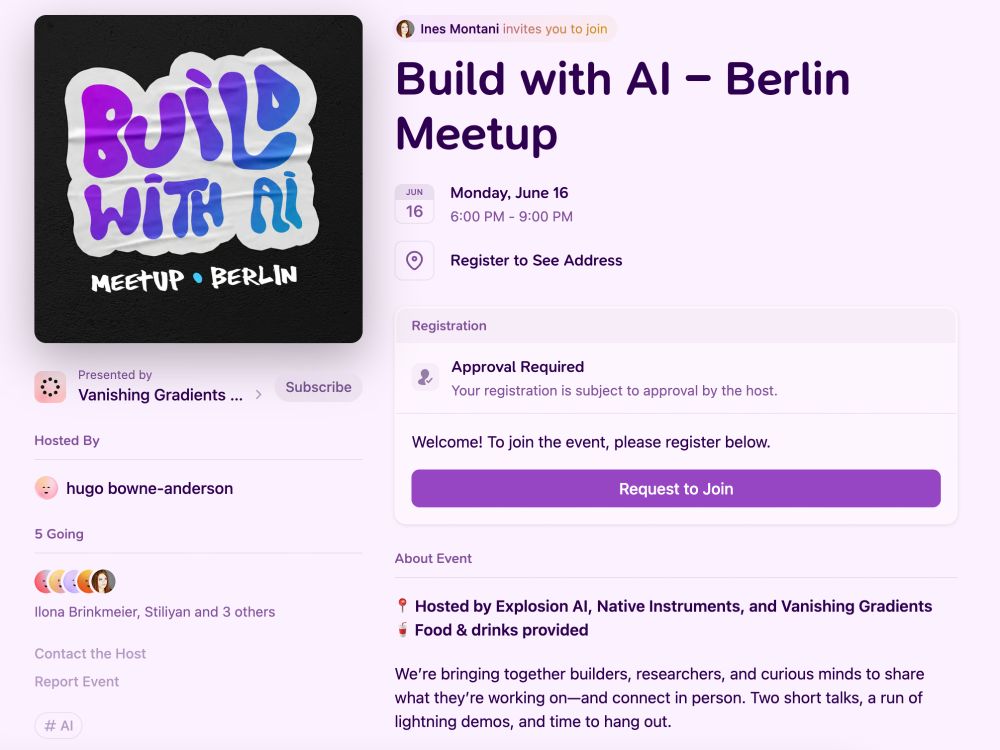

📆 June 16, 18:00

📍 Native Instruments (Kreuzberg)

🎟️ lu.ma/d53y9p2u

github.com/huggingface/...

github.com/huggingface/...

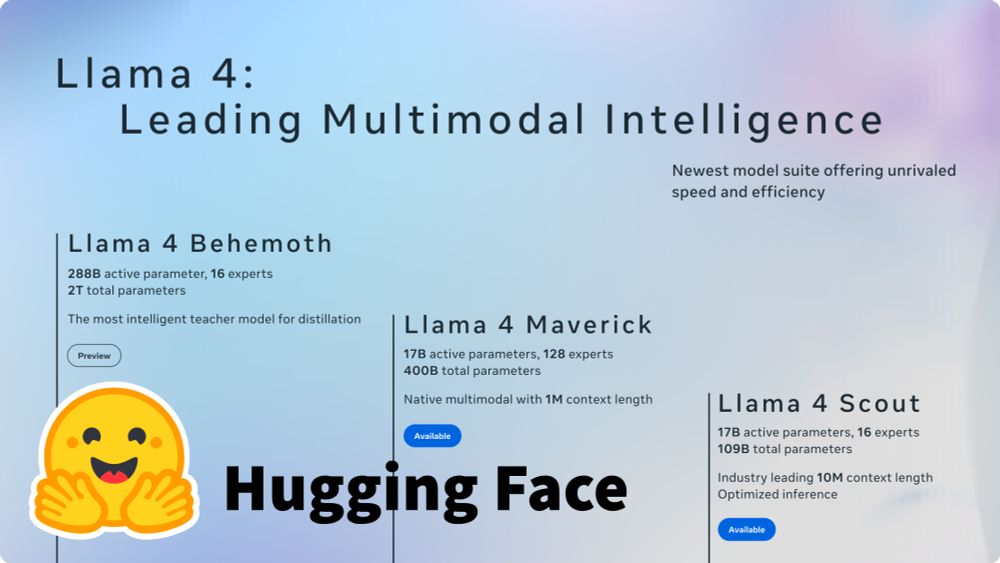

Read the whole post to see more about these models.

You can deploy it directly from endpoints with optimally selected hardware and configurations.

Give it a try 👇

Register here to receive news and early access opportunities throughout the development year: forms.kagi.com?q=orion_linu...

A few months ago, I published a timeline of our work and this is a big step (of many!) to bring our storage to the Hub - more in 🧵👇

We've done a lot of work since joining @hf.co, and I wanted to share a reading guide to see our progress since we got here.

Awesome work by @mohit-sharma.bsky.social and @narsilou.bsky.social!

Text-generation-inference v3.1.0 is out and supports it out of the box.

On both AMD and Nvidia!
