More on the story and use cases here: anishathalye.com/semlib/. (2/)

It works surprisingly well in practice.

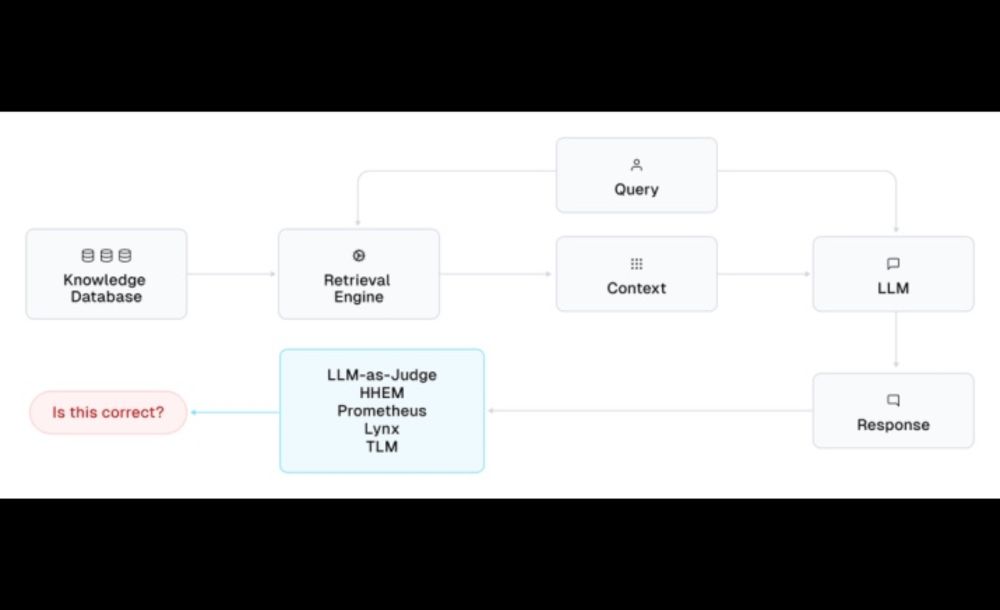

cleanlab.ai/blog/rag-eva...

Hoping to see more of these real-time, reference-free evaluations, which give end users more confidence in the outputs of AI applications.
