After UIUC's blue and @tticconnect.bsky.social blue, I’m delighted to add another shade of blue to my journey at Hopkins @jhucompsci.bsky.social. Super excited!!

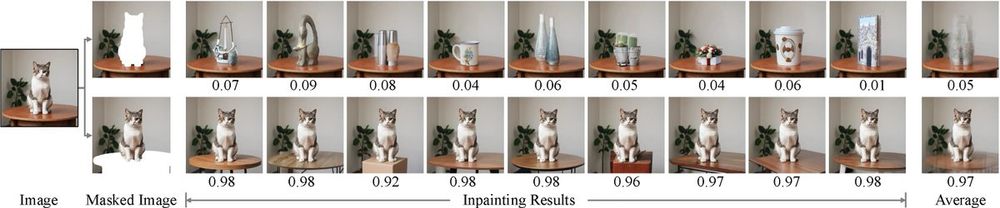

When we remove the top bowl, we get diverse semantics: fruits, plants, and other objects that just happen to fit the shape. As we go down, it becomes less diverse: occasional flowers, new bowls in the middle, & finally just bowls at the bottom.

Search for “cups” → You’ll almost always see a table.

Search for “tables” → You rarely see cups.

So: P(table | cup) ≫ P(cup | table)

We exploit this asymmetry to guide counterfactual inpainting.
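
To make the asymmetry concrete, here is a minimal sketch that estimates both conditionals from co-occurrence counts. The counts are made-up numbers for illustration only (not measurements from the paper), and `conditional` is a hypothetical helper, not part of any released code.

```python
# Toy co-occurrence counts (hypothetical, for illustration only).
images_with_cup = 100      # images that contain a cup
images_with_table = 1000   # images that contain a table
images_with_both = 90      # images that contain both a cup and a table


def conditional(joint_count: int, given_count: int) -> float:
    """Estimate P(A | B) as count(A and B) / count(B)."""
    if given_count <= 0:
        raise ValueError("given_count must be positive")
    return joint_count / given_count


# P(table | cup): of the images with a cup, how many also show a table?
p_table_given_cup = conditional(images_with_both, images_with_cup)
# P(cup | table): of the images with a table, how many also show a cup?
p_cup_given_table = conditional(images_with_both, images_with_table)

print(f"P(table | cup) = {p_table_given_cup:.2f}")
print(f"P(cup | table) = {p_cup_given_table:.2f}")
```

With these toy numbers a cup strongly implies a table, while a table only weakly implies a cup.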

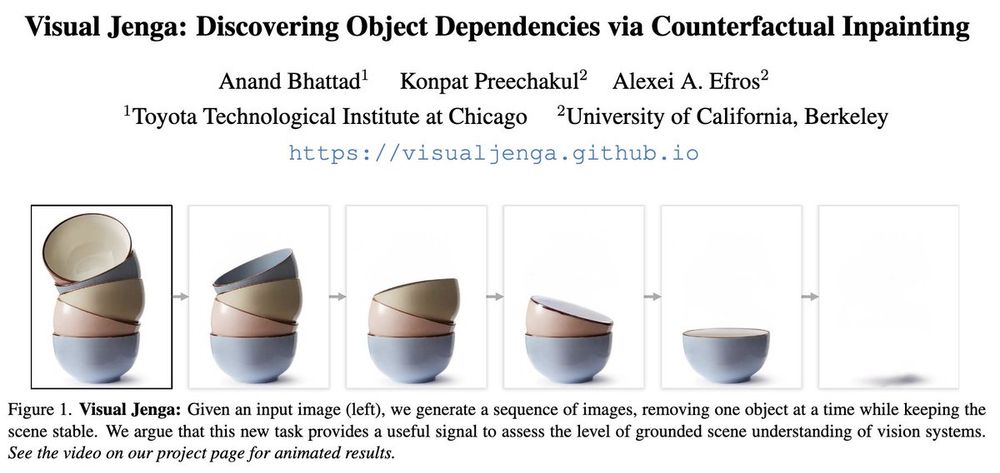

Models today can label pixels and detect objects with high accuracy. But does that mean they truly understand scenes?

Super excited to share our new paper and a new task in computer vision: Visual Jenga!

📄 arxiv.org/abs/2503.21770

🔗 visualjenga.github.io

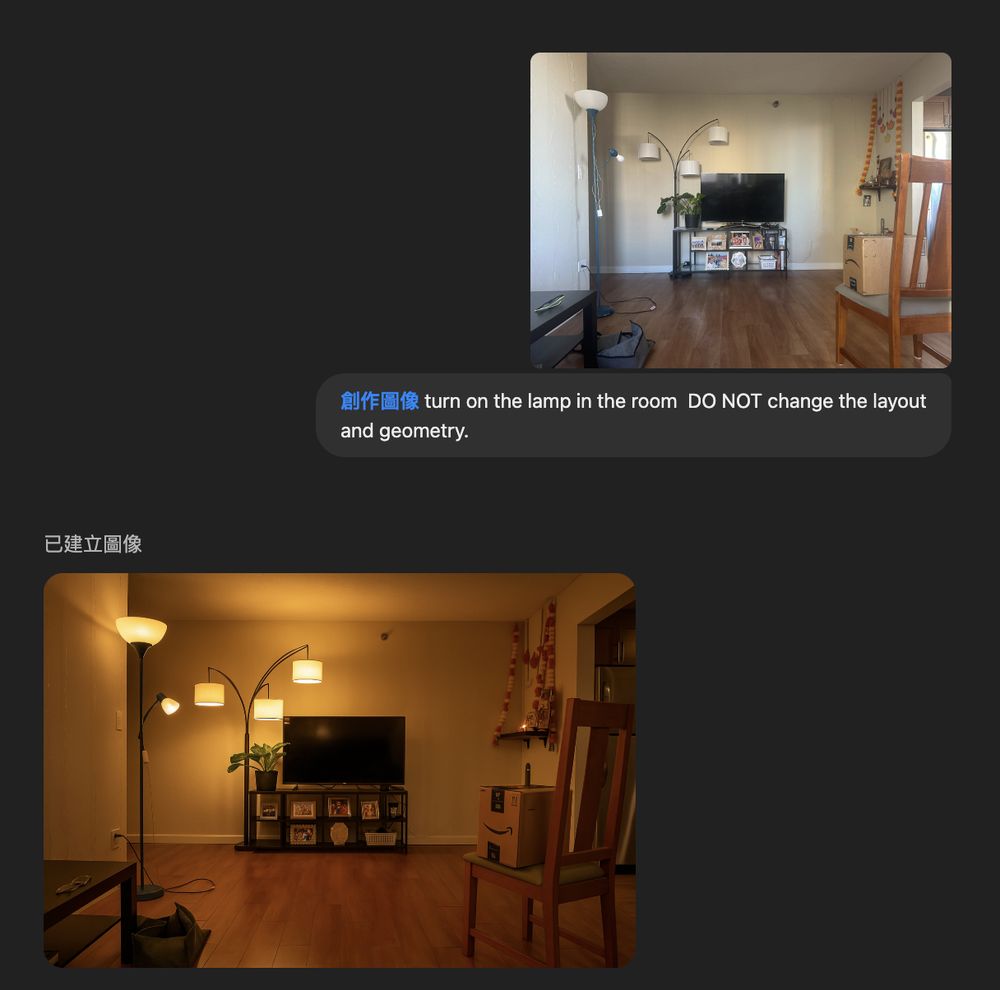

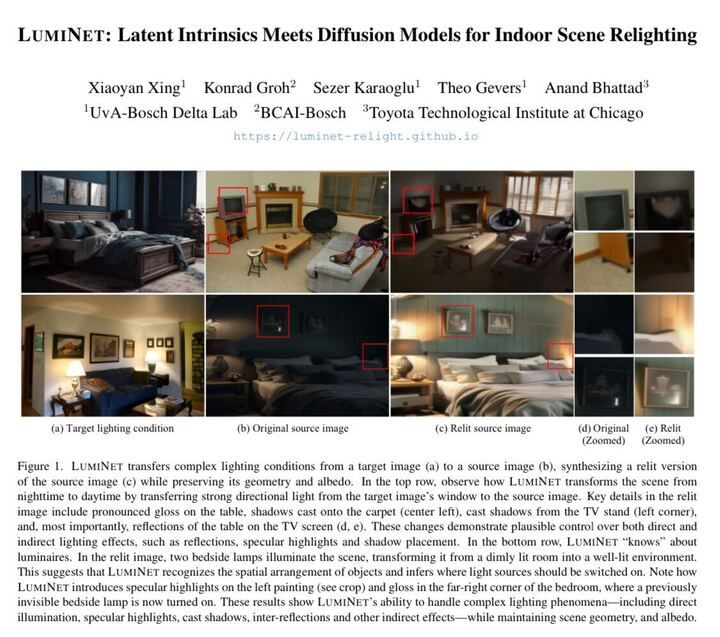

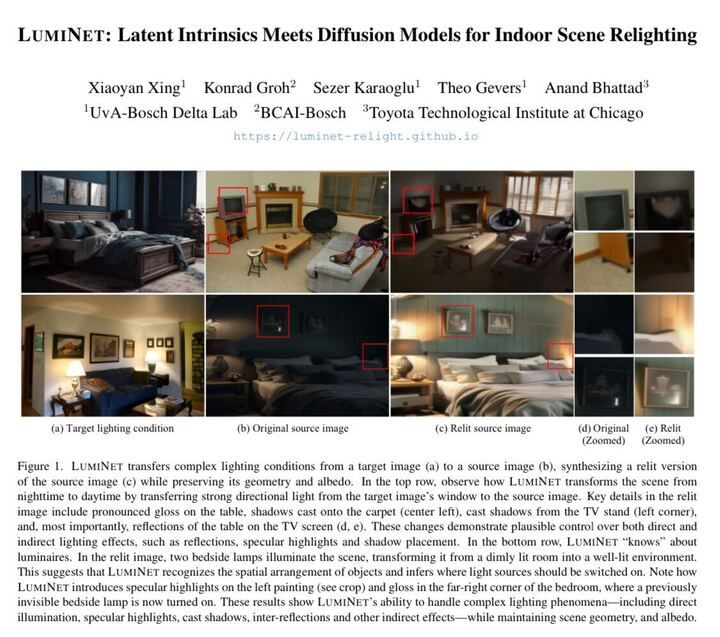

Xiaoyan, who’s been working with me on relighting, sent this over. It’s one of the hardest examples we’ve consistently used to stress-test LumiNet: luminet-relight.github.io

Check out our #3DV2025 UrbanIR paper, led by @chih-hao.bsky.social, which does exactly this.

🔗: urbaninverserendering.github.io

w/ Mathieu Garon and @jflalonde.bsky.social

Train a diffusion model as a renderer that takes intrinsic images as input. Once trained, we can perform zero-shot object compositing & can easily extend this to other object editing tasks, such as material swapping.

arxiv.org/abs/2410.08168

sites.google.com/view/standou...

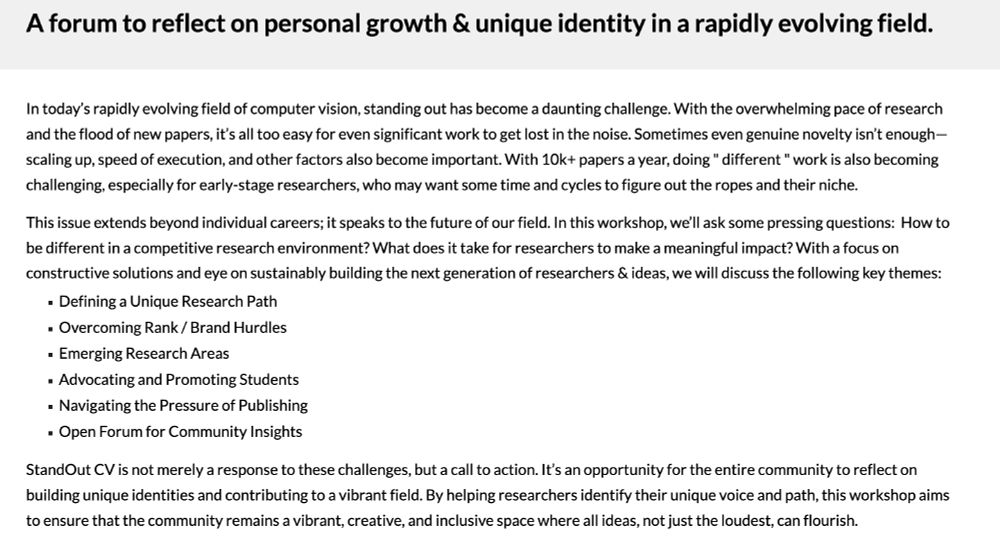

Here's what "Standing Out" means to us:

"A forum to reflect on personal growth & unique identity in a rapidly evolving field."

The goal is to help researchers find their authentic voice while building a more vibrant, inclusive CV community.

⚠️ Important: When registering for #CVPR2025, please check-mark our workshop in the interest form!

@cvprconference.bsky.social

We're excited to announce "How to Stand Out in the Crowd?" at #CVPR2025 Nashville - our 4th community-building workshop featuring this incredible speaker lineup!

🔗 sites.google.com/view/standou...

We discovered an easy way to extract albedo without any albedo-like images. By questioning why intrinsic image representations must be 3-channel maps, we trained a simple relighting model where intrinsics & lighting are latent variables.

We’re thrilled to release our 360° video dataset. A simple conditional diffusion model trained with explicit camera control can synthesize novel 3D scenes, all from a single image input! #NeurIPS2024

📍 East Exhibit Hall A-C, #1500

🗓️ Wed 11 Dec, 16:30 – 19:30 PT

Latent Intrinsics: Intrinsic image representation as latent variables, resulting in emergent albedo-like maps for free.

Code is now available: github.com/xiao7199/Lat...

arXiv: arxiv.org/abs/2405.21074

arXiv: arxiv.org/abs/2412.00177

project: luminet-relight.github.io

📝: Paper link: arxiv.org/abs/2412.00177

🔗: Project page: luminet-relight.github.io

🐦: Twitter thread (longer version): https://x.com/anand_bhattad/status/1864479658353242485

Work led by Xiaoyan Xing (PhD student at the University of Amsterdam)!

How accurate are these results? That's very hard to tell at the moment 🤔

But our tests on the MIT data, our user study, plus our qualitative results all point to us being on the right track. Gen models seem to know about how light interacts with our world.

Evaluation is done on the challenging MIT Multi-Illum dataset (the only real data we have with GT and similar lighting conditions across scenes). By combining latent intrinsics with generative models, we cut the error (RMSE) by 35%! See our paper for qualitative results.
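
For readers unfamiliar with the metric, here is a minimal sketch of how RMSE and a relative error reduction can be computed. The pixel values below are hypothetical and were picked only to illustrate the arithmetic; they are not results from the paper, and the function names are assumptions rather than the authors' evaluation code.

```python
import math


def rmse(pred, target):
    """Root-mean-square error between two equal-length sequences of floats."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and the same length")
    squared = [(p - t) ** 2 for p, t in zip(pred, target)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline_error, new_error):
    """Fraction by which new_error is lower than baseline_error."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error


# Hypothetical flattened pixel values (illustration only).
ground_truth = [0.0, 0.0, 0.0, 0.0]
baseline_pred = [0.2, 0.2, 0.2, 0.2]
improved_pred = [0.13, 0.13, 0.13, 0.13]

baseline_rmse = rmse(baseline_pred, ground_truth)
improved_rmse = rmse(improved_pred, ground_truth)
reduction = relative_reduction(baseline_rmse, improved_rmse)
print(f"relative RMSE reduction: {reduction:.0%}")
```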

We used our good old StyLitGAN from #CVPR2024 to generate diverse training data and filter it to get the top 1000 using CLIP. We combine this with the existing MIT Multi-Illum & Big Time datasets. About 2,500 unique images make up our training set.
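
The filtering step can be sketched as a plain top-k selection over per-image scores. This is only an illustration: the post does not say how the CLIP scores are computed, so the scores and file names below are hypothetical placeholders, and `top_k_by_score` is an assumed helper name.

```python
import heapq


def top_k_by_score(items, scores, k):
    """Return the k items with the highest scores, best first."""
    if len(items) != len(scores):
        raise ValueError("items and scores must have the same length")
    ranked = heapq.nlargest(k, zip(scores, items), key=lambda pair: pair[0])
    return [item for _, item in ranked]


# Hypothetical file names and scores; in practice each score would come
# from a CLIP-based quality or similarity measure.
names = ["a.png", "b.png", "c.png", "d.png"]
scores = [0.21, 0.87, 0.55, 0.70]

selected = top_k_by_score(names, scores, k=2)
print(selected)
```

Setting `k=1000` on the full pool of generated images would correspond to the "top 1000" mentioned above.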

Given two images (a source image to be relit and a target lighting condition), we first extract latent intrinsic image representations for both using our NeurIPS 2024 work, and then train a simple latent ControlNet.
