@jessicaschrouff.bsky.social @sanmikoyejo.bsky.social @sethlazar.org Hoda Heidari

To Eun Kim discussed his paper “Towards Fair RAG: On the Impact of Fair Ranking in Retrieval-Augmented Generation”

Join us TODAY for a full day of discussions on fairness metrics & evaluation. Schedule: afciworkshop.org/schedule

⏰ We start at 9 am with the opening remarks, followed by a keynote on Fairness Measurement by Hoda Heidari

My favorite part of the workshop 🥳

💬 Join our amazing leads* at the roundtables for insightful discussions on Fairness/Bias Metrics and Evaluation.

* @angelinawang.bsky.social, Candace Ross (FAIR), Tom Hartvigsen (UofVirginia)

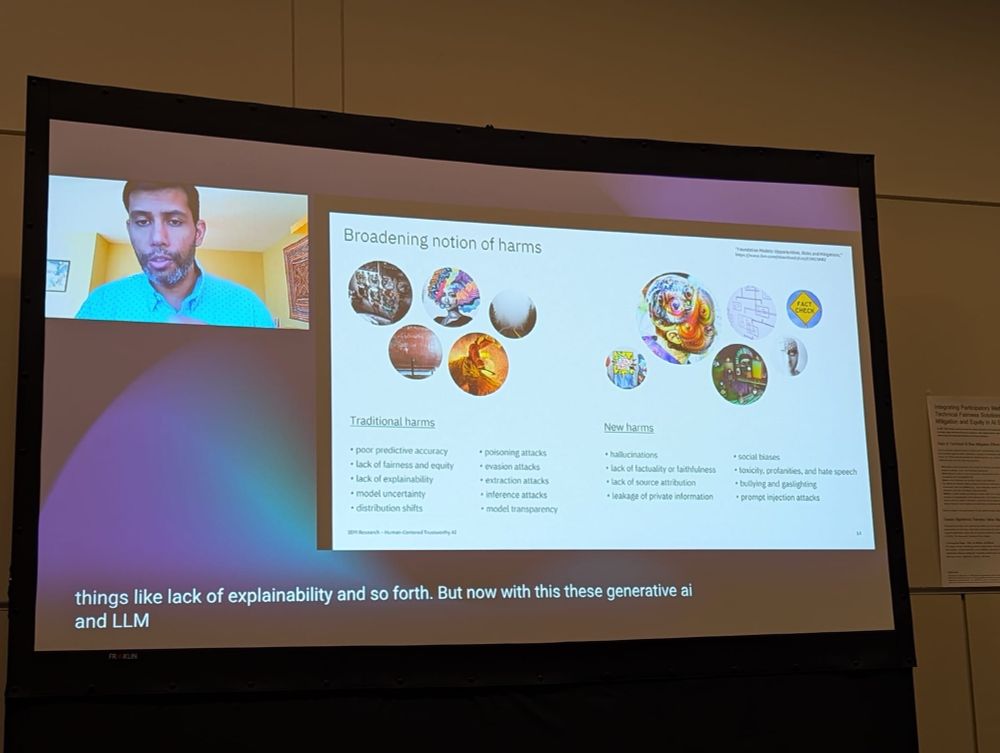

Join our expert panellists* for a timely discussion on “Rethinking fairness in the era of large language models”!!

* @jessicaschrouff.bsky.social, @sethlazar.org, Sanmi Koyejo, Hoda Heidari

The Nteasee Study arxiv.org/abs/2409.12197

and

The Case for Globalizing Fairness dl.acm.org/doi/10.1145/... (1/11)
