Abhinav Upadhyay

@abhi9u.bsky.social

NetBSD Dev | Python Internals, AI, compilers, databases, & performance engineering | https://blog.codingconfessions.com/

In my latest article, I talk about this in detail. The article walks through the Linux kernel code that handles system calls and breaks down the performance implications of each step the kernel takes.

Read it here: blog.codingconfessions.com/p/what-makes...

What Makes System Calls Expensive: A Linux Internals Deep Dive

An explanation of how Linux handles system calls on x86-64 and why they show up as expensive operations in performance profiles

blog.codingconfessions.com

September 21, 2025 at 12:47 PM

More importantly, system calls also slow down user-space code after it returns from the kernel, and this can be even more costly. It happens because the process loses its microarchitectural state: the instruction pipeline gets drained, branch predictor buffers get flushed, and so on.

September 21, 2025 at 12:47 PM
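
To make the branch-predictor part of this concrete, here is a toy model. It assumes, as described above, that every system call wipes the predictor state; how much state real hardware actually loses depends on the CPU and on which kernel mitigations are enabled. The predictor is a single 2-bit saturating counter, and `count_mispredictions` and `flush_every` are hypothetical names used only for illustration.

```python
def count_mispredictions(outcomes, flush_every=None):
    """Simulate one branch with a 2-bit counter; return the mispredictions.

    outcomes:    sequence of booleans (True = taken)
    flush_every: if set, reset the counter every N branches, modelling a
                 system call that wipes predictor state (an assumption)
    """
    counter = 1  # 0-1 predict not-taken, 2-3 predict taken
    misses = 0
    for i, taken in enumerate(outcomes):
        if flush_every and i > 0 and i % flush_every == 0:
            counter = 1  # modelled loss of state on the trip through the kernel
        predicted = counter >= 2
        if predicted != taken:
            misses += 1
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return misses


always_taken = [True] * 1000
print(count_mispredictions(always_taken))                  # 1
print(count_mispredictions(always_taken, flush_every=10))  # 100
```

The warm predictor misses only once, while the flushed one has to relearn the same trivial pattern after every simulated system call.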

Errata-2: A larger penalty on cache misses and branch misses increases the CPI (cycles per instruction) and lowers the IPC.

The article has all the details correctly explained.

July 13, 2025 at 2:23 PM

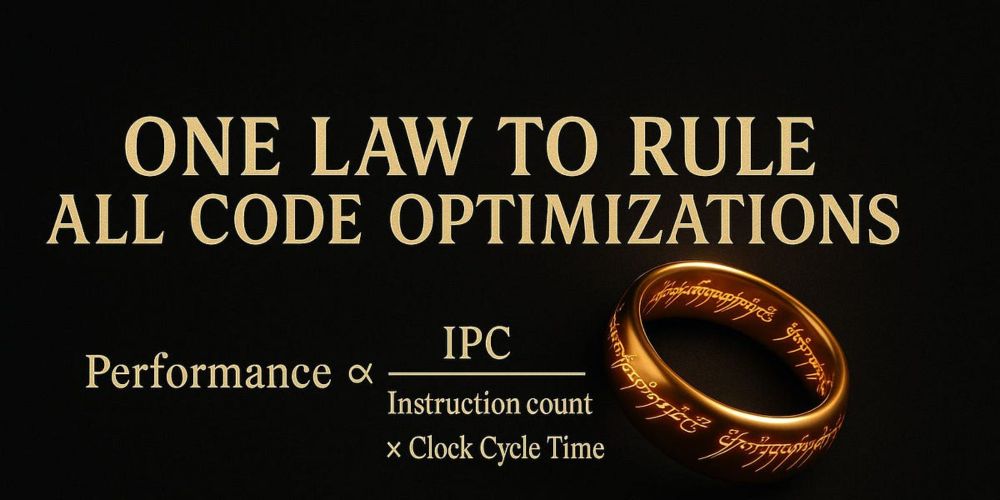

Errata-1: I meant to write the law as performance = 1 / (instruction count x cycles per instruction x cycle time).

July 13, 2025 at 2:23 PM

Read it here: blog.codingconfessions.com/p/one-law-to...

One Law to Rule Them All: The Iron Law of Software Performance

A systems-level reasoning model for understanding why optimizations succeed or fail.

blog.codingconfessions.com

July 13, 2025 at 1:50 PM

In my latest article, I show this connection with the help of several common optimizations:

- loop unrolling

- function inlining

- SIMD vectorization

- branch elimination

- cache optimization

If you are someone who thinks about performance, I think you will find it worth your time.

July 13, 2025 at 1:50 PM
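
As a rough illustration of the instruction-count side of this, the sketch below counts the instructions retired by a summation loop before and after 4x unrolling. The per-element and per-iteration costs are assumptions of a toy cost model, not measurements of any real compiler's output.

```python
def dynamic_instruction_count(n, unroll=1, work_per_elem=2, overhead_per_iter=2):
    """Instructions retired by a summation loop under a toy cost model.

    Assumed costs: `work_per_elem` instructions per element (a load and an
    add) and `overhead_per_iter` per iteration (an increment plus a
    compare-and-branch). n must be a multiple of `unroll`.
    """
    if n % unroll:
        raise ValueError("n must be a multiple of the unroll factor")
    iterations = n // unroll
    return n * work_per_elem + iterations * overhead_per_iter


print(dynamic_instruction_count(1024))            # 4096
print(dynamic_instruction_count(1024, unroll=4))  # 2560
```

Under these assumptions the loop overhead is paid once per four elements, so the count drops from 4096 to 2560. If CPI and cycle time stayed the same, the Iron Law would predict about a 1.6x speedup; in practice unrolling can also change CPI, for example through larger code and instruction-cache pressure.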

Reasoning about microarchitectural code optimizations works in much the same way. Each optimization affects one or more of these factors:

- number of instructions executed (dynamic instruction count)

- instructions executed per cycle (IPC)

- CPU cycle time (the length of one clock cycle)

July 13, 2025 at 1:50 PM
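
One way to put this into practice is to read instruction and cycle counts from hardware performance counters before and after a change, then split the overall speedup into the three factors. The helper below is a sketch of that arithmetic; the dictionary layout, the function name, and the numbers in the example are hypothetical.

```python
def iron_law_speedup(before, after):
    """Decompose a speedup into instruction-count, IPC and clock factors.

    `before` and `after` are dicts with 'instructions', 'cycles' and
    'freq_hz'. Returns (total, from_ic, from_ipc, from_clock), where
    total is the product of the three factors.
    """
    from_ic = before["instructions"] / after["instructions"]
    ipc_before = before["instructions"] / before["cycles"]
    ipc_after = after["instructions"] / after["cycles"]
    from_ipc = ipc_after / ipc_before
    from_clock = after["freq_hz"] / before["freq_hz"]
    return from_ic * from_ipc * from_clock, from_ic, from_ipc, from_clock


# Hypothetical counter readings for the same workload before and after a change.
before = {"instructions": 1_000_000_000, "cycles": 1_000_000_000, "freq_hz": 3e9}
after = {"instructions": 800_000_000, "cycles": 500_000_000, "freq_hz": 3e9}
total, from_ic, from_ipc, from_clock = iron_law_speedup(before, after)
print(round(total, 2), round(from_ic, 2), round(from_ipc, 2), round(from_clock, 2))
# 2.0 1.25 1.6 1.0
```

In this made-up example the change removed 20% of the instructions and also raised IPC from 1.0 to 1.6, and the two effects multiply into a 2x speedup.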

As an example: when increasing the depth of the instruction pipeline, CPU architects can use this law to decide how far they can increase the depth. A deeper pipeline reduces the cycle time, but it indirectly increases the CPI (lowering the IPC), because cache-miss and branch-miss penalties become more severe.

July 13, 2025 at 1:50 PM
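
A toy model of this trade-off, with illustrative numbers in arbitrary time units: the logic delay is split evenly across pipeline stages, each stage adds a fixed latch overhead, and a mispredicted branch is assumed to cost roughly one cycle per stage to refill the pipeline. Neither the numbers nor the model describe any real CPU.

```python
def time_per_instruction(depth, logic_delay=100.0, latch_overhead=2.0,
                         base_cpi=1.0, mispredicts_per_instr=0.02):
    """Average time per instruction (CPI x cycle time) for a pipeline depth.

    Assumptions: logic delay divides evenly over `depth` stages, each stage
    adds `latch_overhead`, and a misprediction costs about `depth` cycles.
    """
    cycle_time = logic_delay / depth + latch_overhead
    cpi = base_cpi + mispredicts_per_instr * depth
    return cpi * cycle_time


best = min(range(1, 101), key=time_per_instruction)
print(best)  # 50 with the default numbers
```

Shallow pipelines lose on cycle time and very deep ones lose on CPI, so with these numbers the best depth lands in the middle. Changing the misprediction rate moves that optimum, which is exactly the kind of question the law lets architects reason about.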

The Iron Law is used by CPU architects to analyse how a change in the CPU microarchitecture will affect the performance of the CPU.

The law defines CPU performance in terms of three factors:

Performance = 1 / (instruction count x cycles per instruction x cycle time)

July 13, 2025 at 1:50 PM
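
Written in its cycles-per-instruction form, the law is a one-line calculation. The sketch below takes the clock frequency instead of the cycle time (cycle time = 1 / frequency); the program size, CPI, and 4 GHz clock in the example are hypothetical.

```python
def execution_time(instruction_count, cpi, clock_hz):
    """Iron Law: instructions x cycles per instruction x cycle time.

    Cycle time is 1 / clock_hz, so dividing by the frequency is equivalent.
    """
    return instruction_count * cpi / clock_hz


def performance(instruction_count, cpi, clock_hz):
    """Performance is the reciprocal of execution time."""
    return 1.0 / execution_time(instruction_count, cpi, clock_hz)


# Hypothetical program: 2 billion instructions at a CPI of 0.5 on a 4 GHz clock.
print(execution_time(2e9, 0.5, 4e9))  # 0.25 (seconds)
print(performance(2e9, 0.5, 4e9))     # 4.0 (runs per second)
```

Because the three factors multiply, halving any one of them halves the execution time, as long as the other two do not move.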

This is where you wish for a theory to systematically reason about optimizations, so that you know why they work and why they sometimes don't. A framework that turns guesswork into surgical precision.

Does something like this exist? Maybe there is: the Iron Law of performance.

July 13, 2025 at 1:50 PM

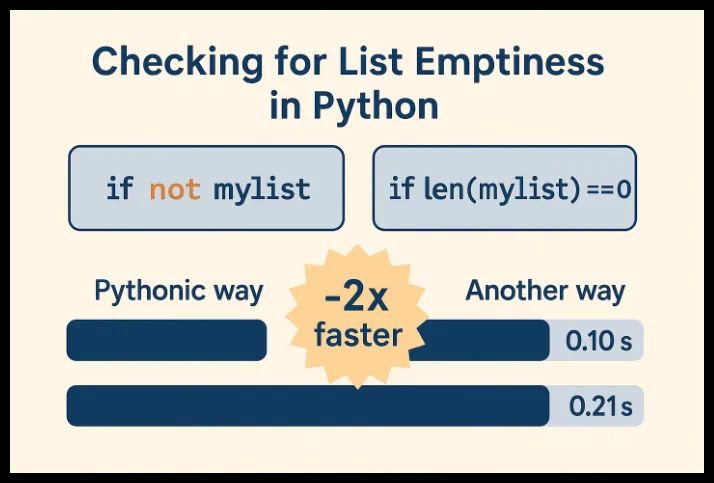

If you want a more detailed explanation of what is happening here, and of why the `not` operator doesn't need to do the same thing, see my article:

blog.codingconfessions.com/p/python-per...

April 12, 2025 at 8:43 AM

My article assumes no prior background in computer architecture. It uses real-world analogies to explain how the CPU works and why these features exist, and it gives code examples to put all of that knowledge into action.

blog.codingconfessions.com/p/hardware-a...

Hardware-Aware Coding: CPU Architecture Concepts Every Developer Should Know

Write faster code by understanding how it flows through your CPU

blog.codingconfessions.com

March 28, 2025 at 8:48 AM

A mispredicted branch usually wastes 20-30 cycles, so you don't want mispredicted branches on a critical path. To avoid them, you need to know how branch predictors work and which techniques help them predict branches better.

In the article you will find a couple of examples of how to do that.

March 28, 2025 at 8:48 AM

If the prediction is right, your program finishes execution faster.

If the prediction is wrong, the speculative work is wasted: the CPU has to discard it, fetch the correct instructions, and execute them from scratch.

March 28, 2025 at 8:48 AM
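
The cost of wrong predictions can be folded back into the Iron Law as extra cycles per instruction. A sketch with hypothetical workload numbers, using a 20-cycle penalty from the low end of the 20-30 cycle range mentioned in this thread:

```python
def effective_cpi(base_cpi, branch_fraction, mispredict_rate, penalty_cycles):
    """Average CPI after charging each mispredicted branch its flush penalty.

    branch_fraction: share of instructions that are conditional branches
    mispredict_rate: share of those branches that are predicted wrongly
    penalty_cycles:  cycles lost per misprediction
    """
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles


# Hypothetical workload: 20% branches, 5% of them mispredicted, 20-cycle penalty.
cpi = effective_cpi(0.5, 0.2, 0.05, 20)
print(round(cpi, 3))  # 0.7
```

With these numbers CPI rises from 0.5 to 0.7, so the same program takes about 1.4x as long even though only 1% of all instructions are mispredicted branches.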

Waiting for the branch to resolve would leave the instruction pipeline idle, which wastes resources.

To avoid wasting time and resources, the CPU uses branch predictors to predict the direction of a branch and speculatively executes the instructions on the predicted path.

March 28, 2025 at 8:48 AM
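
A common textbook building block for branch predictors is the 2-bit saturating counter. The sketch below is a single counter, not a model of any specific CPU's predictor (real ones keep tables of counters indexed by branch address and history), and it shows why the back-edge of a loop is easy to predict.

```python
class TwoBitPredictor:
    """A single 2-bit saturating counter.

    States 0-1 predict not-taken, 2-3 predict taken. Each outcome moves
    the counter one step, so one surprise does not flip a strong prediction.
    """

    def __init__(self):
        self.counter = 2  # start at "weakly taken" (an arbitrary choice)

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        if taken:
            self.counter = min(self.counter + 1, 3)
        else:
            self.counter = max(self.counter - 1, 0)


def simulate(outcomes):
    """Return how many outcomes the predictor got wrong."""
    p = TwoBitPredictor()
    misses = 0
    for taken in outcomes:
        if p.predict() != taken:
            misses += 1
        p.update(taken)
    return misses


# Back-edge of a 10-iteration loop, run 100 times: taken 9 times, then not taken.
loop_branch = ([True] * 9 + [False]) * 100
print(simulate(loop_branch))  # 100: only the loop exits are mispredicted
```

Because a single not-taken outcome only weakens the counter, the predictor keeps predicting "taken" when the loop is entered again, so it misses only once per loop run.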

Finally, branch prediction.

All real-world code is non-linear: it contains branches such as if/else, switch-case, function calls, and loops. The execution flow depends on the outcome of each branch, and that outcome is not known until the processor executes it.

March 28, 2025 at 8:48 AM

To build a performant system, you need to write code that takes advantage of how caches work.

In the article I explain how caches work and how you can write code that takes advantage of them to avoid the penalty of slow main memory access.

March 28, 2025 at 8:48 AM
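
To see why access order matters, the sketch below simulates a tiny direct-mapped cache (64 lines of 64 bytes with no prefetching, both assumptions chosen to keep the model small) and counts misses when walking a 256 x 256 array of 8-byte elements row by row versus column by column. The addresses model a C-style row-major array; Python lists are not laid out this way.

```python
def count_misses(addresses, num_lines=64, line_size=64):
    """Count misses in a direct-mapped cache (assumed: no prefetching)."""
    tags = [None] * num_lines  # which memory block each cache line holds
    misses = 0
    for addr in addresses:
        block = addr // line_size  # memory block containing this byte
        index = block % num_lines  # the only line this block may occupy
        if tags[index] != block:
            tags[index] = block    # miss: fetch the block, evict the old one
            misses += 1
    return misses


def matrix_addresses(rows, cols, elem_size=8, row_major=True):
    """Byte offsets touched when walking a C-style row-major array."""
    if row_major:
        order = ((r, c) for r in range(rows) for c in range(cols))
    else:
        order = ((r, c) for c in range(cols) for r in range(rows))
    return [(r * cols + c) * elem_size for r, c in order]


N = 256
print(count_misses(matrix_addresses(N, N)))                   # 8192
print(count_misses(matrix_addresses(N, N, row_major=False)))  # 65536
```

Row-major order uses each 64-byte line for 8 consecutive elements, so only 1 in 8 accesses misses; column-major order jumps 2048 bytes per step and, in this model, misses on every access.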

For example, an instruction could finish in 1 cycle, but its operands are often in main memory, and retrieving them from there takes hundreds of cycles. To hide this high latency, CPUs use caches: fetching from the L1 cache takes only 3-4 cycles.

March 28, 2025 at 8:48 AM
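
The effect of caches on average latency can be estimated with the standard average memory access time (AMAT) formula. The sketch assumes a single cache level, a 4-cycle L1 hit, and a hypothetical 200-cycle trip to main memory.

```python
def average_access_cycles(hit_cycles, miss_rate, miss_penalty_cycles):
    """Average memory access time for one cache level.

    AMAT = hit time + miss rate x miss penalty
    """
    return hit_cycles + miss_rate * miss_penalty_cycles


# Hypothetical latencies: 4-cycle L1 hit, 200 extra cycles to reach main memory.
for miss_rate in (0.0, 0.01, 0.10):
    print(miss_rate, average_access_cycles(4, miss_rate, 200))
```

Even a 1% miss rate raises the average from 4 to 6 cycles, and a 10% miss rate raises it to 24, which is why code that keeps its working set in cache matters so much.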

While instruction-level parallelism (ILP) enables the hardware to execute several instructions per cycle, those instructions are often bottlenecked on memory.

March 28, 2025 at 8:48 AM

But this is only possible when your code provides enough independent instructions to the hardware. Compilers usually try to optimize for this, but sometimes you need to do it yourself.

In the article I explain how ILP works, with code examples that demonstrate how you can take advantage of it.

March 28, 2025 at 8:48 AM
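
A common way to expose more independent instructions is to split a reduction into several accumulators. The sketch below shows the shape of that transformation, plus a toy model of the dependency chain's length that assumes unlimited adders and a 4-cycle add latency. In CPython itself, interpreter overhead hides the effect, so the pattern matters in compiled code.

```python
import math


def sum_with_accumulators(xs, k=4):
    """Sum xs using k independent running sums.

    Additions into different accumulators do not depend on each other,
    so an out-of-order core can overlap them.
    """
    acc = [0] * k
    for i, x in enumerate(xs):
        acc[i % k] += x          # element i feeds accumulator i mod k
    total = acc[0]
    for partial in acc[1:]:      # k - 1 dependent adds to combine
        total += partial
    return total


def critical_path_cycles(n, k, add_latency=4):
    """Cycles along the longest chain of dependent adds (toy model).

    Assumes unlimited adders, so only data dependences limit progress.
    """
    chain = math.ceil(n / k)     # adds into the busiest accumulator
    combine = k - 1              # adds needed to merge the partial sums
    return (chain + combine) * add_latency


print(critical_path_cycles(1024, 1))  # 4096
print(critical_path_cycles(1024, 4))  # 1036
```

Because this reassociates the additions, compilers generally will not apply it to floating-point sums on their own unless allowed to (for example with `-ffast-math` in GCC and Clang), which is one case where you may need to do it yourself.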
