https://www.linkedin.com/in/zrmor/

www.nytimes.com/2025/11/23/w...

🗓️Wed 11 June 2025

⏰2-3pm BST

Talk title: 'Neurobiological constraints on learning: bug or feature?'

ℹ️ Details / registration: www.eventbrite.co.uk/e/ucl-neuroa...

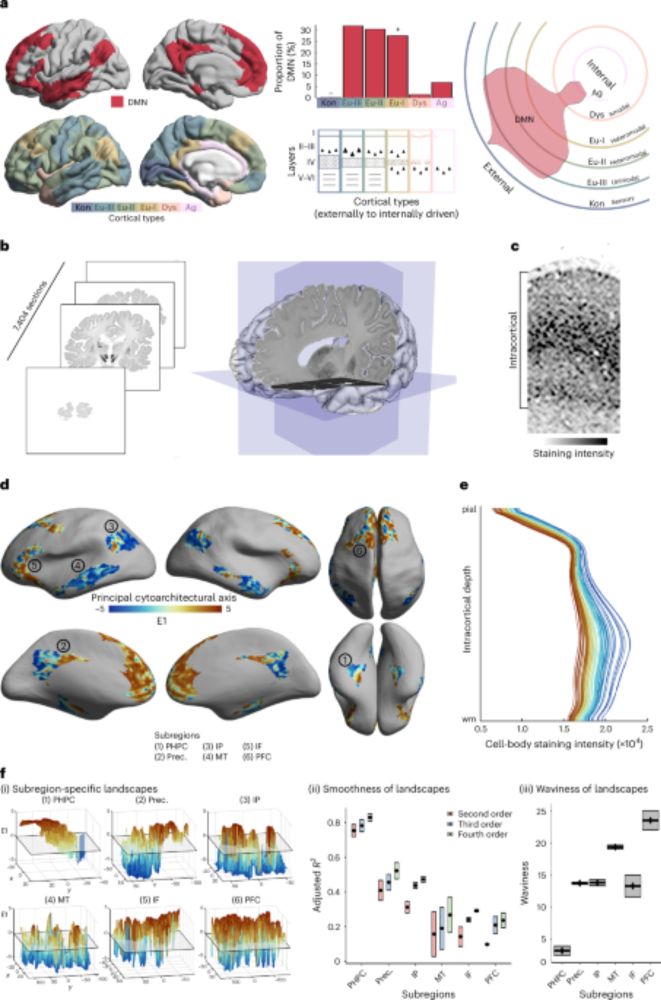

Cytoarchitecture, wiring and signal flow of the human default mode network

Combining 3D histology, 7T MRI, and connectomics to explore DMN structure-function associations

Led by Casey Paquola, @themindwanders.bsky.social & a terrific team of colleagues 🙏

www.biorxiv.org/content/10.1...

tl;dr: if you repeatedly give an animal a stimulus sequence XXXY, then throw in the occasional XXXX, there are large responses to the Y in XXXY, but not to the final X in XXXX, even though that's statistically "unexpected".

🧠📈 🧪

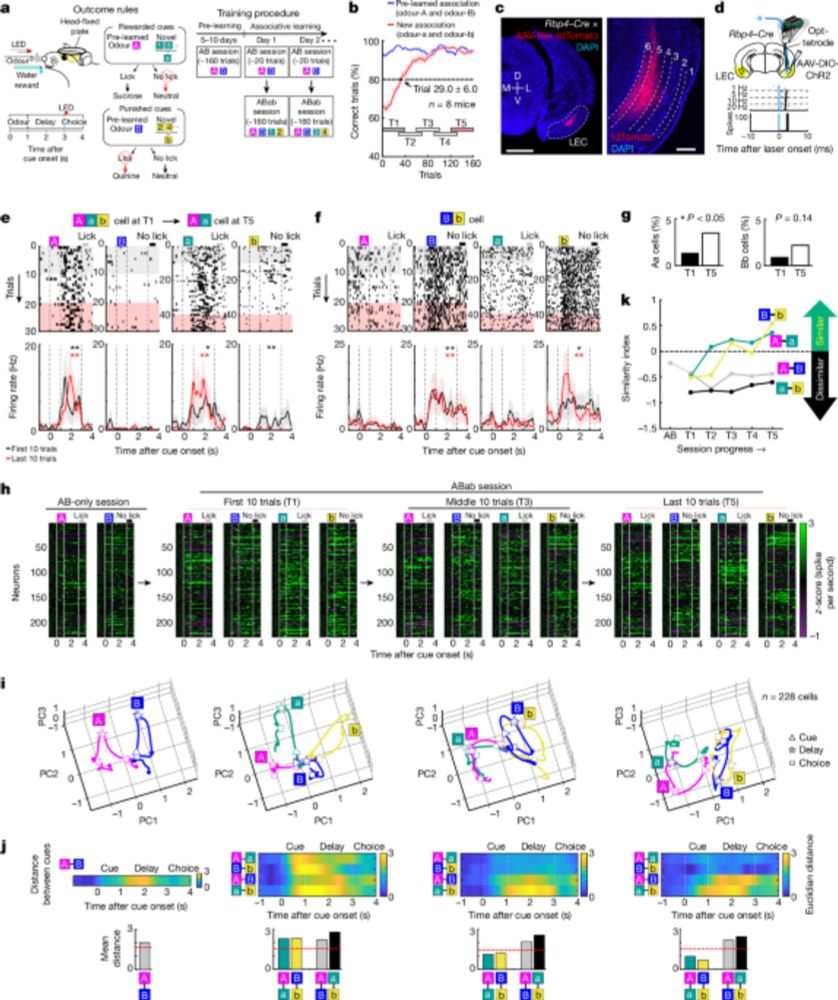

www.science.org/content/arti...

www.nature.com/articles/s41...

Studying this kind of outcome monitoring is going to be critical to understand the losses at play in the brain!

🧠📈

kempnerinstitute.harvard.edu/research/dee...

First column out now on that most convenient of all the fictions in neuroscience: averaging

thetransmitter.org/neural-codin...

I'd like to add to that confusing mix: Learning by thinking

www.cell.com/trends/cogni...

If I figure out something purely through self-reflection, is that "learning"? If not, why?

🧠📈 🧪

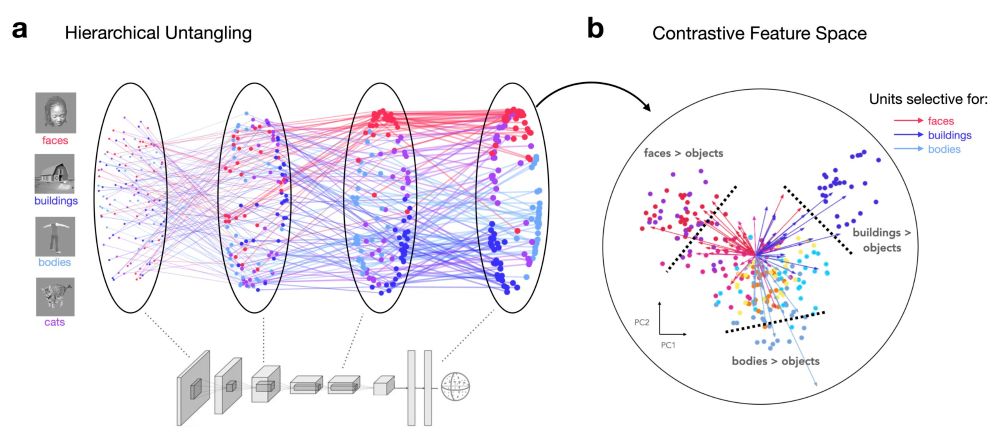

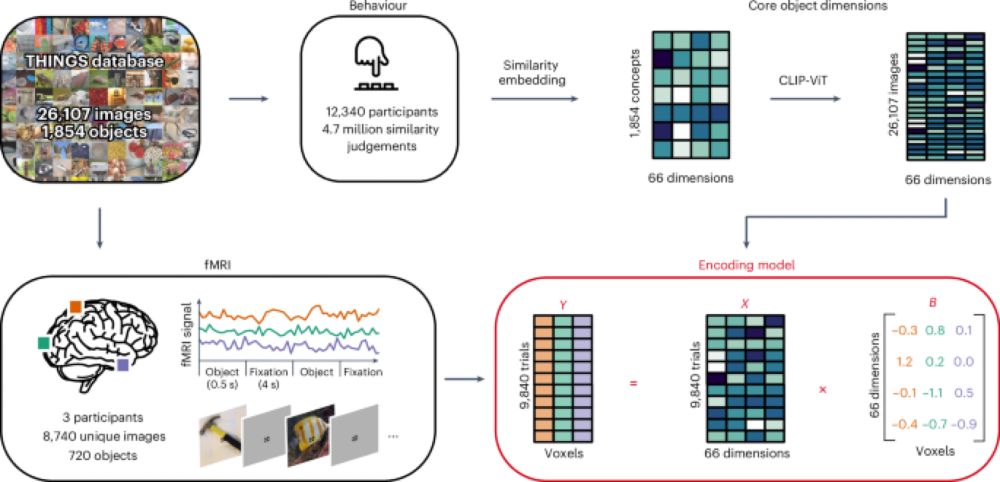

My takeaways:

1/ Clearly, semantic categories alone aren't enough to explain object perception or the neural system behind it.

#neuroscience #VisionScience

Increasingly, it looks like neural networks converge on the same representational structures - regardless of their specific losses and architectures - as long as they're big and trained on real-world data.

🧠📈 🧪
