Yuzhe Yang

@yuzheyang.bsky.social

1.7K followers

70 following

22 posts

Asst Prof @UCLA | RS @Google | PhD @MIT | BS @PKU

#ML, #AI, #health, #medicine

https://www.cs.ucla.edu/~yuzhe

Pinned

Yuzhe Yang

@yuzheyang.bsky.social

· Jun 17

SensorLM: Learning the Language of Wearable Sensors

We present SensorLM, a family of sensor-language foundation models that enable wearable sensor data understanding with natural language. Despite its pervasive nature, aligning and interpreting sensor ...

arxiv.org

Yuzhe Yang

@yuzheyang.bsky.social

· Dec 4

Hello world! I’m recruiting ~3 PhD students for Fall 2025 at UCLA 🚀

Please apply to UCLA CS or CompMed if you are interested in ML and (Gen)AI for healthcare / medicine / science.

See my website for more on my research & how to apply: people.csail.mit.edu/yuzhe

Yuzhe Yang

@yuzheyang.bsky.social

· Nov 26

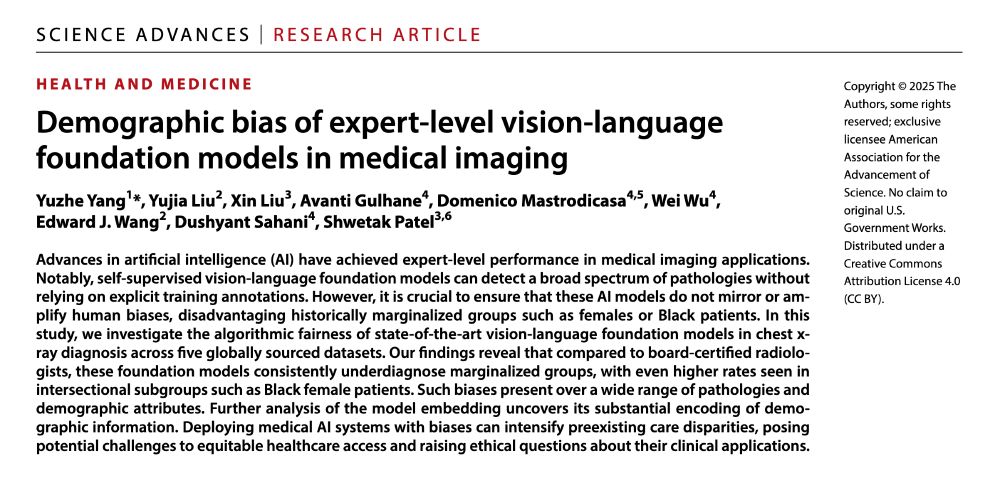

Yuzhe Yang

@yuzheyang.bsky.social

· Nov 25

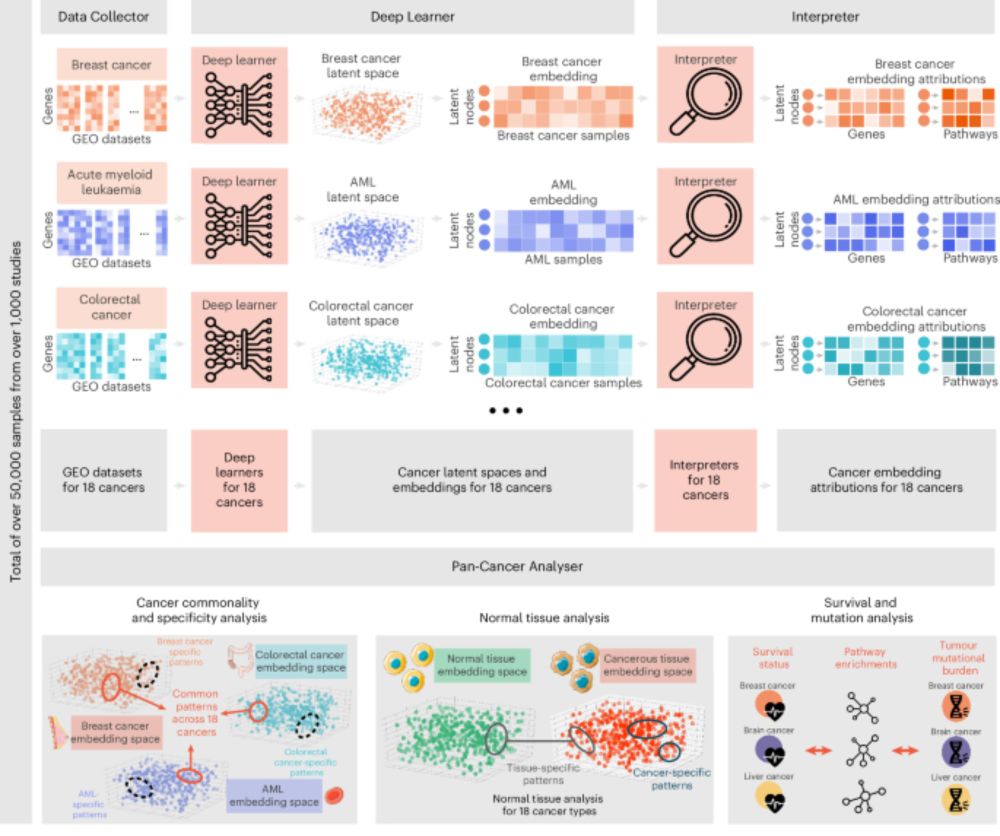

Reposted by Yuzhe Yang

Su-In Lee

@suinlee.bsky.social

· Nov 23

Yuzhe Yang

@yuzheyang.bsky.social

· Nov 20