https://wimmerth.github.io

We share findings on the iterative nature of reconstruction, the roles of cross and self-attention, and the emergence of correspondences across the network [1/8] ⬇️

Michal Stary, Julien Gaubil, Ayush Tewari, Vincent Sitzmann

arxiv.org/abs/2510.24907

Trending on www.scholar-inbox.com

✨ AnyUp: Universal Feature Upsampling 🔎

Upsample any feature - really any feature - with the same upsampler, no need for cumbersome retraining.

SOTA feature upsampling results while being feature-agnostic at inference time.

🌐 wimmerth.github.io/anyup/

💡 It works if we leverage a self-supervised representation!

Meet RepTok🦎: A generative model that encodes an image into a single continuous latent while keeping realism and semantics. 🧵 👇

We do the simplest thing: just train a model (e.g., a next-token predictor) on all elements of the concatenated dataset [X,Y,Z].

You end up with a better model of dataset X than if you had trained on X alone!

6/9

Come and check out our poster on learning better features for semantic correspondence in Hawaii!

📍 Poster #538 (Session 2)

🗓️ Oct 21 | 3:15 – 5:00 p.m. HST

genintel.github.io/DIY-SC

iccv.thecvf.com/Conferences/...

In DIY-SC, we improve foundational features using a lightweight adapter trained with carefully filtered and refined pseudo-labels.

🔧 Drop-in alternative to plain DINOv2 features!

📦 Code + pre-trained weights available now.

🔥 Try it in your next vision project!

It refines DINOv2 or SD+DINOv2 features and achieves a new SOTA on the semantic correspondence dataset SPair-71k when not relying on annotated keypoints! [1/6]

genintel.github.io/DIY-SC

We’re proud to announce that we have 5 papers accepted to the main conference and 7 papers accepted at various CVPR workshops this year!

We’re looking forward to sharing our research with the community in Nashville!

Stay tuned for more details! @mpi-inf.mpg.de

#computervision #machinelearning #research

This work was a great collaboration with @moechsle.bsky.social, @miniemeyer.bsky.social, and Federico Tombari.

🧵⬇️

The answer is yes!

Take a look at Spatial Reasoning Models. Hats off for this amazing work!

Naively, no. But with sequentialization and the correct order, they can!

Check out @chriswewer.bsky.social's and Bart's SRMs for details.

Project: geometric-rl.mpi-inf.mpg.de/srm/

Paper: arxiv.org/abs/2502.21075

Code: github.com/Chrixtar/SRM

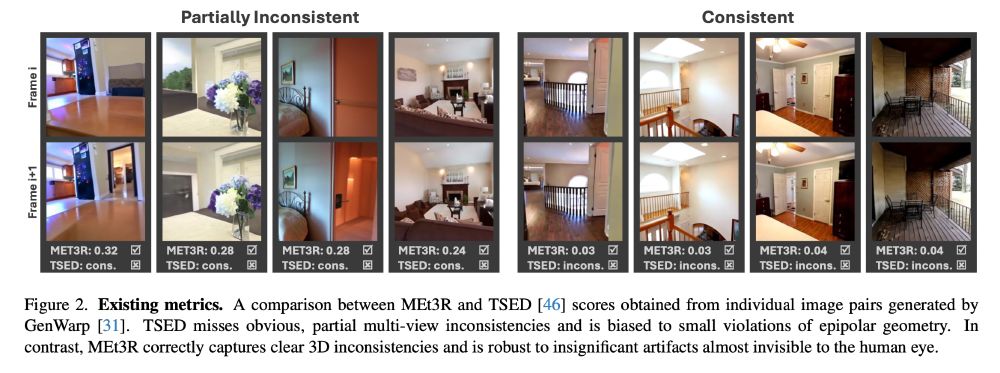

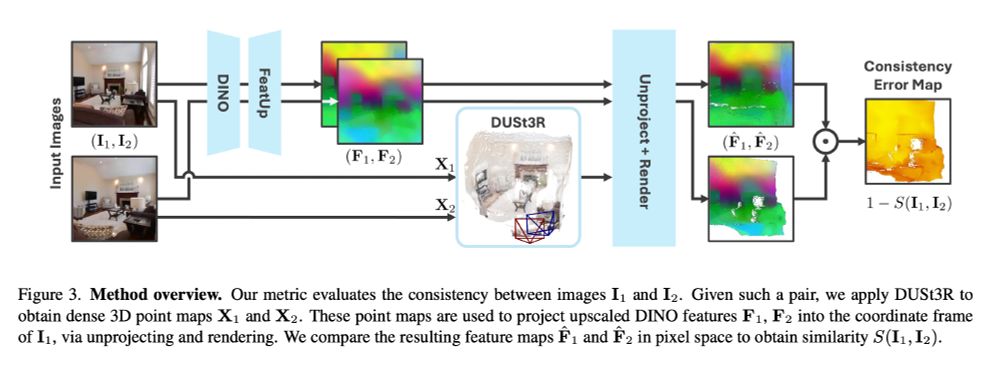

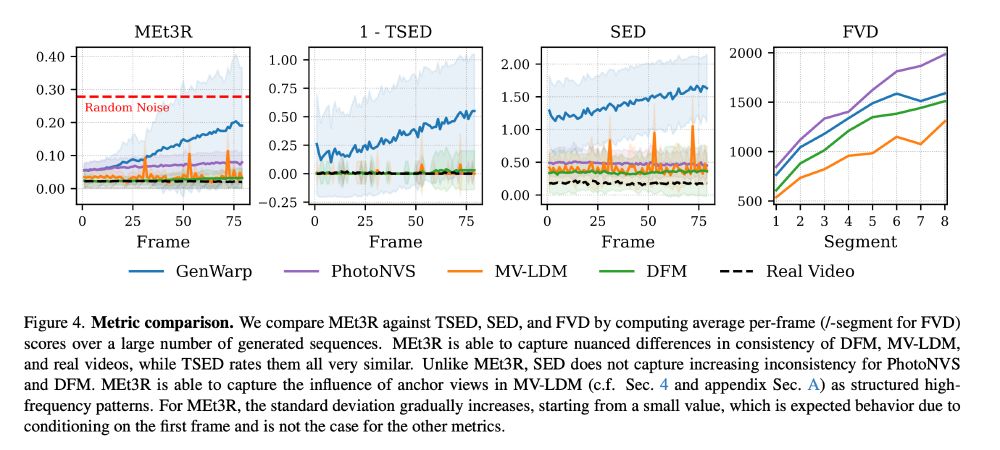

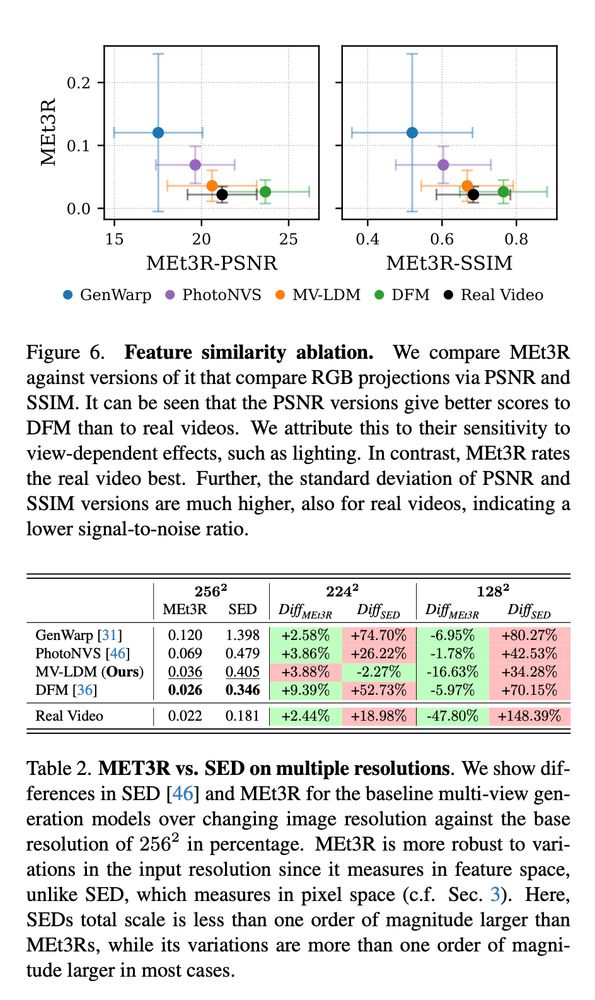

Introducing 𝗠𝗘𝘁𝟯𝗥: 𝗠𝗲𝗮𝘀𝘂𝗿𝗶𝗻𝗴 𝗠𝘂𝗹𝘁𝗶-𝗩𝗶𝗲𝘄 𝗖𝗼𝗻𝘀𝗶𝘀𝘁𝗲𝗻𝗰𝘆 𝗶𝗻 𝗚𝗲𝗻𝗲𝗿𝗮𝘁𝗲𝗱 𝗜𝗺𝗮𝗴𝗲𝘀.

A lack of 3D consistency in generated images is a limitation of many current multi-view/video/world generative models. To quantitatively measure these inconsistencies, check out Mohammad Asim's new work!

We realized that we often lack metrics for comparing the quality of video and multi-view diffusion models. Quantifying multi-view 3D consistency across frames is especially difficult.

But not anymore: Introducing MEt3R 🧵

Mohammad Asim, Christopher Wewer, Thomas Wimmer, Bernt Schiele, Jan Eric Lenssen

tl;dr: DUSt3R + DINO + FeatUp together want to be FID for multiview generation

arxiv.org/abs/2501.06336

Mohammad Asim, Christopher Wewer, @wimmerthomas.bsky.social, Bernt Schiele, Jan Eric Lenssen

tl;dr: DUSt3R-based method to measure multi-view consistency of generated views without given camera poses

arxiv.org/abs/2501.06336