linkedin.com/in/tolga-bilge

newsletter.tolgabilge.com

Prohibiting the development of superintelligence can prevent this risk.

We’ve just launched a new campaign to get this done.

We've been compiling a growing list of examples of AI companies saying one thing, and doing the opposite:

controlai.news/p/art...

TODAY: Politicians across the UK political spectrum back our campaign for binding rules on dangerous AI development.

This is the first time a coalition of parliamentarians has acknowledged the extinction threat posed by AI.

1/6

What are the facts?

— Trump announced Stargate

— Elon Musk says they don’t have the money

— Nadella says his $80b is for Azure

— Trump doesn’t know if they have it

— Reporting suggests they may only have $52b

newsletter.tolgabilge.com/p/stargate-g...

Top AI scientists, and even the CEOs of the biggest AI companies themselves, have warned that AI threatens human extinction.

The time for action is now. Sign below 👇

controlai.com/public...

AI companies often say one thing and do the opposite. We’ve been watching closely, and have been compiling a list of examples:

controlai.news/p/art...

AI development is advancing rapidly, and we may soon have AI systems that surpass humans in intelligence, yet we have no way to control them. Our very existence is at stake.

This could be the biggest deal in history.

🧵

This was in reference to a prediction he made back in 2011. He also thought there was a 5 to 50% chance of human extinction within a year of human-level AI being built!

We've collected some predictions for AI in 2025, by Elon Musk, Sam Altman, Dario Amodei, Gary Marcus, and Eli Lifland.

Get them in our free weekly newsletter 👇

controlai.news/p/the...

Her boss, Sam Altman, is now bragging about how OpenAI is rushing to create superintelligence.

newsletter.tolgabilge.com/p/two-years-of-ai-politics-past-present

1️⃣ Voters back AI policy focus on preventing extreme risks

2️⃣ Meta asks the government to block OpenAI's for-profit switch

3️⃣ Eric Schmidt warns there's a time to unplug AI

Get our free newsletter:

controlai.news/p/con...

1️⃣ OpenAI launches o1, which in tests tries to avoid shutdown

2️⃣ Google DeepMind launches Gemini 2.0

3️⃣ Comments by incoming AI czar David Sacks on AGI threat resurface

Get our free newsletter here 👇

controlai.news/p/sub...

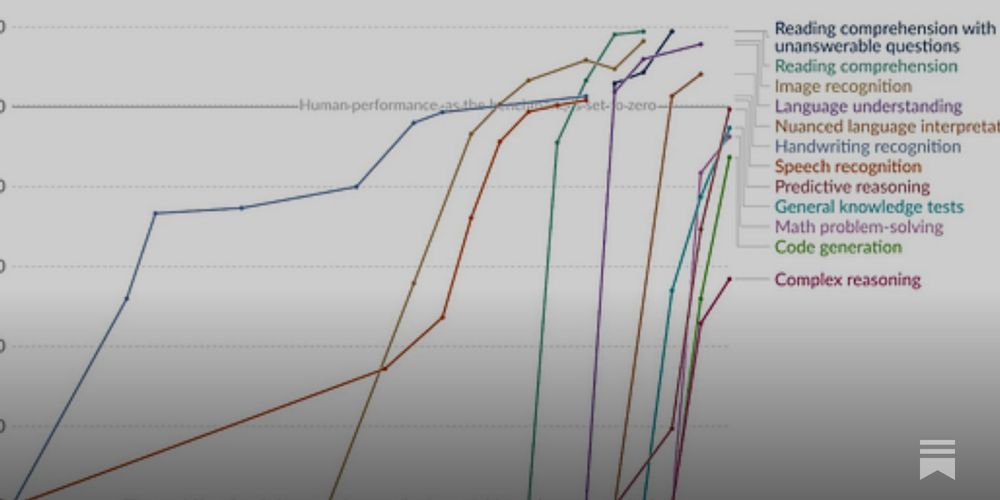

Creating AGI depends simply on enabling it to perform the intellectual tasks that we can.

Once AI can do that, we are on a path to godlike AI — systems so beyond our reach that they pose the risk of human extinction.

🧵

1️⃣ AI helps hackers mine sensitive data

2️⃣ Google DeepMind predicts weather more accurately than leading system

3️⃣ xAI plans massive expansion of its Memphis supercomputer

Get our free newsletter here 👇

controlai.news/p/con...

⬥ AI labs can't police themselves; more regulation is needed

⬥ They support AI Safety Institute testing of AI models, and this should be mandatory

⬥ AI safety testing is more important than US-China competition

1️⃣ Biden and Xi agree AI shouldn’t control nuclear weapons

2️⃣ A US government commission recommends a race to AGI

3️⃣ Bengio writes about advances in the ability of AI to reason

controlai.news/p/con...
