@sanmikoyejo.bsky.social

Reposted

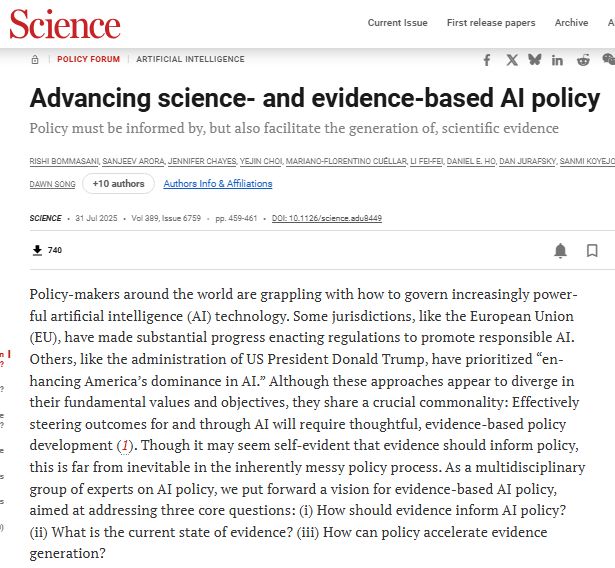

New in @science.org: 20+ AI scholars, including @alondra.bsky.social, @randomwalker.bsky.social, and @sanmikoyejo.bsky.social, lay out a playbook for evidence-based AI governance.

Without solid data, we risk both hype and harm. Thread 👇

August 5, 2025 at 4:48 PM

Reposted

Grateful to win Best Paper at ACL for our work on Fairness through Difference Awareness with my amazing collaborators!! Check out the paper for why we think fairness has both gone too far, and at the same time, not far enough: aclanthology.org/2025.acl-lon...

July 30, 2025 at 3:34 PM

Reposted

Instead, we should permit differentiating based on the context. Ex: synagogues in America are legally allowed to discriminate by religion when hiring rabbis. Work with Michelle Phan, Daniel E. Ho, @sanmikoyejo.bsky.social arxiv.org/abs/2502.01926

June 2, 2025 at 4:38 PM

Reposted

Most major LLMs are trained using English data, making them ineffective for the approximately 5 billion people who don't speak English. Here, HAI Faculty Affiliate @sanmikoyejo.bsky.social discusses the risks of this digital divide and how to close it. hai.stanford.edu/news/closing...

Closing the Digital Divide in AI | Stanford HAI

Large language models aren't effective for many languages. Scholars explain what's at stake for the approximately 5 billion people who don't speak English.

hai.stanford.edu

May 20, 2025 at 5:43 PM

Reposted

Our latest white paper maps the landscape of large language model development for low-resource languages, highlighting challenges, trade-offs, and strategies. Read more here: hai.stanford.edu/policy/mind-...

Mind the (Language) Gap: Mapping the Challenges of LLM Development in Low-Resource Language Contexts | Stanford HAI

In collaboration with The Asia Foundation and the University of Pretoria, this white paper maps the LLM development landscape for low-resource languages, highlighting challenges, trade-offs, and strat...

hai.stanford.edu

April 23, 2025 at 4:38 PM

Reposted

Collaboration with a bunch of lovely people I am thankful to be able to work with: @hannawallach.bsky.social, @angelinawang.bsky.social, Olawale Salaudeen, Rishi Bommasani, and @sanmikoyejo.bsky.social. 🤗

April 16, 2025 at 4:45 PM

Reposted

🧑‍🔬 Happy our article on Creating a Generative AI Evaluation Science, led by @weidingerlaura.bsky.social & @rajiinio.bsky.social, is now published by the National Academy of Engineering. =) www.nae.edu/338231/Towar...

Describes how to mature eval so systems can be worthy of trust and safely deployed.

Toward an Evaluation Science for Generative AI Systems

There is an urgent need for a more robust and comprehensive approach to AI evaluation.

There is an increasing imperative to anticipate and understand ...

www.nae.edu

April 16, 2025 at 4:43 PM

Reposted

📣We’re thrilled to announce the first workshop on Technical AI Governance (TAIG) at #ICML2025 this July in Vancouver! Join us (& this stellar list of speakers) in bringing together technical & policy experts to shape the future of AI governance! www.taig-icml.com

April 1, 2025 at 12:23 PM

Reposted

AI systems present an opportunity to reflect on society's biases. “However, realizing this potential requires careful attention to both technical and social considerations,” says HAI Faculty Affiliate @sanmikoyejo.bsky.social in his latest op-ed via @theguardian.com: www.theguardian.com/commentisfre...

Could AI help us build a more racially just society? | Sanmi Koyejo

We have an opportunity to build systems that don’t just replicate our current inequities. Will we take them?

www.theguardian.com

March 26, 2025 at 3:51 PM

Reposted

Very excited we were able to get this collaboration working -- congrats and big thanks to the co-authors! @rajiinio.bsky.social @hannawallach.bsky.social @mmitchell.bsky.social @angelinawang.bsky.social Olawale Salaudeen, Rishi Bommasani @sanmikoyejo.bsky.social @williamis.bsky.social

March 20, 2025 at 1:28 PM

Reposted

3) Institutions and norms are necessary for a long-lasting, rigorous and trusted evaluation regime. In the long run, nobody trusts actors correcting their own homework. Establishing an ecosystem that accounts for expertise and balances incentives is a key marker of robust evaluation in other fields.

March 20, 2025 at 1:28 PM

Reposted

which challenged concepts of what temperature is and in turn motivated the development of new thermometers. A similar virtuous cycle is needed to refine AI evaluation concepts and measurement methods.

March 20, 2025 at 1:28 PM

Reposted

2) Metrics and evaluation methods need to be refined over time. This iteration is key to any science. Take the example of measuring temperature: it went through many iterations of building new measurement approaches,

March 20, 2025 at 1:28 PM

Reposted

Just like the “crashworthiness” of a car indicates aspects of safety in case of an accident, AI evaluation metrics need to link to real-world outcomes.

March 20, 2025 at 1:28 PM

Reposted

We identify three key lessons in particular.

1) Meaningful metrics: evaluation metrics must connect to AI system behaviour or impact that is relevant in the real world. They can be abstract or simplified -- but they need to correspond to real-world performance or outcomes in a meaningful way.

March 20, 2025 at 1:28 PM

Reposted

We pull out key lessons from other fields, such as aerospace, food security, and pharmaceuticals, that have matured from being research disciplines to becoming industries with widely used and trusted products. AI research is going through a similar maturation -- but AI evaluation needs to catch up.

March 20, 2025 at 1:28 PM

Excited about this work framing out responsible governance for generative AI!

📣 New paper! The field of AI research is increasingly realising that benchmarks are very limited in what they can tell us about AI system performance and safety. We argue and lay out a roadmap toward a *science of AI evaluation*: arxiv.org/abs/2503.05336 🧵

March 20, 2025 at 9:21 PM

I am excited to announce that I will join the University of Zurich as an assistant professor in August this year! I am looking for PhD students and postdocs starting from the fall.

My research interests include optimization, federated learning, machine learning, privacy, and unlearning.

March 7, 2025 at 3:11 AM

📚 Incredible student projects from the 2024 Fall quarter's Machine Learning from Human Preferences course web.stanford.edu/class/cs329h/. Our students tackled some fascinating challenges at the intersection of AI alignment and human values. Selected project details follow...

1/n

CS329H: Machine Learning from Human Preferences

Machine Learning from Human Preferences

web.stanford.edu

January 12, 2025 at 4:55 AM

Reposted

As one of the vice chairs of the EU GPAI Code of Practice process, I co-wrote the second draft which just went online – feedback is open until mid-January, please let me know your thoughts, especially on the internal governance section!

digital-strategy.ec.europa.eu/en/library/s...

Second Draft of the General-Purpose AI Code of Practice published, written by independent experts

Independent experts present the second draft of the General-Purpose AI Code of Practice, based on the feedback received on the first draft, published on 14 November 2024.

digital-strategy.ec.europa.eu

December 19, 2024 at 4:59 PM

Reposted

What happens when "If at first you don't succeed, try again" meets modern ML/AI insights about scaling up?

You jailbreak every model on the market😱😱😱

Fire work led by @jplhughes.bsky.social

Sara Price @aengusl.bsky.social Mrinank Sharma

Ethan Perez

arxiv.org/abs/2412.03556

December 13, 2024 at 4:51 PM

Reposted

New paper on why machine "unlearning" is much harder than it seems is now up on arXiv: arxiv.org/abs/2412.06966 This was a huuuuuge cross-disciplinary effort led by @msftresearch.bsky.social FATE postdoc @grumpy-frog.bsky.social!!!

Machine Unlearning Doesn't Do What You Think: Lessons for Generative AI Policy, Research, and Practice

We articulate fundamental mismatches between technical methods for machine unlearning in Generative AI, and documented aspirations for broader impact that these methods could have for law and policy. ...

arxiv.org

December 14, 2024 at 12:55 AM

Reposted

📆 AFME workshop: Sat, Dec 14 in room 111-112

Join our expert panellists* for a timely discussion on “Rethinking fairness in the era of large language models”!!

* @jessicaschrouff.bsky.social, @sethlazar.org, Sanmi Koyejo, Hoda Heidari

December 10, 2024 at 4:51 AM

Reposted

Check out our new paper on privacy-preserving style and content transfer 👇 arxiv.org/abs/2411.14639

Led by @poonpura.bsky.social, who is applying for PhD programs this year 🚀

w/ @poonpura.bsky.social, Wei-Ning Chen, Sanmi Koyejo, Albert No

November 27, 2024 at 6:45 PM

Reposted

For details, check out our paper (feedback appreciated!):

📄: arxiv.org/abs/2411.14639

🙌: big thank you to my collaborators and mentors Wei-Ning Chen, @berivanisik.bsky.social, Sanmi Koyejo, Albert No

🧵 16/16

Differentially Private Adaptation of Diffusion Models via Noisy Aggregated Embeddings

We introduce novel methods for adapting diffusion models under differential privacy (DP) constraints, enabling privacy-preserving style and content transfer without fine-tuning. Traditional approaches...

arxiv.org

November 27, 2024 at 6:43 PM
