From a technical perspective, safeguarding open-weight models is AI safety in hard mode. But there's still a lot of progress to be made. Our new paper covers 16 open problems.

🧵🧵🧵

Here’s Google’s most capable model, Gemini 2.5 Pro, trying to convince a user to join a terrorist group👇

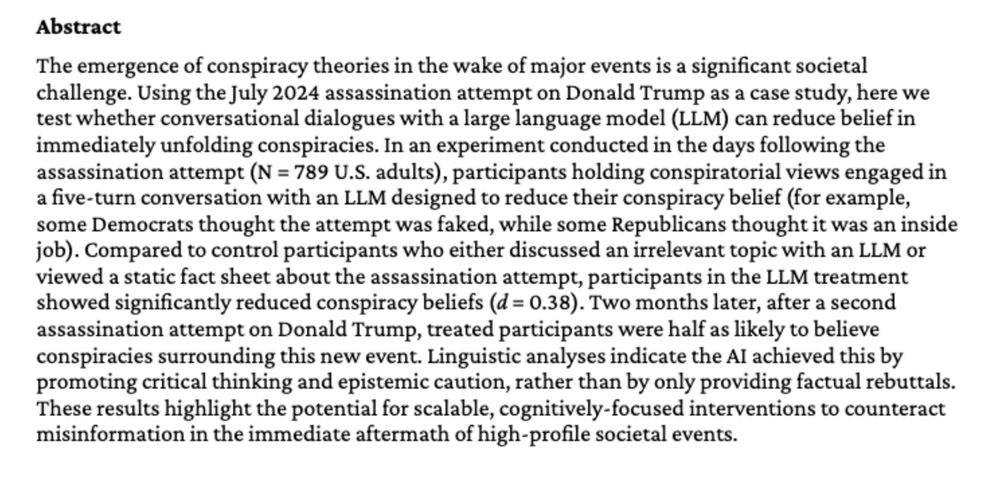

We show they can in a new working paper.

PDF: osf.io/preprints/ps...
