Jevan Hutson

@jevan.bsky.social

data privacy/cybersecurity attorney by day, tech law professor/clinic director by night. into data rights, not into data wrongs.

Reposted by Jevan Hutson

No, no it cannot. Looks like we need to re-up our paper @jevan.bsky.social

A new paper suggests a photo can tell a recruiter much about an applicant’s personality

Should facial analysis help determine whom companies hire?

econ.st

November 6, 2025 at 7:51 PM

Reposted by Jevan Hutson

“Terrible things are happening outside. Poor helpless people are being dragged out of their homes. Families are torn apart. Men, women, and children are separated. Children come home from school to find that their parents have disappeared.”

Diary of Anne Frank

January 13, 1943

October 4, 2025 at 8:17 PM

Reposted by Jevan Hutson

📣🚨NEW: ☁️ Big Cloud—Google, Microsoft & Amazon—control two thirds of the cloud compute market. They’re getting rich off the AI gold rush.

In new work with @nathanckim.bsky.social, we show how Big Cloud is expanding their empire by scrutinizing their *investments*… 🧵

📄PDF: dx.doi.org/10.2139/ssrn...

August 6, 2025 at 2:40 PM

Reposted by Jevan Hutson

I'm delighted to share that my article, AI and Doctrinal Collapse, is forthcoming in Stanford Law Review! Draft at papers.ssrn.com/sol3/papers.....

August 17, 2025 at 3:25 PM

Reposted by Jevan Hutson

Judge a society by how it treats its most vulnerable members

ICE is sweeping up people with disabilities now, making no accommodations for them.

www.latimes.com/california/s...

Deaf, mute and terrified: ICE arrests DACA recipient and ships him to Texas

Javier Diaz Santana, who is deaf and mute, was swept up in a federal immigration raid at his job in Temple City, unable to communicate in handcuffs. His attorney says U.S. agents "don’t care whether y...

www.latimes.com

July 22, 2025 at 5:14 PM

Reposted by Jevan Hutson

Excellent scoop by @eileenguo.bsky.social! This is a timely reminder: always keep private data off public sites. If it's out there, it will likely be harvested. Prioritise your online privacy and think twice before oversharing.

#PII #Privacy #Cybersecurity

NEW FROM ME: new research has found millions of ex's of personal info, including credit cards, passports, résumés, birth certificates etc in 1 of the largest web-scraped datasets used to train image generation AI models.

It's a major privacy violation.

www.technologyreview.com/2025/07/18/1...

A major AI training data set contains millions of examples of personal data

Personally identifiable information has been found in DataComp CommonPool, one of the largest open-source data sets used to train image generation models.

www.technologyreview.com

July 19, 2025 at 1:38 AM

Reposted by Jevan Hutson

A great @technologyreview.com article quoting from @rachelhong.bsky.social and @willie-agnew.bsky.social!

Millions of images of passports, credit cards, birth certificates, and other documents containing personally identifiable information are likely included in one of the biggest open-source AI training sets, new research has found.

A major AI training data set contains millions of examples of personal data

Personally identifiable information has been found in DataComp CommonPool, one of the largest open-source data sets used to train image generation models.

www.technologyreview.com

July 18, 2025 at 9:57 PM

Reposted by Jevan Hutson

Important new work from @jevan.bsky.social, Yoshi Kohno, & other UW & CMU coauthors.

NEW FROM ME: new research has found millions of ex's of personal info, including credit cards, passports, résumés, birth certificates etc in 1 of the largest web-scraped datasets used to train image generation AI models.

It's a major privacy violation.

www.technologyreview.com/2025/07/18/1...

A major AI training data set contains millions of examples of personal data

Personally identifiable information has been found in DataComp CommonPool, one of the largest open-source data sets used to train image generation models.

www.technologyreview.com

July 18, 2025 at 7:15 PM

Reposted by Jevan Hutson

My response to Ring's decision to roll back reforms and go back to surveillance on behalf of police as a business model.

This is part of a trend of companies bending over backwards to get in on the techno-authoritarian money/power grab that comes with being a lapdog of the carceral state.

Amazon Ring Cashes in on Techno-Authoritarianism and Mass Surveillance

Ring founder Jamie Siminoff is back at the helm of the surveillance doorbell company, and with him is the surveillance-first-privacy-last approach that made Ring one of the most maligned tech devices....

www.eff.org

July 18, 2025 at 4:14 PM

Reposted by Jevan Hutson

Another major AI privacy violation and, again, almost no way for injured individuals to get remedy—or even to know who stole or misused their sensitive personal data.

NEW FROM ME: new research has found millions of ex's of personal info, including credit cards, passports, résumés, birth certificates etc in 1 of the largest web-scraped datasets used to train image generation AI models.

It's a major privacy violation.

www.technologyreview.com/2025/07/18/1...

A major AI training data set contains millions of examples of personal data

Personally identifiable information has been found in DataComp CommonPool, one of the largest open-source data sets used to train image generation models.

www.technologyreview.com

July 18, 2025 at 3:55 PM

Reposted by Jevan Hutson

The bottom line, says @willie-agnew.bsky.social, a postdoctoral fellow in AI ethics at Carnegie Mellon University and one of the coauthors, is that “anything you put online can [be] and probably has been scraped.”

The paper: arxiv.org/pdf/2506.17185

July 18, 2025 at 3:52 PM

Reposted by Jevan Hutson

NEW FROM ME: new research has found millions of ex's of personal info, including credit cards, passports, résumés, birth certificates etc in 1 of the largest web-scraped datasets used to train image generation AI models.

It's a major privacy violation.

www.technologyreview.com/2025/07/18/1...

A major AI training data set contains millions of examples of personal data

Personally identifiable information has been found in DataComp CommonPool, one of the largest open-source data sets used to train image generation models.

www.technologyreview.com

July 18, 2025 at 3:25 PM

Reposted by Jevan Hutson

Super excited and thankful to have Tech Review feature our work!

Millions of images of passports, credit cards, birth certificates, and other documents containing personally identifiable information are likely included in one of the biggest open-source AI training sets, new research has found.

A major AI training data set contains millions of examples of personal data

Personally identifiable information has been found in DataComp CommonPool, one of the largest open-source data sets used to train image generation models.

www.technologyreview.com

July 18, 2025 at 3:51 PM

Reposted by Jevan Hutson

Please join us for the Co-Constructing the Future of Digital Intimacy workshop at #CSCW2025! (with @lucyq.bsky.social, @allismc.bsky.social, @dianafreed.bsky.social, @jevan.bsky.social, @eredmil1.bsky.social, @zahrastardust.bsky.social, @mirandawei.bsky.social ++)

futureofdigitalintimacy.github.io

Co-Constructing the Future of Digital Intimacy

futureofdigitalintimacy.github.io

July 16, 2025 at 8:55 PM

Reposted by Jevan Hutson

Millions of images of passports, credit cards, birth certificates, and other documents containing personally identifiable information are likely included in one of the biggest open-source AI training sets, new research has found.

A major AI training data set contains millions of examples of personal data

Personally identifiable information has been found in DataComp CommonPool, one of the largest open-source data sets used to train image generation models.

www.technologyreview.com

July 18, 2025 at 1:39 PM

Reposted by Jevan Hutson

"Men who sell machines that mimic people want us to become people who mimic machines. They want techno feudal subjects who will believe and do what they’re told. We, as people, are being strategically simplified. This is a fascist process."

organizingmythoughts.org/some-thought...

Some Thoughts on Techno-Fascism From Socialism 2025

"This is the endgame of our isolation."

organizingmythoughts.org

July 5, 2025 at 10:24 AM

Reposted by Jevan Hutson

New paper alert! In a collaboration between computer scientists and legal scholars, we find a significant amount of PII in a common AI training dataset and conduct a legal analysis showing that these issues put web-scale datasets in tension with existing privacy law. [🧵1/N] arxiv.org/abs/2506.17185

A Common Pool of Privacy Problems: Legal and Technical Lessons from a Large-Scale Web-Scraped Machine Learning Dataset

We investigate the contents of web-scraped data for training AI systems, at sizes where human dataset curators and compilers no longer manually annotate every sample. Building off of prior privacy con...

arxiv.org

June 30, 2025 at 9:15 PM

Reposted by Jevan Hutson

Forget Me Not? Machine Unlearning's Implications for Privacy Law: this paper explores the technical challenges, and legal implications, of effectively removing or suppressing personal #data from large, complex models.

http://spkl.io/63325fCCiz

@jevan.bsky.social @cedricwhitney.bsky.social

June 24, 2025 at 3:00 PM

Reposted by Jevan Hutson

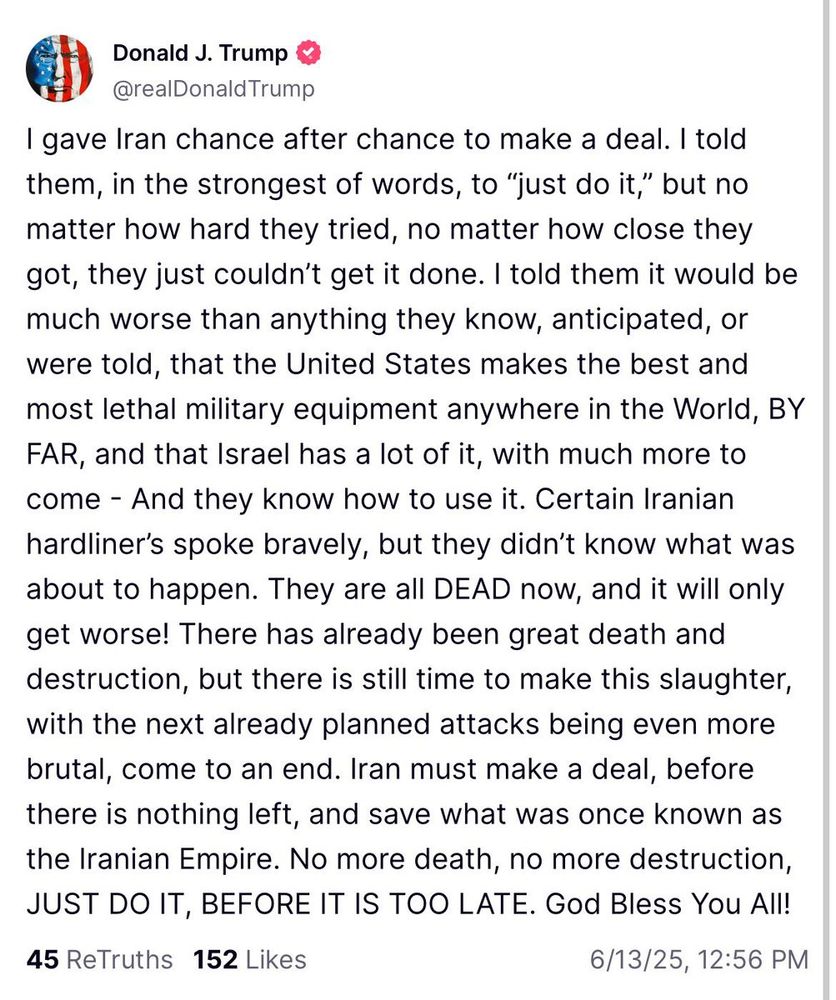

If another world leader had threatened, in the middle of a bombing campaign, that a government "must make a deal, before there is nothing left" -- both scholars and the U.S. government would have labeled it a threat of genocide.

June 14, 2025 at 2:37 AM

Reposted by Jevan Hutson

ICE is going to parks in Los Angeles and kidnapping nannies who are caring for small children

The children are witnessing these kidnappings and being held in custody until their parents are contacted

Women and children in parks

June 14, 2025 at 12:55 AM

Reposted by Jevan Hutson

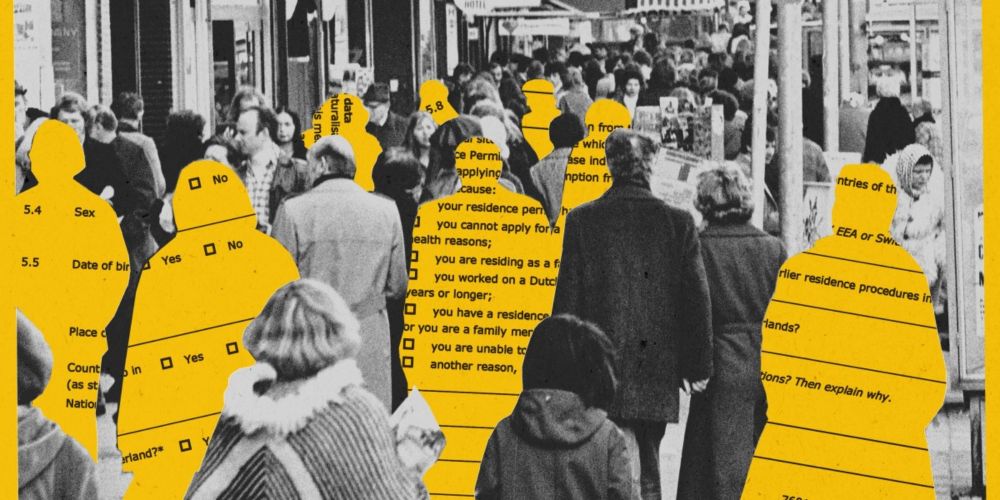

New from me @gabrielgeiger.bsky.social + Justin-Casimir Braun:

Amsterdam believed that it could build a #predictiveAI for welfare fraud that would ALSO be fair, unbiased, & a positive case study for #ResponsibleAI. It didn't work.

Our deep dive why: www.technologyreview.com/2025/06/11/1...

Inside Amsterdam’s high-stakes experiment to create fair welfare AI

The Dutch city thought it could break a decade-long trend of implementing discriminatory algorithms. Its failure raises the question: can these programs ever be fair?

www.technologyreview.com

June 11, 2025 at 5:04 PM

Reposted by Jevan Hutson

Palestinians gunned down while trying to reach food aid site in Gaza, hospital says

Witnesses say Israeli forces opened fire on people near distribution point run by Israel-backed foundation

More than 20 people were killed on Sunday as they went to receive food at an aid distribution point set up by an Israeli-backed foundation in the Gaza Strip, according to a hospital run by the Red Cross that received the bodies.

Witnesses told the Associated Press that Israeli forces had opened fire on people as they headed toward the aid distribution site run by the Gaza Humanitarian Foundation (GHF). “There were many martyrs, including women,” the 40-year-old resident said. “We were about 300 metres away from the military.”

www.theguardian.com

June 1, 2025 at 8:17 AM

Reposted by Jevan Hutson

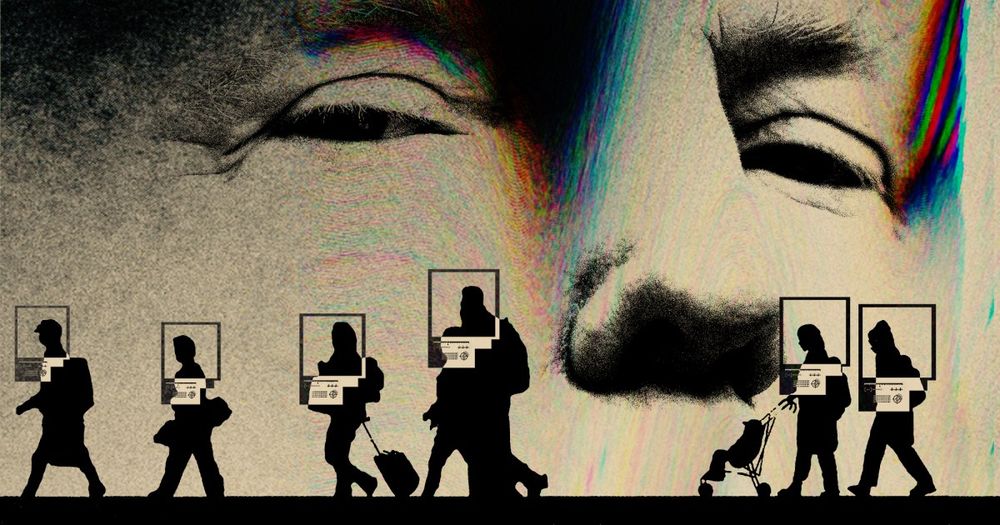

“Thousands of newly obtained documents show” that the founders of Clearview AI—which is backed by Peter Thiel—“always intended to target immigrants & the political left. Now their digital dragnet is in the hands of the Trump admin.” This is incredible reporting by @lukeobrien.bsky.social, 4/2025 1/

The shocking far-right agenda behind the surveillance tech used by ICE and the FBI

Clearview AI’s founders always intended to target immigrants and the political left. Now their digital dragnet is in the hands of the Trump administration.

www.motherjones.com

June 1, 2025 at 5:47 PM

Reposted by Jevan Hutson

The Administration has arrested a judge in WI and a mayor in NJ, and is threatening to arrest federal lawmakers. In any other country we would say the strongman's security forces are locking up political opponents. We need to treat it exactly that way here. www.axios.com/2025/05/10/t...

Trump administration threatens to arrest House Democrats over ICE facility incident

"There will likely be more arrests coming," a Department of Homeland Security spokesperson said.

www.axios.com

May 10, 2025 at 7:48 PM

Reposted by Jevan Hutson

Elon Musk’s AI data centers polluting Black communities so that his Nazi followers can ask his chatbot questions is a perfect distillation of why AI is fascist technology

May 10, 2025 at 10:49 PM
