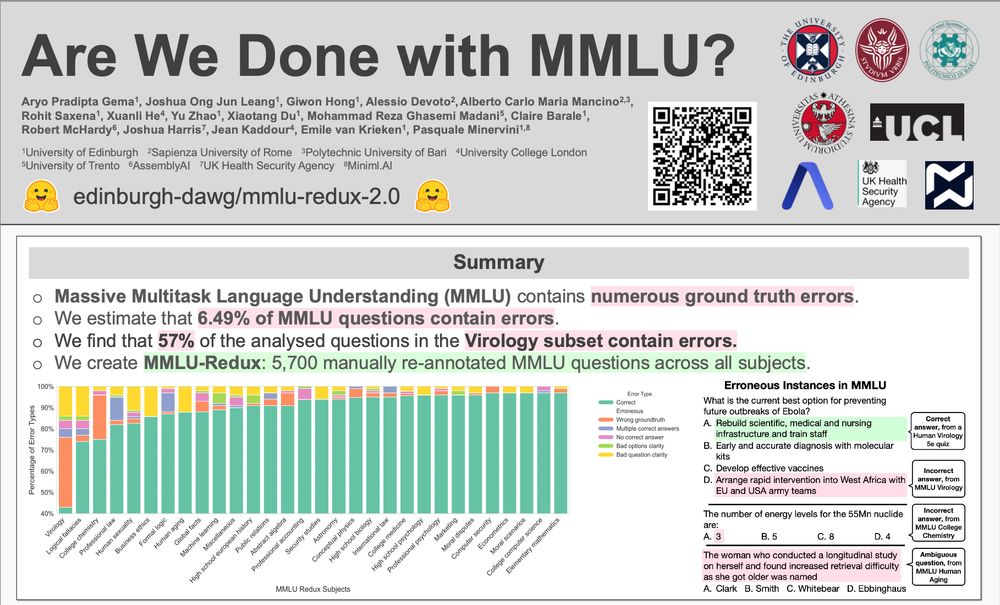

Wish I could be there for our "Are We Done with MMLU?" poster today (9:00-10:30am in Hall 3, Poster Session 7), but visa drama said nope 😅

If anyone's swinging by, give our research some love! Hit me up if you check it out! 👋

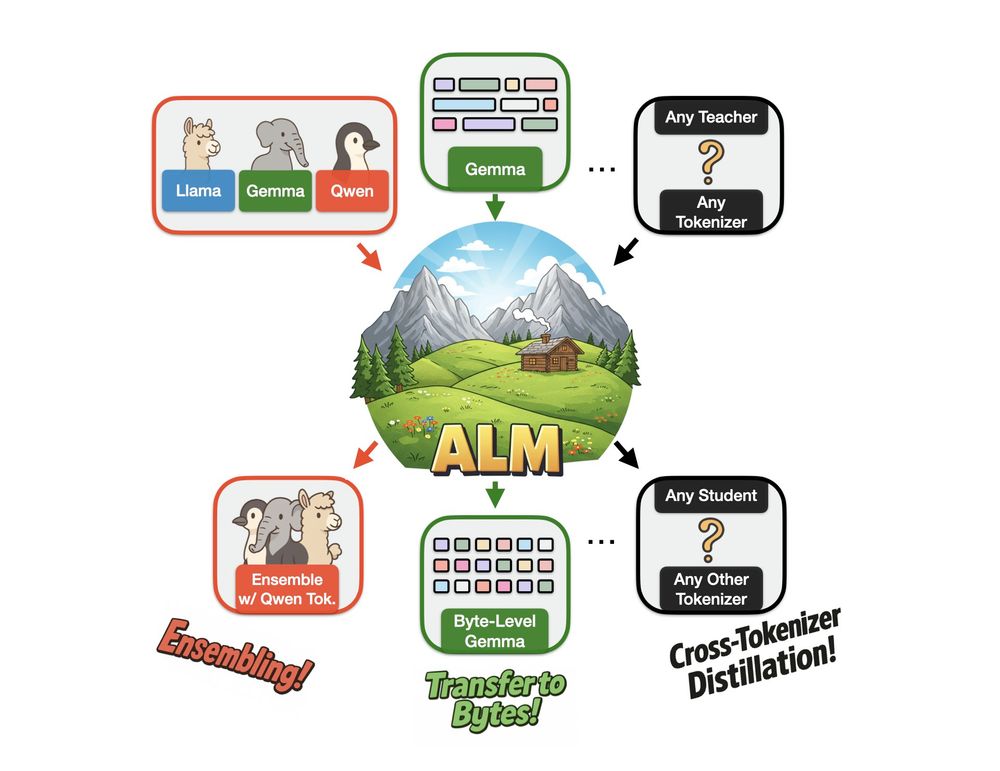

With ALM, you can create ensembles of models from different families, convert existing subword-level models to byte-level and a bunch more🧵

💡Mixtures of In-Context Learners (𝗠𝗼𝗜𝗖𝗟): we treat LLMs prompted with subsets of demonstrations as experts and learn a weighting function to optimise the distribution over the continuation (🧵1/n)
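To make the idea concrete, here is a minimal sketch, not the authors' implementation. It assumes each expert's distribution over continuations has already been computed by prompting the LLM with one subset of demonstrations, and that the weighting function is a plain softmax over one learnable scalar per expert, trained by gradient descent on negative log-likelihood. The names `mixture` and `fit_weights`, the optimiser, and the hyperparameters are all hypothetical choices for illustration.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D vector.
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def mixture(theta, expert_probs):
    # expert_probs: (k, V), one row per expert (assumed precomputed by
    # prompting the LLM with that expert's demonstration subset).
    # Returns the weighted mixture distribution over V continuations.
    return softmax(theta) @ expert_probs

def fit_weights(expert_probs, targets, steps=200, lr=0.5):
    # expert_probs: (n, k, V) expert distributions for n training examples.
    # targets: (n,) integer index of the gold continuation.
    # Learns one scalar per expert (an assumed, simple weighting function).
    n, k, _ = expert_probs.shape
    theta = np.zeros(k)
    # Probability each expert assigns to the gold continuation: shape (n, k).
    p_gold = expert_probs[np.arange(n), :, targets]
    for _ in range(steps):
        w = softmax(theta)
        mix = p_gold @ w                                 # (n,) mixture prob of gold
        grad_w = -(p_gold / mix[:, None]).mean(axis=0)   # d(mean NLL)/dw
        grad_theta = w * (grad_w - w @ grad_w)           # chain rule through softmax
        theta -= lr * grad_theta
    return theta
```

In this sketch, experts whose demonstration subsets lead to better predictions on the training examples end up with larger weights, and unhelpful subsets are down-weighted.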

I'd love to chat about my recent work (DeCoRe, MMLU-Redux, etc.). DM me if you’re around! 👋

DeCoRe: arxiv.org/abs/2410.18860

MMLU-Redux: arxiv.org/abs/2406.04127
