I tried them out myself and the performance is amazing.

On top of that, we just got a fresh batch of H100s as well. At $4.5/hour, the H100 is a clear winner in terms of price/perf compared to the A100.

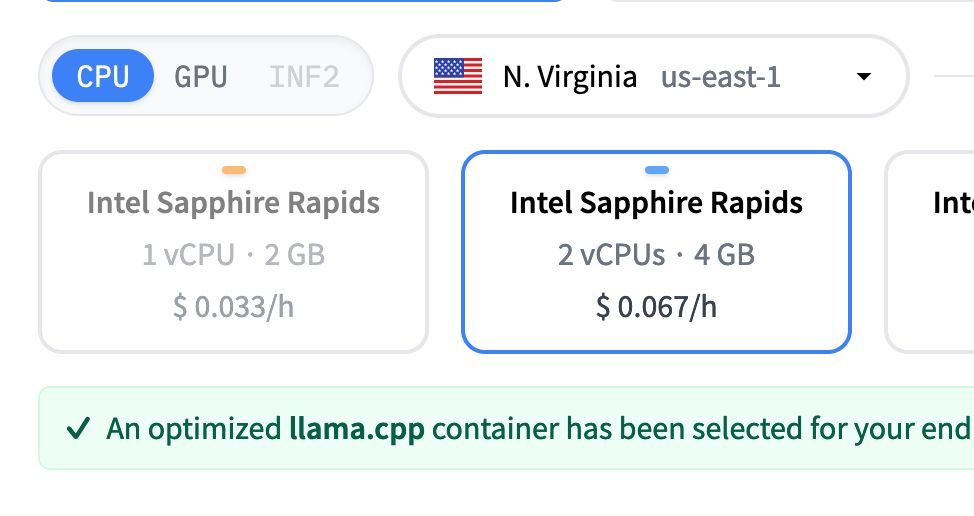

You can deploy it from endpoints directly, with optimally selected hardware and configuration.

Give it a try 👇

10001001110111101000100111111011

and decodes the Intel 8088 assembly from it like:

mov si, bx

mov bx, di

only works on the mov instruction, register to register.

code: github.com/ErikKaum/bit...
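
To make the decoding above concrete, here is a minimal Python sketch. It is not the code from the linked repo; the function name `decode_mov_reg_to_reg` and the table names are made up for illustration. It assumes the standard 8086/8088 encoding of the register-to-register `mov`: a `100010dw` opcode byte followed by a `mod reg r/m` byte with `mod = 11`.

```python
# Register tables indexed by the 3-bit REG / R/M fields (valid when mod == 0b11).
REGS_8BIT = ["al", "cl", "dl", "bl", "ah", "ch", "dh", "bh"]
REGS_16BIT = ["ax", "cx", "dx", "bx", "sp", "bp", "si", "di"]

# Top six bits of the "MOV register/memory to/from register" opcode byte.
MOV_OPCODE = 0b100010


def decode_mov_reg_to_reg(bits: str) -> list[str]:
    """Decode a string of '0'/'1' characters into register-to-register movs.

    Hypothetical helper for illustration; anything other than a
    register-to-register mov raises ValueError.
    """
    if len(bits) % 16 != 0:
        raise ValueError("expected a whole number of 2-byte instructions")

    instructions = []
    for offset in range(0, len(bits), 16):
        first = int(bits[offset:offset + 8], 2)
        second = int(bits[offset + 8:offset + 16], 2)

        opcode = first >> 2
        d = (first >> 1) & 1  # 1: REG field is the destination, 0: REG is the source
        w = first & 1         # 1: 16-bit registers, 0: 8-bit registers
        mod = second >> 6     # 0b11 means both operands are registers
        reg = (second >> 3) & 0b111
        rm = second & 0b111

        if opcode != MOV_OPCODE or mod != 0b11:
            raise ValueError(f"unsupported encoding at bit offset {offset}")

        table = REGS_16BIT if w else REGS_8BIT
        dest, src = (table[reg], table[rm]) if d else (table[rm], table[reg])
        instructions.append(f"mov {dest}, {src}")
    return instructions


print(decode_mov_reg_to_reg("10001001110111101000100111111011"))
# ['mov si, bx', 'mov bx, di']
```

The `d` bit is the part that is easy to get backwards: with `d = 0`, as in both instructions here, the REG field names the source and the R/M field names the destination.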

You should always aim higher, but that easily becomes a state where you're never satisfied. Just reached 10k MRR. Now there's the next goal of 20k.

Sharif has a good talk on this: emotional runway.

How do you deal with this paradox?

video: www.youtube.com/watch?v=zUnQ...

Why is this a huge deal? Llama.cpp is well-known for running very well on CPU. If you're running small models like Llama 1B or embedding models, this will definitely save tons of money 💰 💰

SmolVLM can be fine-tuned on Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

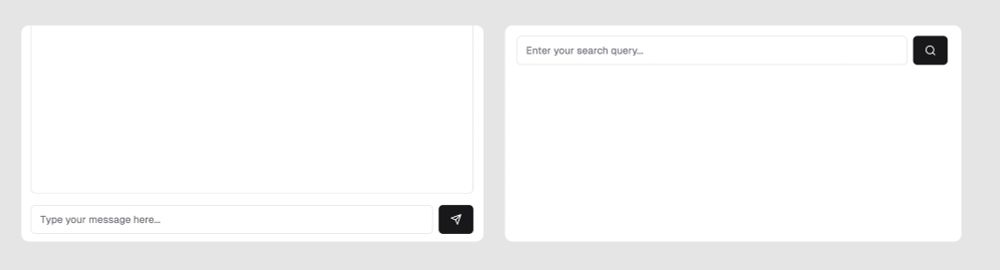

I've noticed that Perplexity positions the question at the top and generates the text below.

Is it because they want to position themselves more as a search engine?

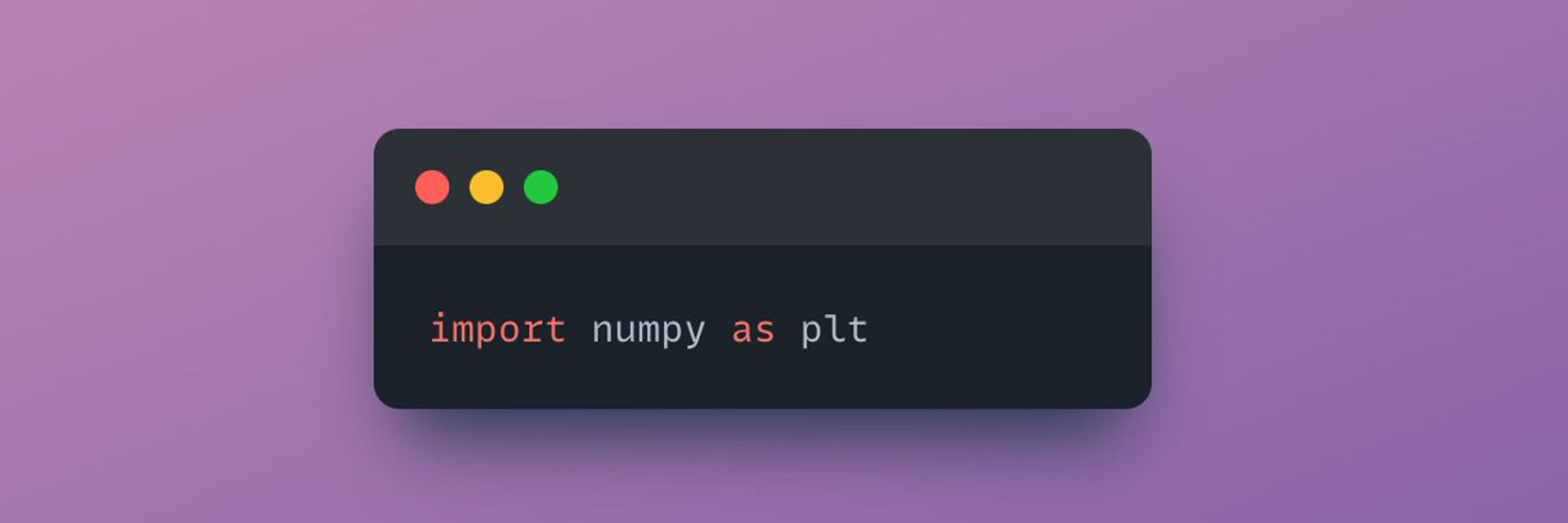

in plain english i'd say "2 conversions at the same time"

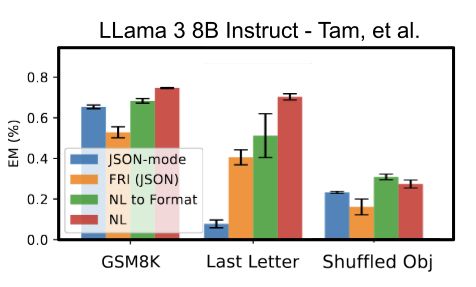

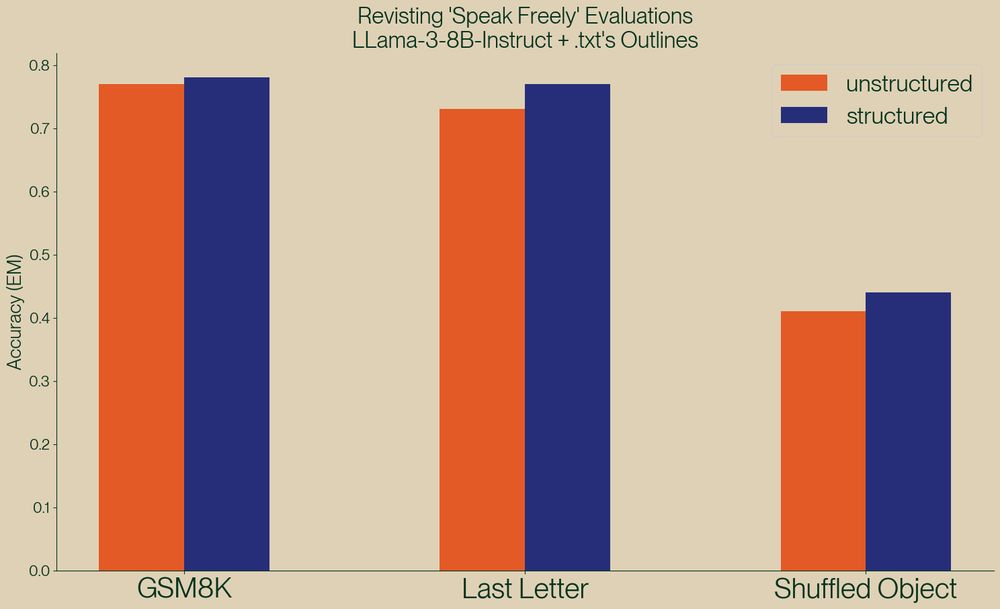

Well, we've taken a look and found serious issues in this paper, and shown, once again, that structured generation *improves* evaluation performance!

Damn, I had almost forgotten how enjoyable it is to program in.

Just breezing through the code. If I need thousands of threads, it’s just there.

To build a secure, fast, and lightweight sandbox for code execution — ideal for running LLM-generated Python code.

- Send code simply as a POST request

- 1-2ms startup times

github.com/ErikKaum/run...

huggingface-projects-docs-llms-txt.hf.space/transformers...

Same applies imo to coding and why it's so important to open your editor, start tinkering and sketching things out.

quote from: paulgraham.com/writes.html
