David Holzmüller

@dholzmueller.bsky.social

Postdoc in machine learning with Francis Bach &

@GaelVaroquaux: neural networks, tabular data, uncertainty, active learning, atomistic ML, learning theory.

https://dholzmueller.github.io

@GaelVaroquaux: neural networks, tabular data, uncertainty, active learning, atomistic ML, learning theory.

https://dholzmueller.github.io

Pinned

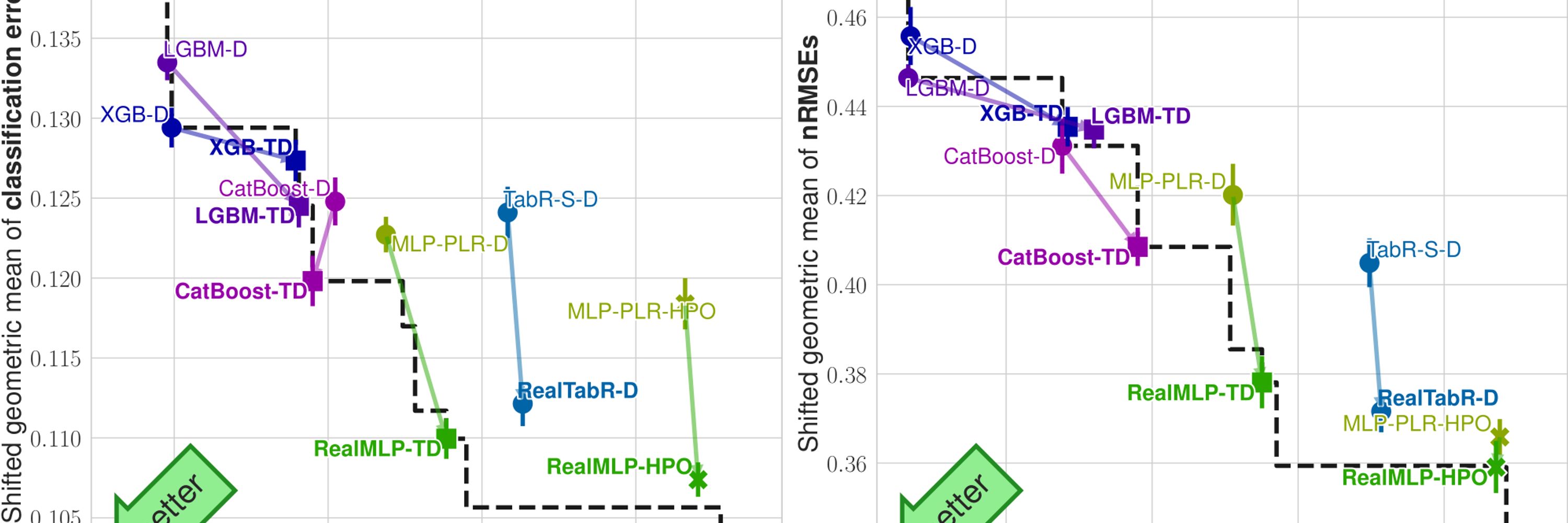

Can deep learning finally compete with boosted trees on tabular data? 🌲

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

Reposted by David Holzmüller

Reposted by David Holzmüller

Daniel Beaglehole, David Holzm\"uller, Adityanarayanan Radhakrishnan, Mikhail Belkin: xRFM: Accurate, scalable, and interpretable feature learning models for tabular data https://arxiv.org/abs/2508.10053 https://arxiv.org/pdf/2508.10053 https://arxiv.org/html/2508.10053

August 15, 2025 at 6:32 AM

Daniel Beaglehole, David Holzm\"uller, Adityanarayanan Radhakrishnan, Mikhail Belkin: xRFM: Accurate, scalable, and interpretable feature learning models for tabular data https://arxiv.org/abs/2508.10053 https://arxiv.org/pdf/2508.10053 https://arxiv.org/html/2508.10053

I got 3rd out of 691 in a tabular kaggle competition – with only neural networks! 🥉

My solution is short (48 LOC) and relatively general-purpose – I used skrub to preprocess string and date columns, and pytabkit to create an ensemble of RealMLP and TabM models. Link below👇

My solution is short (48 LOC) and relatively general-purpose – I used skrub to preprocess string and date columns, and pytabkit to create an ensemble of RealMLP and TabM models. Link below👇

July 29, 2025 at 11:10 AM

I got 3rd out of 691 in a tabular kaggle competition – with only neural networks! 🥉

My solution is short (48 LOC) and relatively general-purpose – I used skrub to preprocess string and date columns, and pytabkit to create an ensemble of RealMLP and TabM models. Link below👇

My solution is short (48 LOC) and relatively general-purpose – I used skrub to preprocess string and date columns, and pytabkit to create an ensemble of RealMLP and TabM models. Link below👇

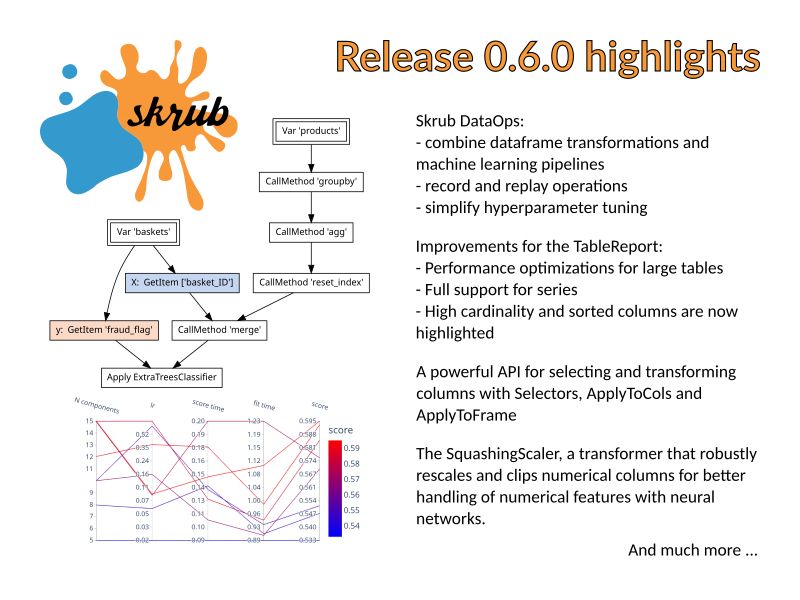

Excited to have co-contributed the SquashingScaler, which implements the robust numerical preprocessing from RealMLP!

⚡ Release 0.6.0 is now out! ⚡

🚀 Major update! Skrub DataOps, various improvements for the TableReport, new tools for applying transformers to the columns, and a new robust transformer for numerical features are only some of the features included in this release.

🚀 Major update! Skrub DataOps, various improvements for the TableReport, new tools for applying transformers to the columns, and a new robust transformer for numerical features are only some of the features included in this release.

July 24, 2025 at 4:00 PM

Excited to have co-contributed the SquashingScaler, which implements the robust numerical preprocessing from RealMLP!

Reposted by David Holzmüller

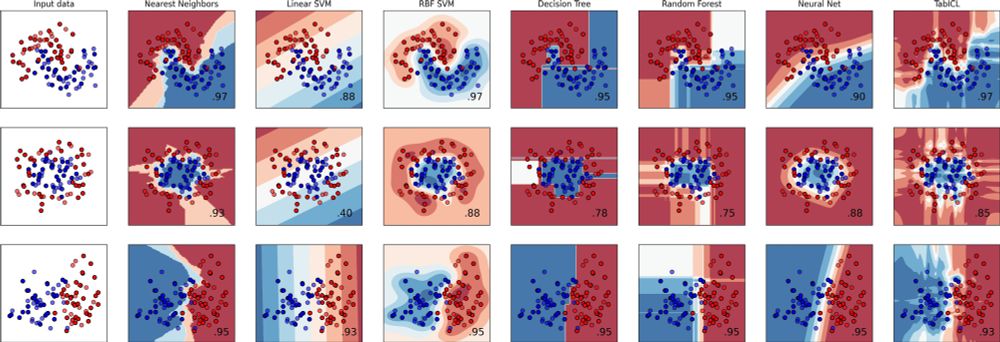

👨🎓🧾✨#icml2025 Paper: TabICL, A Tabular Foundation Model for In-Context Learning on Large Data

With Jingang Qu, @dholzmueller.bsky.social, and Marine Le Morvan

TL;DR: a well-designed architecture and pretraining gives best tabular learner, and more scalable

On top, it's 100% open source

1/9

With Jingang Qu, @dholzmueller.bsky.social, and Marine Le Morvan

TL;DR: a well-designed architecture and pretraining gives best tabular learner, and more scalable

On top, it's 100% open source

1/9

July 9, 2025 at 6:42 PM

👨🎓🧾✨#icml2025 Paper: TabICL, A Tabular Foundation Model for In-Context Learning on Large Data

With Jingang Qu, @dholzmueller.bsky.social, and Marine Le Morvan

TL;DR: a well-designed architecture and pretraining gives best tabular learner, and more scalable

On top, it's 100% open source

1/9

With Jingang Qu, @dholzmueller.bsky.social, and Marine Le Morvan

TL;DR: a well-designed architecture and pretraining gives best tabular learner, and more scalable

On top, it's 100% open source

1/9

Reposted by David Holzmüller

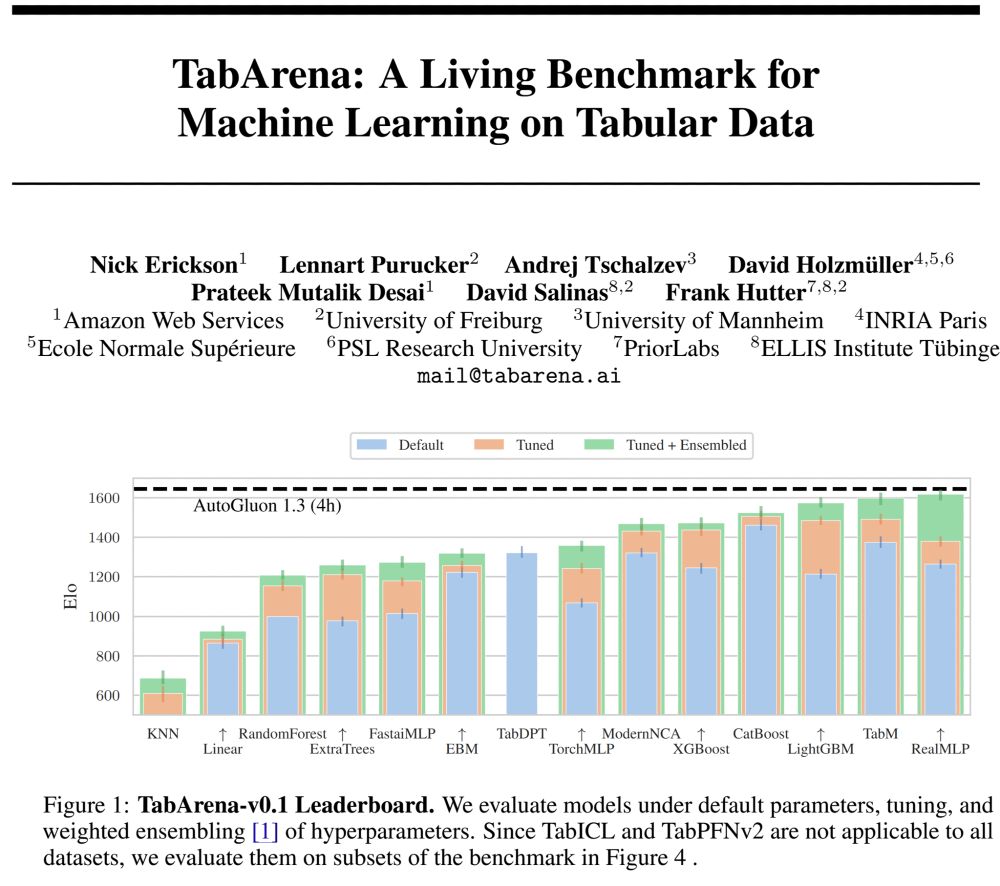

🚨What is SOTA on tabular data, really? We are excited to announce 𝗧𝗮𝗯𝗔𝗿𝗲𝗻𝗮, a living benchmark for machine learning on IID tabular data with:

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

June 23, 2025 at 10:15 AM

🚨What is SOTA on tabular data, really? We are excited to announce 𝗧𝗮𝗯𝗔𝗿𝗲𝗻𝗮, a living benchmark for machine learning on IID tabular data with:

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

Reposted by David Holzmüller

Missed the school? We have uploaded recordings of most talks to our YouTube Channel www.youtube.com/@AutoML_org 🙌

AutoML Freiburg Hannover Tübingen

This channel features videos about automated machine learning (AutoML) from the AutoML groups at the University of Freiburg, Leibniz University Hannover and University of Tübingen. Common topics inclu...

www.youtube.com

June 20, 2025 at 3:36 PM

Missed the school? We have uploaded recordings of most talks to our YouTube Channel www.youtube.com/@AutoML_org 🙌

Reposted by David Holzmüller

📝 The skrub TextEncoder brings the power of HuggingFace language models to embed text features in tabular machine learning, for all those use cases that involve text-based columns.

May 28, 2025 at 8:43 AM

📝 The skrub TextEncoder brings the power of HuggingFace language models to embed text features in tabular machine learning, for all those use cases that involve text-based columns.

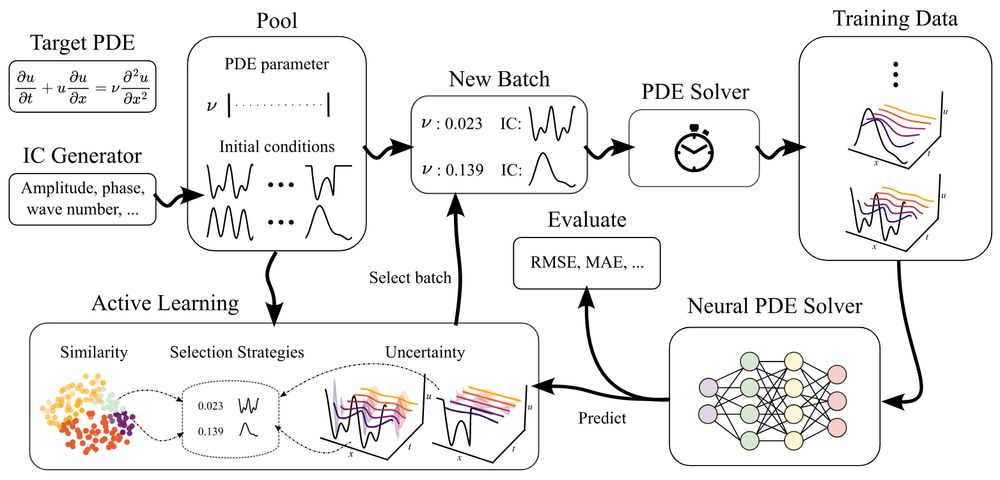

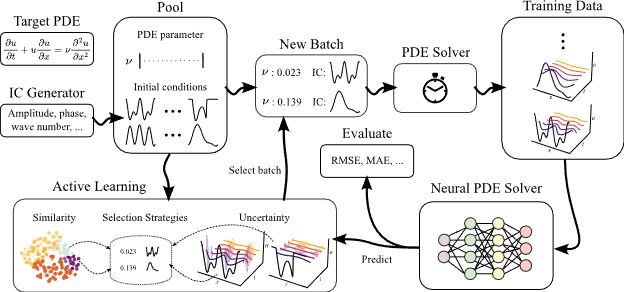

🚨ICLR poster in 1.5 hours, presented by @danielmusekamp.bsky.social :

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures and many ablations - transferability, speed, etc.!

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures and many ablations - transferability, speed, etc.!

April 24, 2025 at 12:38 AM

🚨ICLR poster in 1.5 hours, presented by @danielmusekamp.bsky.social :

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures and many ablations - transferability, speed, etc.!

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures and many ablations - transferability, speed, etc.!

Reposted by David Holzmüller

The Skrub TableReport is a lightweight tool that allows to get a rich overview of a table quickly and easily.

✅ Filter columns

🔎 Look at each column's distribution

📊 Get a high level view of the distributions through stats and plots, including correlated columns

🌐 Export the report as html

✅ Filter columns

🔎 Look at each column's distribution

📊 Get a high level view of the distributions through stats and plots, including correlated columns

🌐 Export the report as html

April 23, 2025 at 11:49 AM

The Skrub TableReport is a lightweight tool that allows to get a rich overview of a table quickly and easily.

✅ Filter columns

🔎 Look at each column's distribution

📊 Get a high level view of the distributions through stats and plots, including correlated columns

🌐 Export the report as html

✅ Filter columns

🔎 Look at each column's distribution

📊 Get a high level view of the distributions through stats and plots, including correlated columns

🌐 Export the report as html

Reposted by David Holzmüller

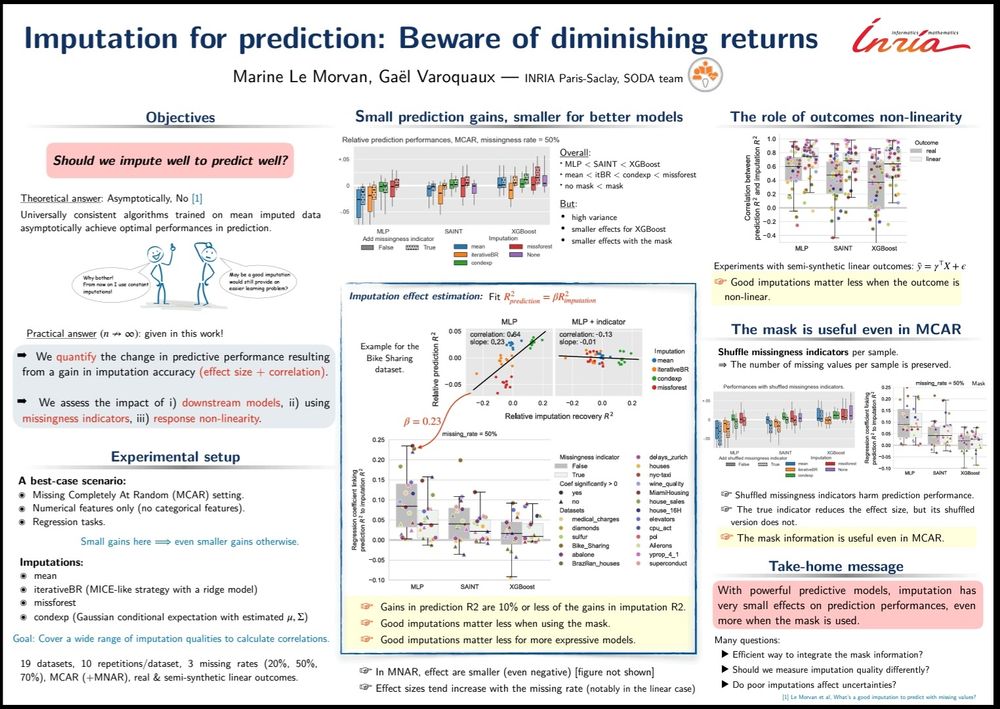

#ICLR2025 Marine Le Morvan presents "Imputation for prediction: beware of diminishing returns": poster Thu 24th

arxiv.org/abs/2407.19804

Concludes 6 years of research on prediction with missing values: Imputation is useful but improvements are expensive, while better learners yield easier gains.

arxiv.org/abs/2407.19804

Concludes 6 years of research on prediction with missing values: Imputation is useful but improvements are expensive, while better learners yield easier gains.

April 23, 2025 at 7:55 AM

#ICLR2025 Marine Le Morvan presents "Imputation for prediction: beware of diminishing returns": poster Thu 24th

arxiv.org/abs/2407.19804

Concludes 6 years of research on prediction with missing values: Imputation is useful but improvements are expensive, while better learners yield easier gains.

arxiv.org/abs/2407.19804

Concludes 6 years of research on prediction with missing values: Imputation is useful but improvements are expensive, while better learners yield easier gains.

Reposted by David Holzmüller

Excited to share the new monthly Table Representation Learning (TRL) Seminar under the ELLIS Amsterdam TRL research theme! To recur every 2nd Friday.

Who: Marine Le Morvan, Inria (in-person)

When: Friday 11 April 4-5pm (+drinks)

Where: L3.36 Lab42 Science Park / Zoom

trl-lab.github.io/trl-seminar/

Who: Marine Le Morvan, Inria (in-person)

When: Friday 11 April 4-5pm (+drinks)

Where: L3.36 Lab42 Science Park / Zoom

trl-lab.github.io/trl-seminar/

April 2, 2025 at 9:42 AM

Excited to share the new monthly Table Representation Learning (TRL) Seminar under the ELLIS Amsterdam TRL research theme! To recur every 2nd Friday.

Who: Marine Le Morvan, Inria (in-person)

When: Friday 11 April 4-5pm (+drinks)

Where: L3.36 Lab42 Science Park / Zoom

trl-lab.github.io/trl-seminar/

Who: Marine Le Morvan, Inria (in-person)

When: Friday 11 April 4-5pm (+drinks)

Where: L3.36 Lab42 Science Park / Zoom

trl-lab.github.io/trl-seminar/

Reposted by David Holzmüller

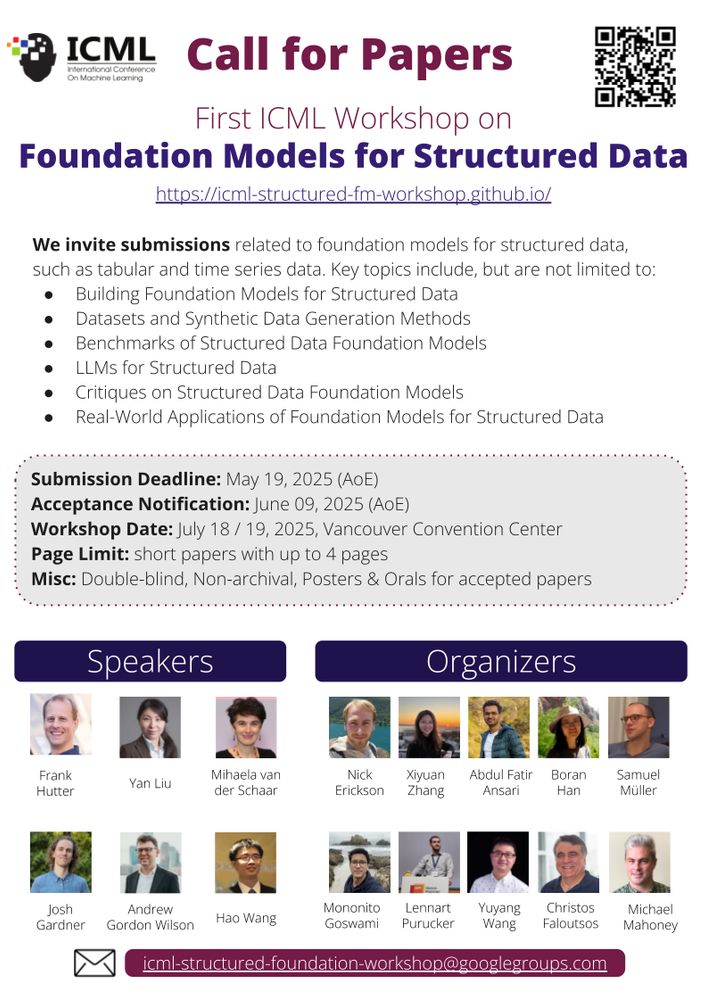

We are excited to announce #FMSD: "1st Workshop on Foundation Models for Structured Data" has been accepted to #ICML 2025!

Call for Papers: icml-structured-fm-workshop.github.io

Call for Papers: icml-structured-fm-workshop.github.io

March 25, 2025 at 5:59 PM

We are excited to announce #FMSD: "1st Workshop on Foundation Models for Structured Data" has been accepted to #ICML 2025!

Call for Papers: icml-structured-fm-workshop.github.io

Call for Papers: icml-structured-fm-workshop.github.io

Reposted by David Holzmüller

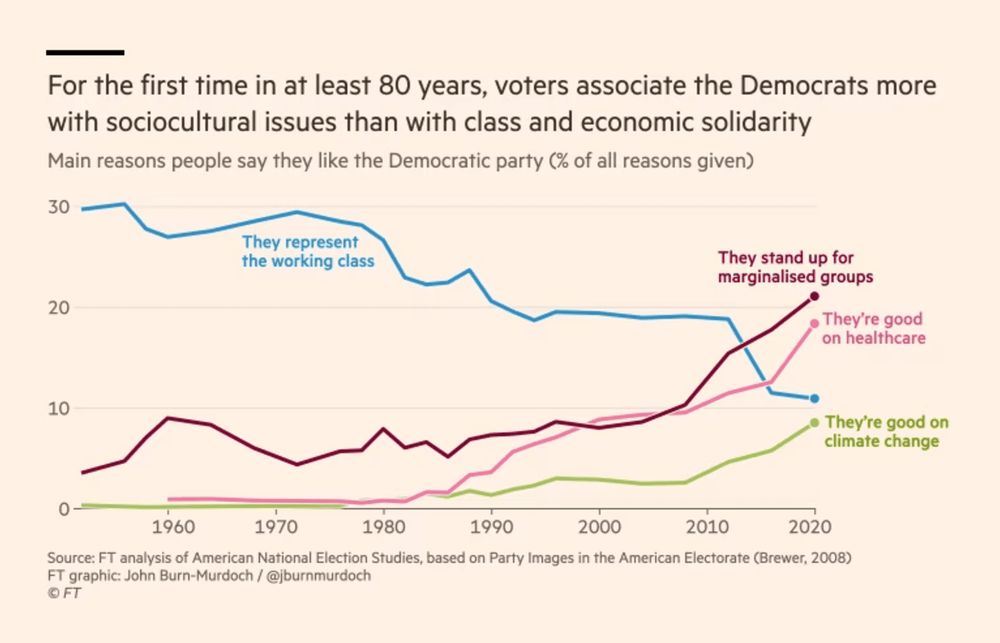

Trying something new:

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

November 20, 2024 at 5:09 PM

Trying something new:

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

Reposted by David Holzmüller

🚀Continuing the spotlight series with the next @iclr-conf.bsky.social MLMP 2025 Oral presentation!

📝LOGLO-FNO: Efficient Learning of Local and Global Features in Fourier Neural Operators

📷 Join us on April 27 at #ICLR2025!

#AI #ML #ICLR #AI4Science

📝LOGLO-FNO: Efficient Learning of Local and Global Features in Fourier Neural Operators

📷 Join us on April 27 at #ICLR2025!

#AI #ML #ICLR #AI4Science

March 18, 2025 at 8:12 AM

🚀Continuing the spotlight series with the next @iclr-conf.bsky.social MLMP 2025 Oral presentation!

📝LOGLO-FNO: Efficient Learning of Local and Global Features in Fourier Neural Operators

📷 Join us on April 27 at #ICLR2025!

#AI #ML #ICLR #AI4Science

📝LOGLO-FNO: Efficient Learning of Local and Global Features in Fourier Neural Operators

📷 Join us on April 27 at #ICLR2025!

#AI #ML #ICLR #AI4Science

Practitioners are often sceptical of academic tabular benchmarks, so I am elated to see that our RealMLP model outperformed boosted trees in two 2nd place Kaggle solutions, for a $10,000 forecasting challenge and a research competition on survival analysis.

Rohlik Sales Forecasting Challenge

Use historical product sales data to predict future sales.

www.kaggle.com

March 10, 2025 at 3:53 PM

Practitioners are often sceptical of academic tabular benchmarks, so I am elated to see that our RealMLP model outperformed boosted trees in two 2nd place Kaggle solutions, for a $10,000 forecasting challenge and a research competition on survival analysis.

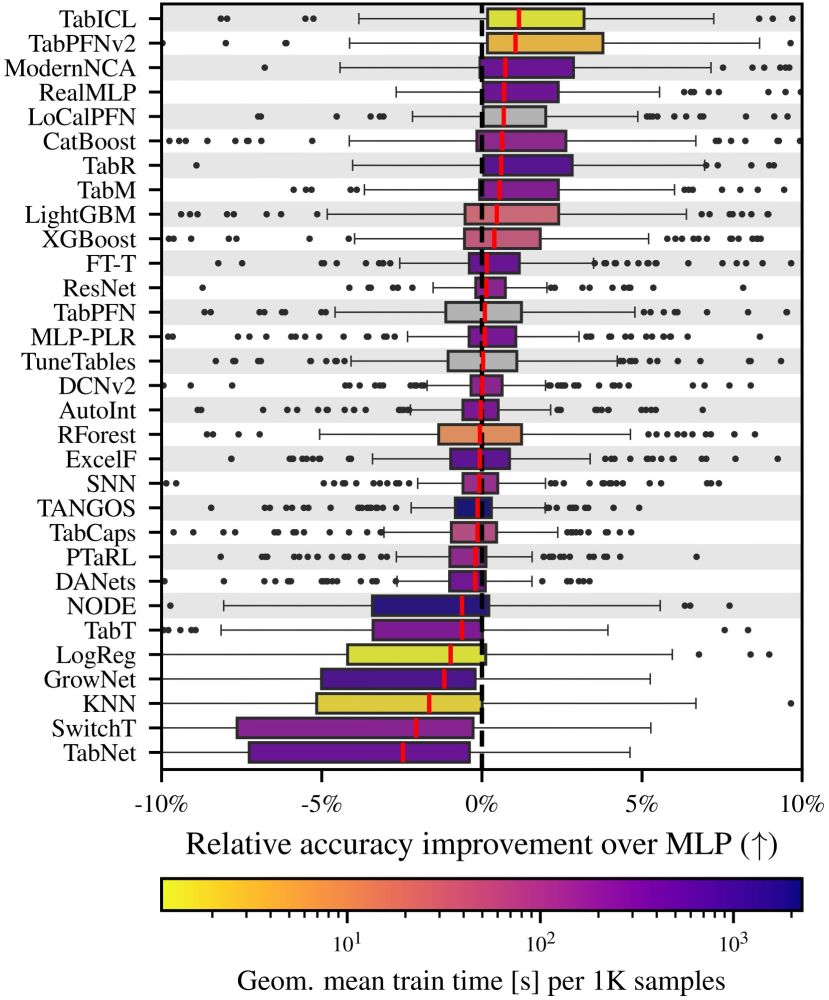

A new tabular classification benchmark provides another independent evaluation of our RealMLP. RealMLP is the best classical DL model, although some other recent baselines are missing. TabPFN is better on small datasets and boosted trees on larger datasets, though.

March 4, 2025 at 2:25 PM

A new tabular classification benchmark provides another independent evaluation of our RealMLP. RealMLP is the best classical DL model, although some other recent baselines are missing. TabPFN is better on small datasets and boosted trees on larger datasets, though.

Reposted by David Holzmüller

Learning rate schedules seem mysterious? Why is the loss going down so fast during cooldown?

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

The Surprising Agreement Between Convex Optimization Theory and Learning-Rate Scheduling for Large Model Training

We show that learning-rate schedules for large model training behave surprisingly similar to a performance bound from non-smooth convex optimization theory. We provide a bound for the constant schedul...

arxiv.org

February 5, 2025 at 10:13 AM

Learning rate schedules seem mysterious? Why is the loss going down so fast during cooldown?

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

Reposted by David Holzmüller

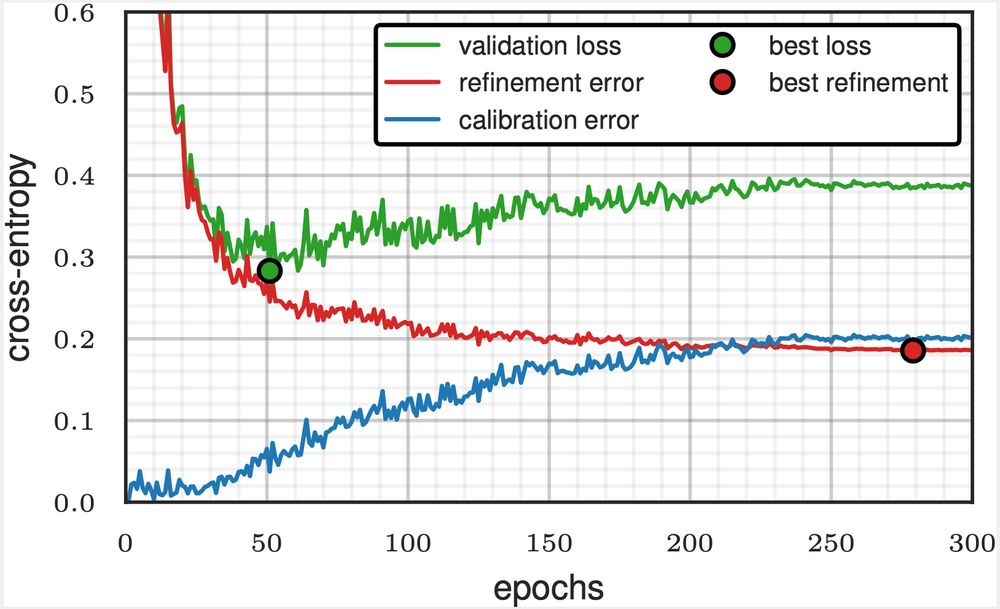

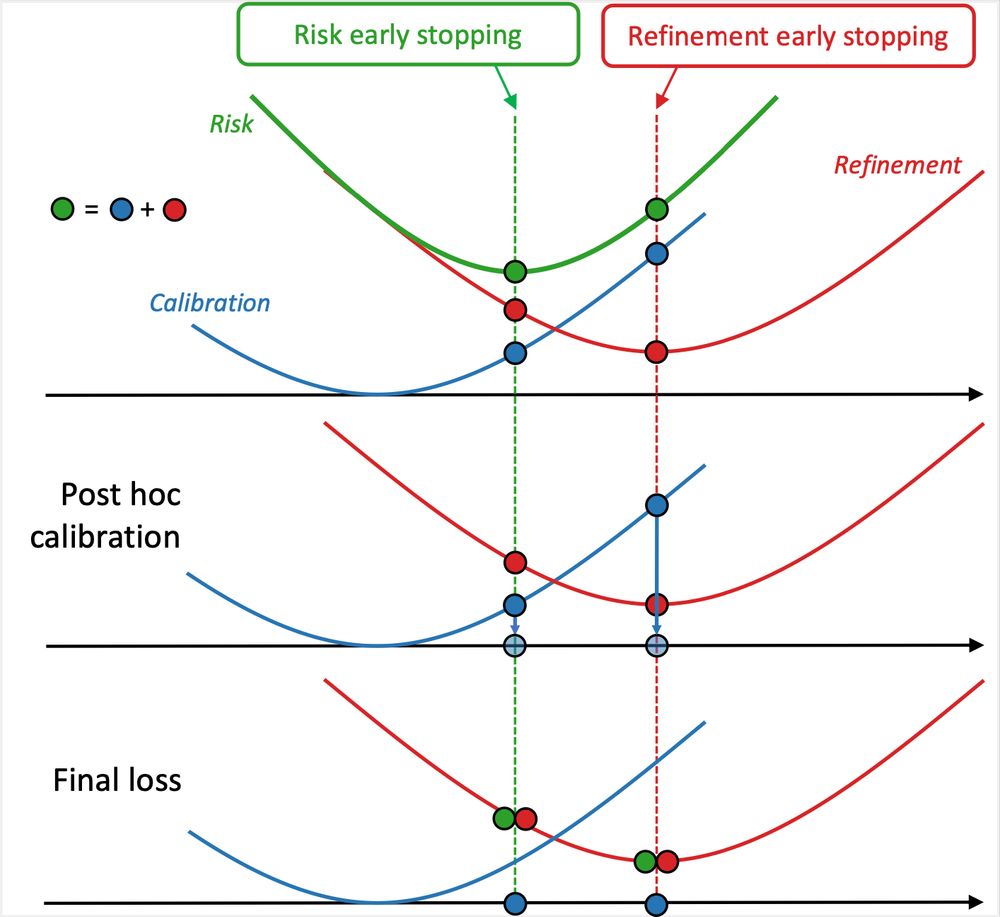

Early stopping on validation loss? This leads to suboptimal calibration and refinement errors—but you can do better!

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we propose a method that integrates with any model and boosts classification performance across tasks.

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we propose a method that integrates with any model and boosts classification performance across tasks.

February 3, 2025 at 1:03 PM

Early stopping on validation loss? This leads to suboptimal calibration and refinement errors—but you can do better!

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we propose a method that integrates with any model and boosts classification performance across tasks.

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we propose a method that integrates with any model and boosts classification performance across tasks.

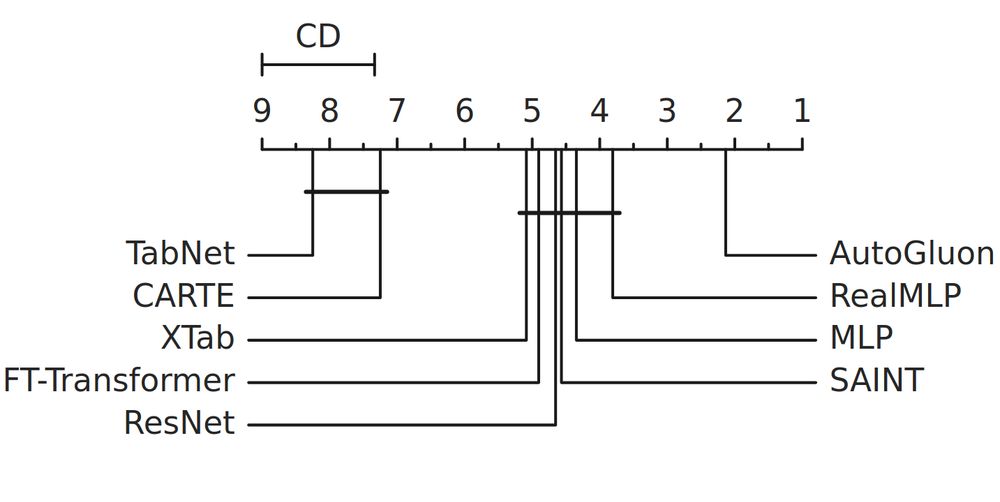

The first independent evaluation of our RealMLP is here!

On a recent 300-dataset benchmark with many baselines, RealMLP takes a shared first place overall. 🔥

Importantly, RealMLP is also relatively CPU-friendly, unlike other SOTA DL models (including TabPFNv2 and TabM). 🧵 1/

On a recent 300-dataset benchmark with many baselines, RealMLP takes a shared first place overall. 🔥

Importantly, RealMLP is also relatively CPU-friendly, unlike other SOTA DL models (including TabPFNv2 and TabM). 🧵 1/

January 16, 2025 at 12:05 PM

The first independent evaluation of our RealMLP is here!

On a recent 300-dataset benchmark with many baselines, RealMLP takes a shared first place overall. 🔥

Importantly, RealMLP is also relatively CPU-friendly, unlike other SOTA DL models (including TabPFNv2 and TabM). 🧵 1/

On a recent 300-dataset benchmark with many baselines, RealMLP takes a shared first place overall. 🔥

Importantly, RealMLP is also relatively CPU-friendly, unlike other SOTA DL models (including TabPFNv2 and TabM). 🧵 1/

Reposted by David Holzmüller

Join us on 27 Feb in Amsterdam for the ELLIS workshop on Representation Learning and Generative Models for Structured Data ✨

sites.google.com/view/rl-and-...

Inspiring talks by @eisenjulian.bsky.social, @neuralnoise.com, Frank Hutter, Vaishali Pal, TBC.

We welcome extended abstracts until 31 Jan!

sites.google.com/view/rl-and-...

Inspiring talks by @eisenjulian.bsky.social, @neuralnoise.com, Frank Hutter, Vaishali Pal, TBC.

We welcome extended abstracts until 31 Jan!

January 7, 2025 at 6:46 PM

Join us on 27 Feb in Amsterdam for the ELLIS workshop on Representation Learning and Generative Models for Structured Data ✨

sites.google.com/view/rl-and-...

Inspiring talks by @eisenjulian.bsky.social, @neuralnoise.com, Frank Hutter, Vaishali Pal, TBC.

We welcome extended abstracts until 31 Jan!

sites.google.com/view/rl-and-...

Inspiring talks by @eisenjulian.bsky.social, @neuralnoise.com, Frank Hutter, Vaishali Pal, TBC.

We welcome extended abstracts until 31 Jan!

Reposted by David Holzmüller

My book is (at last) out, just in time for Christmas!

A blog post to celebrate and present it: francisbach.com/my-book-is-o...

A blog post to celebrate and present it: francisbach.com/my-book-is-o...

December 21, 2024 at 3:23 PM

My book is (at last) out, just in time for Christmas!

A blog post to celebrate and present it: francisbach.com/my-book-is-o...

A blog post to celebrate and present it: francisbach.com/my-book-is-o...

I'll present our paper in the afternoon poster session at 4:30pm - 7:30 pm in East Exhibit Hall A-C, poster 3304!

Can deep learning finally compete with boosted trees on tabular data? 🌲

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

December 12, 2024 at 6:54 PM

I'll present our paper in the afternoon poster session at 4:30pm - 7:30 pm in East Exhibit Hall A-C, poster 3304!

We wrote a benchmark paper with many practical insights on (the benefits of) active learning for training neural PDE solvers. 🚀

I was happy to be a co-advisor on this project - most of the credit goes to Daniel and Marimuthu.

I was happy to be a co-advisor on this project - most of the credit goes to Daniel and Marimuthu.

Neural surrogates can accelerate PDE solving but need expensive ground-truth training data. Can we reduce the training data size with active learning (AL)? In our NeurIPS D3S3 poster, we introduce AL4PDE, an extensible AL benchmark for autoregressive neural PDE solvers. 🧵

December 11, 2024 at 6:50 PM

We wrote a benchmark paper with many practical insights on (the benefits of) active learning for training neural PDE solvers. 🚀

I was happy to be a co-advisor on this project - most of the credit goes to Daniel and Marimuthu.

I was happy to be a co-advisor on this project - most of the credit goes to Daniel and Marimuthu.

Reposted by David Holzmüller

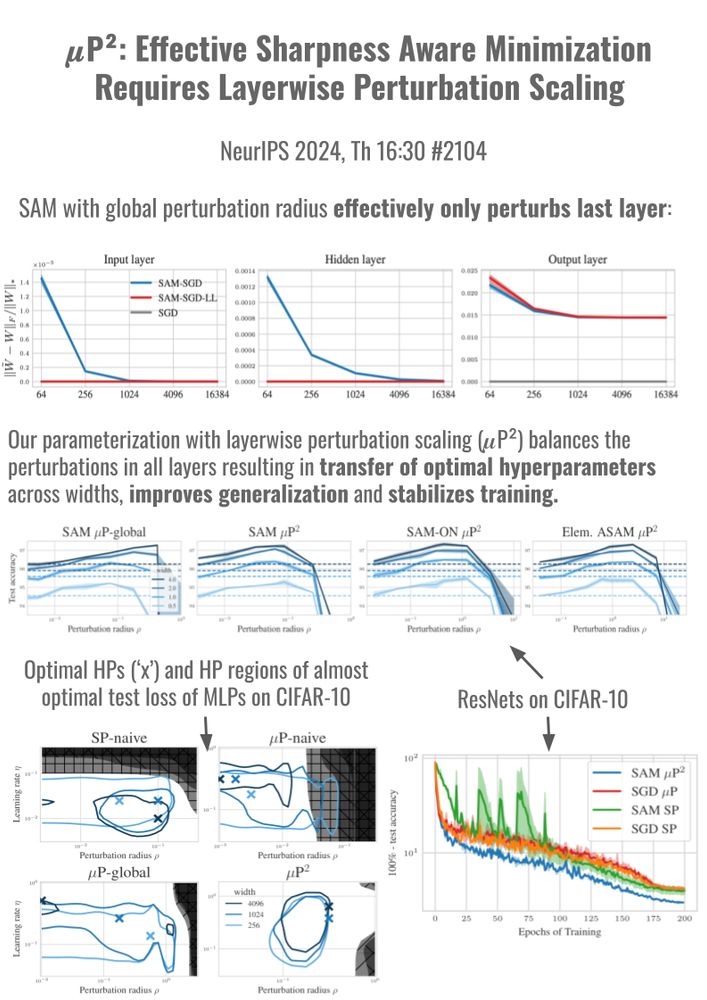

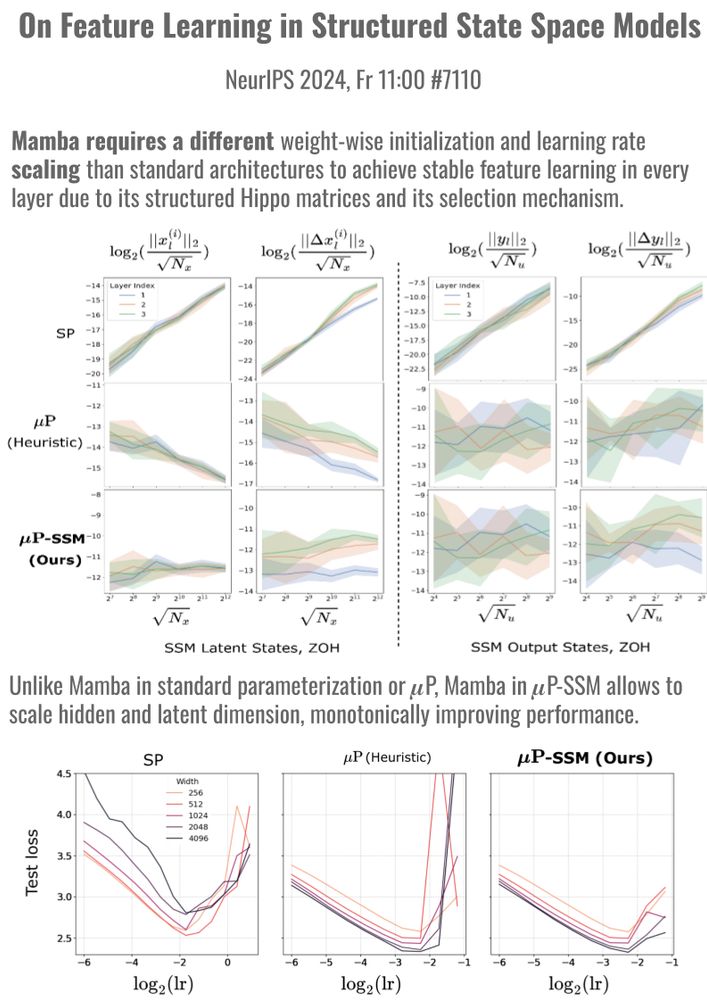

Stable model scaling with width-independent dynamics?

Thrilled to present 2 papers at #NeurIPS 🎉 that study width-scaling in Sharpness Aware Minimization (SAM) (Th 16:30, #2104) and in Mamba (Fr 11, #7110). Our scaling rules stabilize training and transfer optimal hyperparams across scales.

🧵 1/10

Thrilled to present 2 papers at #NeurIPS 🎉 that study width-scaling in Sharpness Aware Minimization (SAM) (Th 16:30, #2104) and in Mamba (Fr 11, #7110). Our scaling rules stabilize training and transfer optimal hyperparams across scales.

🧵 1/10

December 10, 2024 at 7:08 AM

Stable model scaling with width-independent dynamics?

Thrilled to present 2 papers at #NeurIPS 🎉 that study width-scaling in Sharpness Aware Minimization (SAM) (Th 16:30, #2104) and in Mamba (Fr 11, #7110). Our scaling rules stabilize training and transfer optimal hyperparams across scales.

🧵 1/10

Thrilled to present 2 papers at #NeurIPS 🎉 that study width-scaling in Sharpness Aware Minimization (SAM) (Th 16:30, #2104) and in Mamba (Fr 11, #7110). Our scaling rules stabilize training and transfer optimal hyperparams across scales.

🧵 1/10