CTO at Multiplayer.app: full-stack session recordings to seamlessly capture and resolve issues or develop new features.

Also: 🤖 robot builder 🏃♂️ runner 🎸 guitar player

You can schedule a call with me here: cal.com/multiplayer/...

I’m building the only tool that gives teams full-stack session replays out of the box. All correlated, all AI-ready.

But if getting there feels harder than it should, that’s on me.

www.multiplayer.app/blog/multipl...

No more alerts without answers.

No more “let me feed this to AI and see what happens.”

Just actionable suggestions that know your system state.

What if your system could automatically turn errors into PRs?

So for 2026 we’re building the Multiplayer AI agent:

Instead of manually exporting session data into separate AI tools,

you’ll receive pull requests with suggested fixes automatically, grounded in real production context.

✔️ Including full request/response content and headers from internal services and external dependencies

✔️ Making that context available to AI workflows via the MCP server

But we didn’t stop at making data accessible.

If my background in neural nets has taught me anything, it’s that data is everything. That’s why our 2025 focus was on capturing the right data in the right structure:

As CTO of a startup building with AI, I keep coming back to this question. I shared my thoughts in this LeadDev article 👇

leaddev.com/ai/are-smart...

You can also create free notebooks on Multiplayer to test your real-world API integrations, chaining your APIs and code snippets for realistic workflows.

beyondruntime.substack.com/p/the-hidden...

Bad news: that pause is expensive.

Good news: this is preventable.

Better news: what makes your APIs testable also makes your systems more observable.

beyondruntime.substack.com/p/logs-that-...

The trick is making those logs useful, faster.

• how to let tools like ChatGPT, Claude, or Gemini interact with your apps safely

• how to turn small AI experiments into prod features

• how to keep costs predictable

• and how to put the right guardrails in place

…then you’ll get a lot out of this panel.

(That’s what we do at Multiplayer with full stack session recordings 😉)

beyondruntime.substack.com/p/from-red-a...

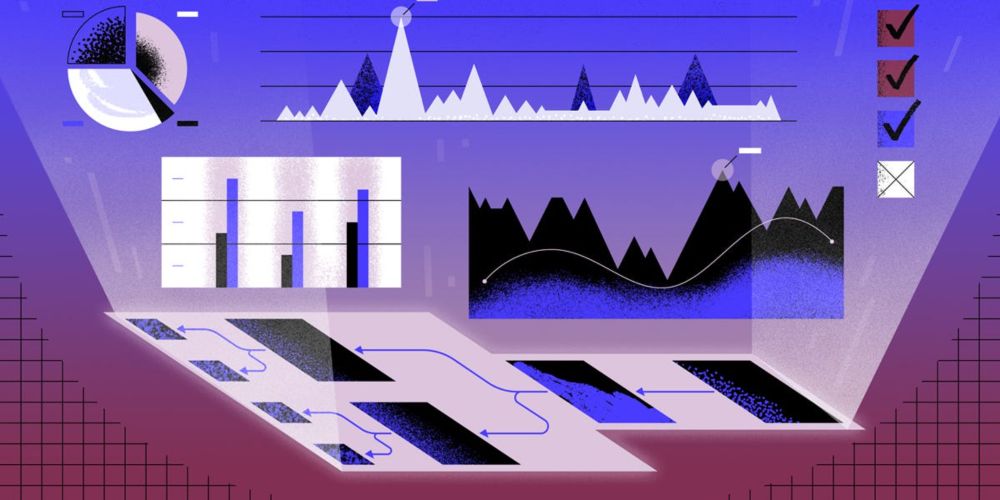

But modern distributed systems require more: you need immediate, surgical and complete visibility *across your stack* to fully understand system behavior.

And it starts with high-quality issue reporting powered by full-stack session recordings.

Internal tools don’t get the same love as customer-facing products, but the pain of debugging them is just as real.

👇 Check the full article: beyondruntime.substack.com/p/apis-dont-...

• Keep your test data clean, parameterized, and repeatable

• Continuously monitor the health of your test suite, track flaky tests, and evolve coverage as the system changes

• Write isolated tests that validate one behavior at a time, with strong assertions

• Use mocks, stubs, or service virtualization for dependencies
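
A minimal sketch of these practices in Python, using only the standard-library `unittest` and `unittest.mock`. The `order_total` function and the `price_client` dependency are hypothetical names made up for illustration; they are not part of any Multiplayer API.

```python
import unittest
from unittest.mock import Mock


def order_total(price_client, sku, quantity):
    """Hypothetical function under test: look up a unit price and total it."""
    unit_price = price_client.get_price(sku)
    return unit_price * quantity


class OrderTotalTest(unittest.TestCase):
    # Clean, parameterized, repeatable test data kept in one place:
    # (sku, unit price in cents, quantity, expected total in cents)
    CASES = [
        ("sku-1", 250, 2, 500),
        ("sku-2", 999, 1, 999),
        ("sku-3", 100, 0, 0),
    ]

    def test_total_is_unit_price_times_quantity(self):
        for sku, unit_price, quantity, expected in self.CASES:
            with self.subTest(sku=sku):
                # Stub the dependency so no real pricing service is called.
                price_client = Mock()
                price_client.get_price.return_value = unit_price

                total = order_total(price_client, sku, quantity)

                # Strong assertions: the exact value and the exact interaction.
                self.assertEqual(total, expected)
                price_client.get_price.assert_called_once_with(sku)
```

The test checks one behavior (the total is the unit price times the quantity) across several inputs, and the stubbed client keeps it isolated from any real dependency. Run it with `python -m unittest`.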

Here are a few proven best practices: 🧵

(and how Multiplayer full stack session recordings are built to solve that 😊)

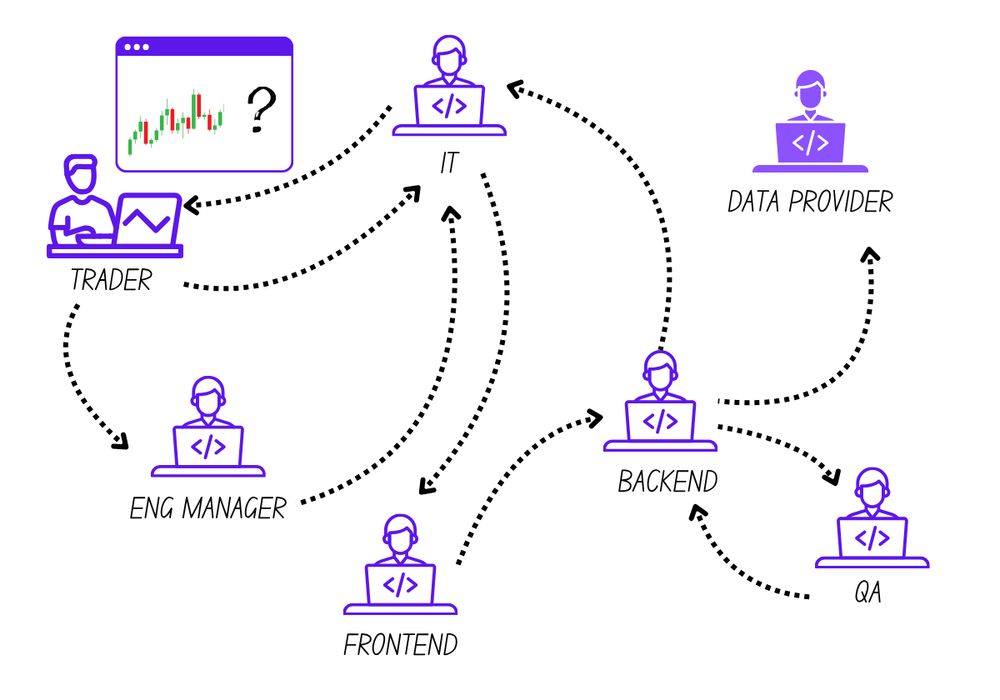

@farisaziz12.bsky.social is describing the nightmare of debugging vague support tickets, with blurry photos, no reproduction steps, and endless back-and-forth.

If yes, share the latest rabbit hole you fell into.

‣ Prioritize security.

‣ Be deliberate with receivers.

‣ Export with efficiency.

‣ Monitor the Collector itself.

The lesson I keep coming back to is simple: an observability framework is only as strong as its Collector configuration.