James Bland

@jamesbland.bsky.social

950 followers

1.5K following

310 posts

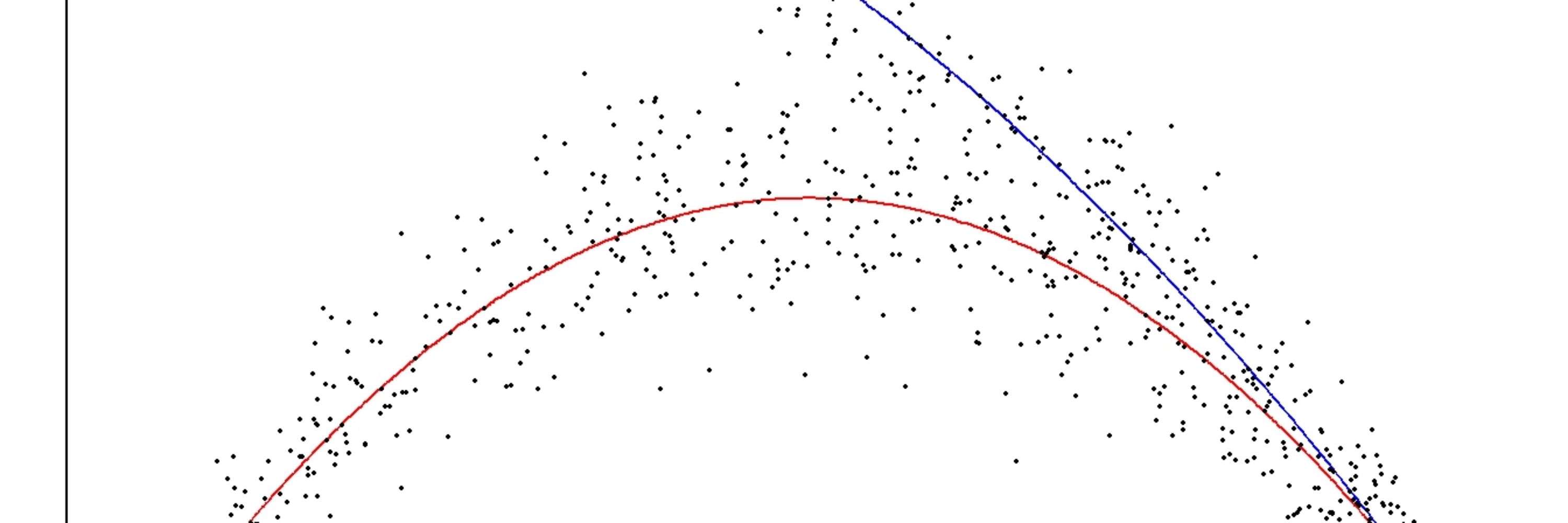

Economist at UToledo. 🇦🇺 Bayesian Econometrics for economic experiments and Behavioral Economics

Free online book on this stuff here: https://jamesblandecon.github.io/StructuralBayesianTechniques/section.html

https://sites.google.com/site/jamesbland/

He/his
