MIRI

@intelligence.org

47 followers

5 following

38 posts

For over two decades, the Machine Intelligence Research Institute (MIRI) has worked to understand and prepare for the critical challenges that humanity will face as it transitions to a world with artificial superintelligence.

Reposted by MIRI

Business Insider

@businessinsider.com

· Sep 16

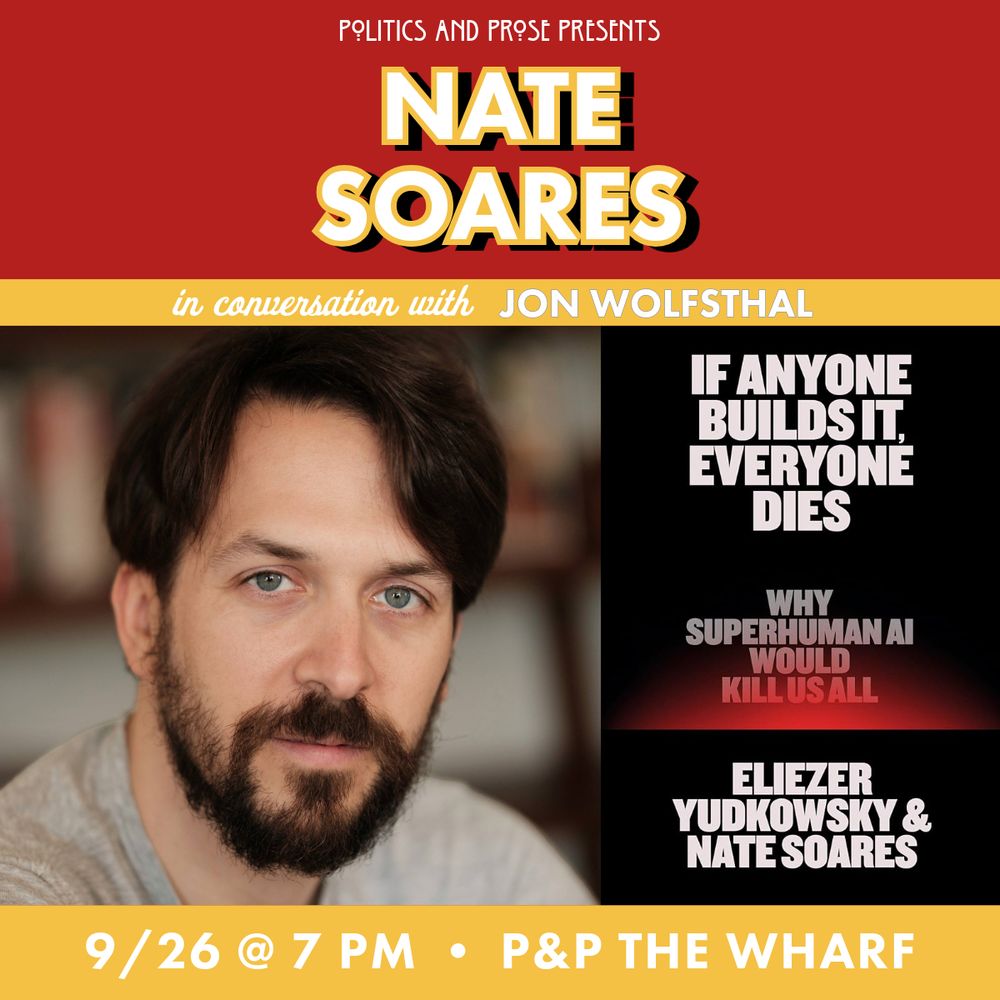

Forget woke chatbots — an AI researcher says the real danger is an AI that doesn't care if we live or die

AI researcher Eliezer Yudkowsky warned superintelligent AI could threaten humanity by pursuing its own goals over human survival.

www.businessinsider.com
