Cedric Clyburn

@cedricclyburn.com

190 followers

290 following

69 posts

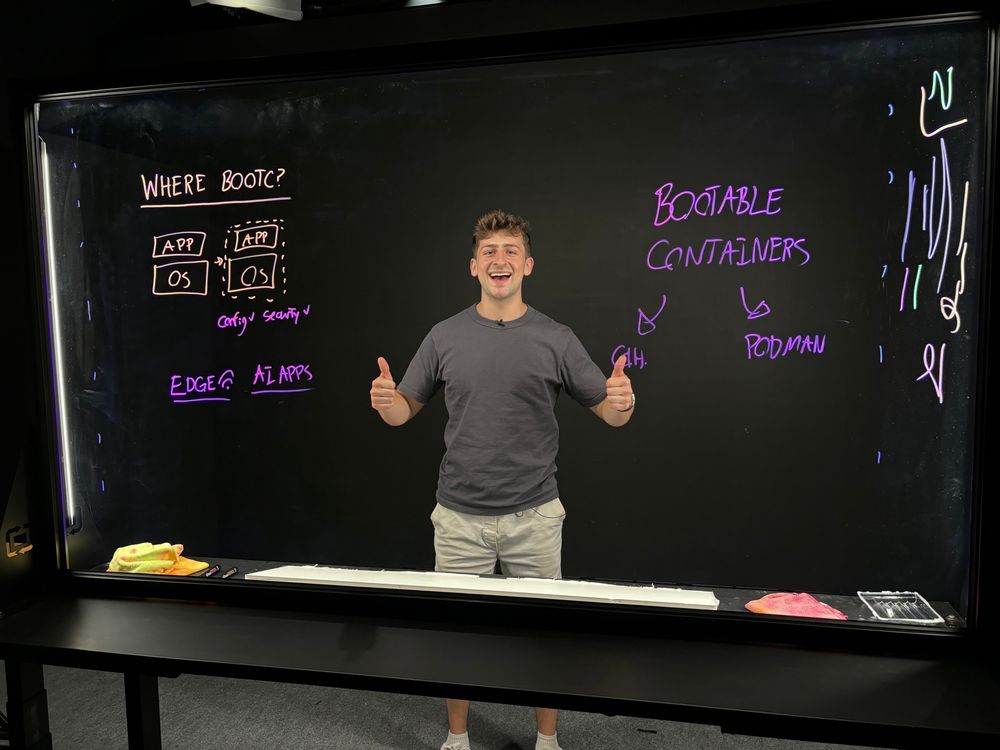

Developer Advocate at Red Hat • Organizer KCD New York • Previously at MongoDB • containers, k8s, & everything in between

Reposted by Cedric Clyburn

Devnexus

@devnexus.bsky.social

· Aug 28

Containers and #Kubernetes Made Easy: Deep Dive into Podman Desktop with Cedric Clyburn

In a compelling presentation at Devnexus, Cedric Clyburn guided the audience from the fundamentals of containerization to advanced AI integration using Podma...

devnexus.com
