Michal

@arathunku.com

850 followers

110 following

1.2K posts

https://arathunku.com

🧙‍♂️💻 #SRE & Platform things #Elixir #ElixirLang

🐕🐾 #luna #dog owner

🏃🏃 #running

🇵🇱 🧳🚗➡️ 🇩🇪



Michal

@arathunku.com

· 5d

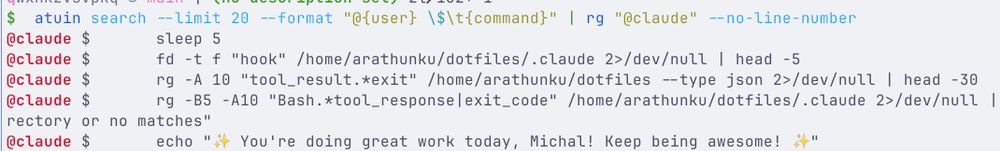

![$ anion hook-validate 'Bash' --command "elixir -e ''"
Validating: Bash with parameters: {"command":"elixir -e ''"}
CWD: /home/arathunku/code/github.com/arathunku/anion
Commands: ["elixir"]
Checking BLOCK rules...
✗ pattern="env *" - no match
✗ pattern="RAILS_ENV*" - no match
✗ pattern="RACK_ENV*" - no match
✗ pattern="NODE_ENV*" - no match
✗ pattern="MIX_ENV*" - no match
✗ pattern="printenv *" - no match
✗ exact="git" - no match
✗ exact="find" - no match
✗ pattern="grep *" - no match
✓ pattern="elixir -e*" - MATCH
Reason: Use mix or tidewave.
────────────────────────────────────────────────────────────
Decision: BLOCK
Reason: Use mix or tidewave.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:yww4iq4ogs7f4bmqbiwfzbck/bafkreicatc2a2vvyd5qkqaiay2ywges4dyqldhod3z2td7lkc42dj7nkxa@jpeg)
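The screenshot suggests the rules are checked in order and the first match decides. Below is a rough sketch of that idea in Python, not anion's actual implementation. It assumes that `pattern` rules are shell-style globs matched against the whole command string and that `exact` rules are compared against the command name only. The `Rule` type and the `first_block` function are hypothetical names, and only the `elixir -e*` rule has a reason visible in the screenshot.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
import shlex
from typing import Optional


@dataclass(frozen=True)
class Rule:
    kind: str          # "pattern" (glob on full command) or "exact" (command name)
    value: str
    reason: str = ""   # reasons for most rules are not shown in the screenshot


def first_block(command: str, rules: list[Rule]) -> Optional[Rule]:
    """Return the first BLOCK rule that matches, or None if nothing matches."""
    parts = shlex.split(command)
    name = parts[0] if parts else ""
    for rule in rules:
        # Assumption: "exact" compares against the command name only.
        if rule.kind == "exact" and rule.value == name:
            return rule
        # Assumption: "pattern" is a case-sensitive glob over the full command.
        if rule.kind == "pattern" and fnmatchcase(command, rule.value):
            return rule
    return None


# Rule list copied from the screenshot, in the same order.
RULES = [
    Rule("pattern", "env *"),
    Rule("pattern", "RAILS_ENV*"),
    Rule("pattern", "RACK_ENV*"),
    Rule("pattern", "NODE_ENV*"),
    Rule("pattern", "MIX_ENV*"),
    Rule("pattern", "printenv *"),
    Rule("exact", "git"),
    Rule("exact", "find"),
    Rule("pattern", "grep *"),
    Rule("pattern", "elixir -e*", "Use mix or tidewave."),
]

hit = first_block("elixir -e ''", RULES)
print("Decision:", "BLOCK" if hit else "ALLOW")
if hit:
    print("Reason:", hit.reason)
```

Under these assumptions, `elixir -e ''` falls through every rule until the last one, which matches the trace in the screenshot.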